Insights from "Code Llama: Open Foundation Models for Code"

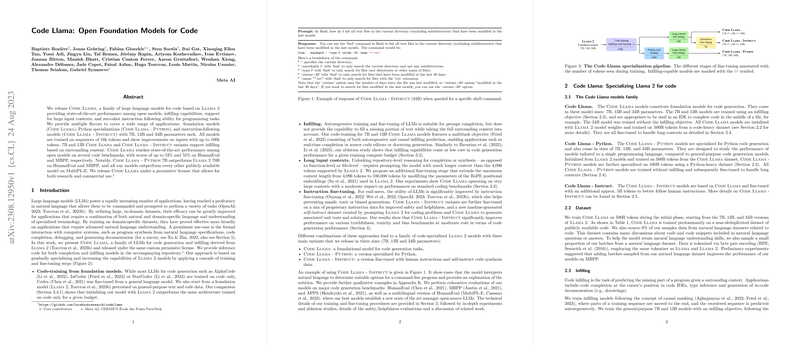

The paper "Code Llama: Open Foundation Models for Code" presents a family of LLMs for code generation and infilling, initialized from Llama 2 weights. The models, collectively called Code Llama, come in 7B, 13B, 34B, and 70B parameter sizes and are further trained on a code-heavy dataset (500B tokens for the 7B, 13B, and 34B models; 1T tokens for the 70B model). There are three variants: Code Llama, the foundation model; Code Llama - Python, specialized for Python; and Code Llama - Instruct, fine-tuned to follow instructions.

Training Methodologies and Model Variants

Each Code Llama model is produced by a cascade of training and fine-tuning steps that specialize Llama 2 for code:

- Code Training from a Foundation Model: Unlike earlier code generation models such as AlphaCode and StarCoder, which were trained predominantly on code, Code Llama starts from Llama 2 weights pretrained on a mixture of general-purpose text and code. The paper reports that, for the same training budget, this initialization outperforms the same architecture trained on code alone.

- Infilling Capability: Infilling training uses a multitask objective that mixes ordinary autoregressive prediction with causal infilling prediction, in which a missing span is predicted from the code before and after it. This lets the models complete code at the cursor given the surrounding context, which is useful for real-time completion in IDEs and for docstring generation.

- Long Input Contexts: Code Llama models are fine-tuned on sequences of 16,384 tokens, with the base period of RoPE (Rotary Position Embedding) raised from 10,000 to 1,000,000. The resulting models remain stable on inputs of up to about 100,000 tokens, which supports repository-level code understanding and synthesis.

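- Instruction Fine-tuning: Code Llama - Instruct models are fine-tuned on a mix of proprietary instruction data (the data used to instruction-tune Llama 2, which includes safety-oriented examples) and machine-generated self-instruct data, in which programming questions are paired with solutions that pass generated unit tests. The proprietary data targets helpfulness and safety, while the self-instruct data mainly improves coding ability.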
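The causal infilling objective described above can be illustrated with a small data transformation. The paper splits training documents into a prefix, a middle, and a suffix and moves the middle to the end, in either prefix-suffix-middle (PSM) or suffix-prefix-middle (SPM) order, so that an ordinary left-to-right model learns to generate the missing span. The sketch below is a minimal illustration under stated assumptions, not the paper's code: it splits on characters, the sentinel strings `<PRE>`, `<SUF>`, `<MID>`, `<EOT>` stand in for dedicated special tokens, the exact SPM sentinel ordering is an assumed variant, the rates `p_infill` and `p_spm` are illustrative parameters, and the helper names are hypothetical.

```python
import random

# Hypothetical sentinel strings; a real tokenizer uses dedicated special tokens.
PRE, SUF, MID, EOT = "<PRE>", "<SUF>", "<MID>", "<EOT>"


def split_document(doc: str, rng: random.Random) -> tuple[str, str, str]:
    """Cut a document at two random character positions into prefix/middle/suffix."""
    i, j = sorted(rng.randint(0, len(doc)) for _ in range(2))
    return doc[:i], doc[i:j], doc[j:]


def to_infilling_example(doc: str, rng: random.Random,
                         p_infill: float = 0.9, p_spm: float = 0.5) -> str:
    """Turn one document into a training string for a left-to-right model.

    With probability 1 - p_infill the document is kept as a plain
    autoregressive example. Otherwise the middle span is moved to the end,
    so predicting it requires using both the prefix and the suffix.
    """
    if rng.random() >= p_infill:
        return doc
    prefix, middle, suffix = split_document(doc, rng)
    if rng.random() < p_spm:
        # Suffix-prefix-middle ordering (assumed sentinel layout).
        return f"{PRE}{SUF}{suffix}{MID}{prefix}{middle}{EOT}"
    # Prefix-suffix-middle ordering.
    return f"{PRE}{prefix}{SUF}{suffix}{MID}{middle}{EOT}"


if __name__ == "__main__":
    rng = random.Random(0)
    source = "def add(a, b):\n    return a + b\n"
    for _ in range(3):
        print(repr(to_infilling_example(source, rng)))
```

Because the middle always comes last, the loss on the tokens after `<MID>` trains exactly the behavior an IDE needs: generating the code that belongs between what is above and below the cursor.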
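The effect of raising the RoPE base period, mentioned in the long-context point above, can be made concrete by computing the rotation frequencies. In standard RoPE, dimension pair i of a head of size d rotates with frequency base^(-2i/d), so its wavelength is 2π·base^(2i/d) positions. The sketch below compares the two base values from the paper (10,000 for Llama 2 and 1,000,000 for Code Llama's long-context fine-tuning). It assumes a per-head dimension of 128, which matches the Llama 2 model configurations; the function names are illustrative.

```python
import math


def rope_inverse_frequencies(head_dim: int, base: float) -> list[float]:
    """Rotation frequency base ** (-2i / head_dim) for each dimension pair i."""
    return [base ** (-2 * i / head_dim) for i in range(head_dim // 2)]


def rope_wavelengths(head_dim: int, base: float) -> list[float]:
    """Number of positions after which each dimension pair completes a full turn."""
    return [2 * math.pi / f for f in rope_inverse_frequencies(head_dim, base)]


if __name__ == "__main__":
    head_dim = 128  # per-head dimension of the Llama 2 models
    for base in (10_000.0, 1_000_000.0):
        longest = max(rope_wavelengths(head_dim, base))
        print(f"base={base:>11,.0f}  longest wavelength ~ {longest:,.0f} tokens")
```

With these settings the longest wavelength grows by a factor of 100^(126/128), roughly 93-fold. Slower rotations mean that positions far apart still receive distinguishable, less rapidly varying encodings, which is the intuition behind fine-tuning with a larger base before working with very long inputs.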
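The self-instruct data behind Code Llama - Instruct is filtered by execution: the paper generates programming questions with Llama 2 70B, generates unit tests and several candidate Python solutions with Code Llama 7B, and keeps the first solution that passes the tests. The sketch below shows only that filtering step, under assumptions: candidates and tests arrive as source strings, the tests are plain `assert` statements, and an unsandboxed `exec` stands in for the isolated, time-limited execution a real pipeline would need. `passes_tests` and `first_passing_solution` are hypothetical helper names.

```python
from typing import Optional


def passes_tests(solution_src: str, test_src: str) -> bool:
    """Run a candidate solution, then its assert-based tests, in a fresh namespace.

    WARNING: exec() runs arbitrary code with no sandbox or timeout; this only
    illustrates the filtering logic.
    """
    namespace: dict = {}
    try:
        exec(solution_src, namespace)
        exec(test_src, namespace)
    except Exception:  # syntax errors, failed asserts, runtime errors
        return False
    return True


def first_passing_solution(candidates: list[str], test_src: str) -> Optional[str]:
    """Return the first candidate that passes the tests, or None if none do."""
    for candidate in candidates:
        if passes_tests(candidate, test_src):
            return candidate
    return None


if __name__ == "__main__":
    tests = "assert is_even(4)\nassert not is_even(7)\n"
    candidates = [
        "def is_even(n):\n    return n % 2 == 1\n",  # wrong: the asserts fail
        "def is_even(n):\n    return n % 2 == 0\n",  # correct
    ]
    print(first_passing_solution(candidates, tests))
```

Filtering by execution trades recall for precision: questions whose candidates all fail are simply dropped, so the surviving question-solution pairs are more likely to be correct.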

Evaluation and Performance Metrics

The models are evaluated on several code-generation and safety benchmarks:

- HumanEval and MBPP Benchmarks: Code Llama achieves state-of-the-art results among open models, with the best 70B variants reaching up to 67% pass@1 on HumanEval and 65% on MBPP. Notably, Code Llama - Python 7B outperforms Llama 2 70B on both benchmarks, indicating substantial gains from code specialization.

- MultiPL-E Benchmark: On MultiPL-E, which translates HumanEval problems into other languages such as C++, Java, PHP, TypeScript, C#, and Bash, Code Llama models outperform Llama 2 models of the same size in every language tested. Code Llama - Python further enhances performance, showing clear advantages over general-purpose models.

- Safety and Bias Evaluations: The paper measures truthfulness, toxicity, and bias with the TruthfulQA, ToxiGen, and BOLD datasets, respectively. Code Llama - Instruct shows clear improvements in truthfulness and lower toxicity, making these models better suited for safer deployment.
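The pass@1 scores above use the pass@k metric: a problem counts as solved if at least one of k generated programs passes its unit tests. When n ≥ k samples are drawn per problem and c of them pass, pass@k is commonly estimated with the unbiased estimator introduced alongside HumanEval (Chen et al., 2021), 1 − C(n−c, k)/C(n, k), averaged over problems. The sketch below implements that formula; the function names are illustrative, and it is not claimed to be the paper's evaluation code.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: generated samples, c: samples that pass the tests, k: evaluation budget.
    Equals 1 minus the probability that a random size-k subset has no passing sample.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every size-k subset must contain at least one passing sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, given one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    per_problem = [(10, 3), (10, 0), (10, 10)]  # made-up (n, c) counts
    for k in (1, 5):
        print(f"pass@{k} = {mean_pass_at_k(per_problem, k):.3f}")
```

For a single problem with 10 samples of which 3 pass, pass@1 is 0.3 (the fraction of passing samples) while pass@5 is 1 − 21/252 = 11/12, about 0.917, which shows why a larger budget k rewards models that are occasionally right.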

Practical and Theoretical Implications

Code Llama has several practical implications:

- Enhanced IDE Assistance: Real-time code completion and docstring generation make these models promising aids for developer productivity.

- Code Understanding: The ability to handle long sequences is particularly valuable for understanding and improving large codebases, and it could support features such as repository-wide refactoring and bug detection.

- Ethical Deployment: Instruction fine-tuning steers the models toward safer outputs, which can reduce the risk of generating harmful or biased content, an important consideration for responsible AI deployment.

Theoretically, the research underscores the value of combining general-purpose pretraining with domain-specific fine-tuning. Its techniques for long-context handling and infilling are particularly noteworthy and suggest directions for extending the versatility and scalability of LLMs.

Future Developments and Speculations

Looking forward, advancements might focus on:

- Enhanced Context Handling: Extending context handling well beyond 100,000 tokens could help models reason about even larger repositories or complex system-level interactions.

- Cross-Language Code Synthesis: Developing unified models capable of seamlessly synthesizing code across multiple languages could reduce the overhead needed for maintaining multilingual systems.

- Adaptive Fine-tuning: Leveraging continual learning to adapt models progressively based on evolving coding standards, security practices, and contextual nuances could make these LLMs increasingly relevant and robust.

In conclusion, the Code Llama paper presents a systematic approach to advancing code generation models and places Code Llama among the leading open models for AI-driven programming tools. Its thorough evaluations and targeted specializations suggest a promising trajectory for both practical applications and further research.