This paper tackles the challenge of generating videos from a text description by transforming the usual high-dimensional video generation problem into an image generation problem. The work is important because creating videos that accurately capture both the content and sequential dynamics described in text has been much more computationally demanding than generating single images. By rethinking how to represent a video, the paper presents a method that reduces the video’s temporal complexity while maintaining high visual quality and temporal consistency.

Background and Motivation

Videos are inherently complex because they consist of many frames that change over time. Traditional approaches build models that must handle both spatial information (what is in each frame) and temporal information (how frames change over time). This paper makes two key observations:

- Existing text-to-image methods (like diffusion models) have made impressive strides recently in generating high-quality images from text.

- Video generation can benefit from rethinking the representation, so that techniques proven for images can be applied to videos.

Core Idea: Representing Videos as Grid Images

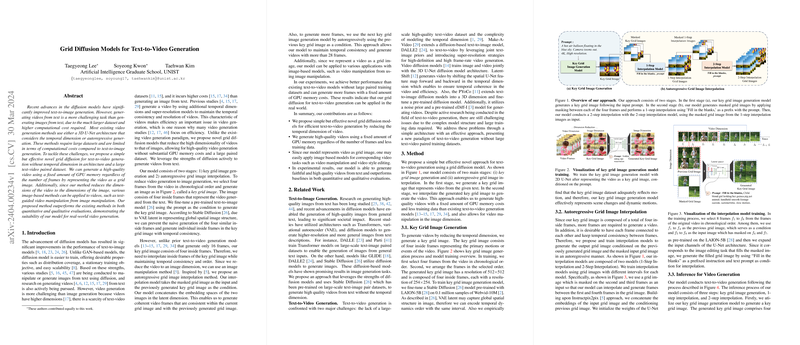

Instead of generating every frame individually or dealing directly with the entire temporal dimension, the paper proposes to represent a video as a grid image. Here’s how that works:

- Key Grid Image Generation:

  - Four key frames from a video are selected in chronological order.

  - These frames are arranged into a 2×2 grid to form a single “key grid image” that captures important moments of the video.

  - A pre-trained text-to-image model is fine-tuned with this grid image representation, which allows it to understand and generate the spatial layout of multiple frames at once.

- Autoregressive Grid Image Interpolation:

  - Since a grid image with four frames does not cover the full sequence needed in a video, an interpolation model is applied to generate intermediate frames.

  - The interpolation is done in two stages: first, a “1-step interpolation” fills in the gaps between the key grid image frames by masking parts of the grid image and then “filling in the blanks” with guidance from the text prompt.

  - Next, a “2-step interpolation” further refines these results by ensuring smooth transitions and temporal consistency.

  - The process is autoregressive, meaning that each generated grid image is conditioned on the previous one. This helps the video maintain a coherent progression over time.
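The grid representation and the masking step described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the paper's implementation: the function names (`frames_to_grid`, `grid_to_frames`, `masked_grid`) are hypothetical, the chronological order is assumed to run left to right and then top to bottom, and the choice of which cells are masked for interpolation is an assumption made only for the example.

```python
import numpy as np


def frames_to_grid(frames):
    """Tile four equally sized frames (H, W, C) into one 2x2 grid (2H, 2W, C).

    Assumed layout: frame 0 top-left, 1 top-right, 2 bottom-left, 3 bottom-right.
    """
    if len(frames) != 4:
        raise ValueError("expected exactly four frames")
    top = np.concatenate([frames[0], frames[1]], axis=1)
    bottom = np.concatenate([frames[2], frames[3]], axis=1)
    return np.concatenate([top, bottom], axis=0)


def grid_to_frames(grid):
    """Split a 2x2 grid image back into its four frames, in the same order."""
    h, w = grid.shape[0] // 2, grid.shape[1] // 2
    return [grid[:h, :w], grid[:h, w:], grid[h:, :w], grid[h:, w:]]


def masked_grid(first, last):
    """Build an interpolation input from two known frames.

    Assumption for illustration: the known frames occupy the first and last
    cells, and the two middle cells are blank and marked True in the mask,
    meaning "to be filled in by the interpolation model".
    """
    blank = np.zeros_like(first)
    grid = frames_to_grid([first, blank, blank, last])
    h, w = first.shape[:2]
    mask = np.zeros((2 * h, 2 * w), dtype=bool)
    mask[:h, w:] = True  # top-right cell
    mask[h:, :w] = True  # bottom-left cell
    return grid, mask
```

With this layout an image model sees one ordinary image of twice the height and width, and splitting its output with `grid_to_frames` recovers four frames in temporal order.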

Advantages and Extensions

This approach brings several benefits over traditional video generation techniques:

- Efficiency:

  - By reducing the generation process to operations in the image domain, the method uses a nearly constant amount of GPU memory regardless of video length. Even when generating more frames, memory consumption stays close to that of generating a single image.

- Data Efficiency:

  - The method requires a smaller text-video paired dataset than other state-of-the-art video generation methods.

- Flexibility:

  - Representing videos as grid images means that techniques developed for image manipulation, such as style editing, can be applied directly to videos.

- Temporal Consistency:

  - Autoregressive interpolation, conditioned on previous grid images, helps the motion and content evolve coherently even though the video is generated as separate grid images.
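The memory argument can be made concrete with a rough element count. The sketch below is only a proxy: it counts the values in the tensor a model works on per step, not actual GPU memory, and `step_fn` is a hypothetical stand-in for the interpolation model. It shows that a grid image has the same size however long the video is, while a full video tensor grows linearly with the number of frames.

```python
import numpy as np


def video_tensor_elems(num_frames, height, width, channels=3):
    """Elements in a tensor that holds every frame of the video at once."""
    return num_frames * height * width * channels


def grid_tensor_elems(height, width, channels=3):
    """Elements in one 2x2 grid image; independent of video length."""
    return 4 * height * width * channels


def generate_autoregressive(first_grid, step_fn, num_steps):
    """Yield grid images one at a time, each conditioned on the previous one.

    Only the previous grid is kept between steps, so the working set
    does not grow with num_steps.
    """
    prev = first_grid
    for _ in range(num_steps):
        prev = step_fn(prev)  # step_fn is a placeholder for the model
        yield prev
```

Because `generate_autoregressive` is a generator, a caller can write each grid to disk as it arrives instead of holding the whole video in memory.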

Experimental Evaluation

The paper includes thorough experiments evaluating the quality and performance of the proposed method on well-known video datasets. Some key points from the experiments include:

- The method was evaluated with several metrics: CLIPSIM (which measures how well the generated frames match the text description), Fréchet Video Distance (FVD), and Inception Score (IS).

- In quantitative evaluations, the new approach demonstrated state-of-the-art performance, even when trained on a relatively small paired dataset.

- Human evaluations confirmed that videos generated by this method were better matched to the text prompts, with improved motion quality and temporal consistency compared to other methods.

- Efficiency benchmarks revealed that the GPU memory used remains nearly constant regardless of video length, a significant improvement over previous approaches that see a marked increase in resource consumption with more frames.
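As an illustration of the text-alignment metric, CLIPSIM is commonly computed as the average CLIP similarity between the prompt and each generated frame. The sketch below assumes the text and frame embeddings have already been produced by a CLIP model (not loaded here) and uses plain cosine similarity; the function name `clipsim` is hypothetical, and the exact scaling used in the paper's tables may differ.

```python
import numpy as np


def clipsim(text_emb, frame_embs):
    """Mean cosine similarity between one text embedding and N frame embeddings.

    text_emb:   array of shape (D,)
    frame_embs: array of shape (N, D), one row per generated frame
    """
    text_emb = np.asarray(text_emb, dtype=np.float64)
    frame_embs = np.asarray(frame_embs, dtype=np.float64)
    t = text_emb / np.linalg.norm(text_emb)
    f = frame_embs / np.linalg.norm(frame_embs, axis=1, keepdims=True)
    return float(np.mean(f @ t))
```

A higher score means the frames sit closer to the prompt in the embedding space; it says nothing about motion quality, which is why FVD and human evaluation are reported alongside it.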

Conclusion and Implications

The proposed grid diffusion model shows that video generation can be simplified by transforming the task into an image generation problem. By generating a key grid image and then interpolating additional frames autoregressively, the approach reduces computational demands while still capturing the necessary temporal dynamics. In the reported experiments the method outperforms the compared baselines, and it also opens up new possibilities for applications such as text-guided video editing.

Overall, the work offers a promising direction for efficient, high-quality video synthesis from text, making it easier to generate dynamic video content with models originally built for images.