Enhancing General LLM Performance on Domain-Specific Tasks with BLADE

Introduction to BLADE

The continuous evolution of language models such as GPT-3 and BERT has significantly advanced the field of natural language processing. Although these models generalize across a wide range of tasks, they often stumble on domain-specific tasks that require nuanced, specialized knowledge. The recent work on BLADE (Black-box LArge language models with small Domain-spEcific models) addresses this challenge. Conventional approaches either fine-tune the large model, which is resource-intensive, or rely on retrieval augmentation, which can be unreliable. BLADE instead introduces a framework that supplies domain-specific knowledge to a general LLM without either drawback.

Core Components of BLADE

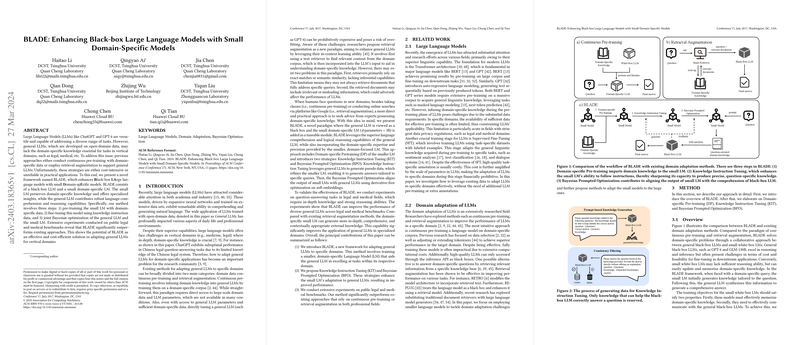

BLADE consists of three steps designed to combine the domain-specific knowledge of a small language model (LM) with the broad reasoning abilities of a general LLM. These steps are:

- Domain-specific Pretraining (DP): The small LM is pretrained on a domain-specific corpus. This step infuses the model with specialized knowledge of the target domain.

- Knowledge Instruction Tuning (KIT): After pretraining, the small LM is further tuned to generate precise knowledge tailored to a given question. This is achieved by producing and refining pseudo data using knowledge instructions.

- Bayesian Prompted Optimization (BPO): This final step leverages Bayesian optimization. Operating on soft embeddings, it aligns the output of the domain-specific small LM with the general black-box LLM, ensuring that the specialized knowledge is effectively utilized.
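
To make the idea behind the BPO step more concrete, here is a minimal, standard-library-only sketch of Bayesian optimization over a small continuous vector. It is not the authors' implementation. The two-dimensional search vector stands in for a soft embedding, `toy_score` is a hypothetical stand-in for the feedback a black-box LLM would give on the knowledge generated by the small LM, and the Gaussian-process surrogate with an upper-confidence-bound rule is one common choice rather than necessarily the one used in BLADE.

```python
import math
import random

def rbf(a, b, length=0.5):
    """Squared-exponential kernel between two points."""
    d2 = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-d2 / (2 * length ** 2))

def cholesky(A):
    """Lower-triangular factor L with A = L L^T."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(max(A[i][i] - s, 1e-12))
            else:
                L[i][j] = (A[i][j] - s) / L[j][j]
    return L

def solve_lower(L, b):
    """Forward substitution: solve L y = b."""
    y = []
    for i in range(len(b)):
        y.append((b[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i])
    return y

def solve_upper_t(L, y):
    """Back substitution: solve L^T x = y."""
    n = len(y)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x

def gp_fit(X, y, noise=1e-3):
    """Factor the kernel matrix once per round; y must be centered."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(X)]
         for i, a in enumerate(X)]
    L = cholesky(K)
    return L, solve_upper_t(L, solve_lower(L, y))

def gp_predict(X, L, alpha, x_new):
    """Posterior mean (relative to the centered targets) and std at x_new."""
    k = [rbf(a, x_new) for a in X]
    mean = sum(ki * ai for ki, ai in zip(k, alpha))
    v = solve_lower(L, k)
    var = max(1.0 - sum(vi * vi for vi in v), 0.0)
    return mean, math.sqrt(var)

def bayes_opt(score, dim=2, n_init=4, n_iter=12, n_cand=100, seed=0):
    """Maximize a black-box score over [-1, 1]^dim with a GP and UCB."""
    rng = random.Random(seed)

    def sample():
        return [rng.uniform(-1.0, 1.0) for _ in range(dim)]

    X = [sample() for _ in range(n_init)]
    y = [score(x) for x in X]
    for _ in range(n_iter):
        mu = sum(y) / len(y)
        L, alpha = gp_fit(X, [v - mu for v in y])

        def ucb(c):
            m, s = gp_predict(X, L, alpha, c)
            return m + 2.0 * s  # favor high predicted score or high uncertainty

        best = max((sample() for _ in range(n_cand)), key=ucb)
        X.append(best)
        y.append(score(best))
    i = max(range(len(y)), key=y.__getitem__)
    return X[i], y[i], y

def toy_score(z):
    # Hypothetical stand-in for black-box LLM feedback; peaks at (0.3, -0.5).
    target = [0.3, -0.5]
    return -sum((a - b) ** 2 for a, b in zip(z, target))

best_z, best_score, history = bayes_opt(toy_score)
print(best_z, best_score)
```

The point of the sketch is that the optimizer only ever calls `score`, so it needs no gradients from the model being scored, which is what makes this family of methods usable with a black-box LLM.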

Together, these steps yield a framework in which detailed domain knowledge from the small LM is combined with the broad reasoning capabilities of the general LLM.
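
At inference time, the flow described above can be sketched roughly as follows. This is an illustrative outline under stated assumptions, not the paper's code: `blade_answer`, `build_prompt`, the prompt wording, and both toy model functions are hypothetical names introduced here, and the soft prompt is represented as a plain list of floats.

```python
from typing import Callable, Sequence

# Hypothetical interfaces: the small LM takes a question plus a soft prompt
# and returns generated knowledge; the black-box LLM maps text to text.
SmallLM = Callable[[str, Sequence[float]], str]
BlackBoxLLM = Callable[[str], str]

def build_prompt(question: str, knowledge: str) -> str:
    """Combine generated knowledge and the question into one text prompt."""
    return (
        "Use the background knowledge to answer the question.\n"
        f"Knowledge: {knowledge}\n"
        f"Question: {question}\n"
        "Answer:"
    )

def blade_answer(question: str, small_lm: SmallLM,
                 black_box_llm: BlackBoxLLM,
                 soft_prompt: Sequence[float]) -> str:
    """Small LM generates knowledge, then the general LLM answers with it."""
    knowledge = small_lm(question, soft_prompt)
    return black_box_llm(build_prompt(question, knowledge))

# Toy stand-ins so the sketch runs without any model or API access.
def toy_small_lm(question: str, soft_prompt: Sequence[float]) -> str:
    return "A contract requires offer and acceptance."

def toy_black_box_llm(prompt: str) -> str:
    return "yes" if "offer and acceptance" in prompt else "unknown"

answer = blade_answer(
    "Is acceptance required to form a contract?",
    toy_small_lm,
    toy_black_box_llm,
    soft_prompt=[0.0, 0.0],
)
print(answer)
```

Note that the general LLM is only called through its text interface, so its weights never need to be accessed or updated.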

Performance Evaluation and Results

Empirical results support the efficacy of BLADE on benchmarks in the legal and medical fields. The framework was assessed on public benchmarks, including JEC-QA for legal tasks and MLEC-QA for medical tasks.

The reported results show significant improvements over existing domain adaptation methods. When applied to general LLMs, BLADE consistently outperformed these methods on both the legal and the medical benchmarks. This suggests that the framework makes effective use of domain-specific knowledge while leaving the general LLM itself unchanged.
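
Benchmarks of this kind are multiple-choice question sets, so a comparison between a baseline and a knowledge-augmented system typically comes down to accuracy. The snippet below is a small sketch of an exact-match accuracy computation. The function name is hypothetical, and the option letters are made-up toy data for illustration only, not results from the BLADE paper.

```python
def exact_match_accuracy(predictions, gold):
    """Fraction of questions whose predicted option set equals the gold set."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    if not gold:
        return 0.0
    correct = sum(set(p) == set(g) for p, g in zip(predictions, gold))
    return correct / len(gold)

# Toy data only: each string lists the selected option letters for a question.
gold = ["A", "BD", "C", "AC"]
baseline = ["A", "B", "D", "AC"]
with_knowledge = ["A", "BD", "D", "AC"]

print(exact_match_accuracy(baseline, gold))
print(exact_match_accuracy(with_knowledge, gold))
```

Comparing option sets rather than raw strings means a multi-answer question counts as correct only when every selected option matches, regardless of the order in which the letters are written.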

Theoretical and Practical Implications

The BLADE approach represents a different paradigm for domain adaptation of LLMs. Theoretically, it decouples domain knowledge from the general model: the domain-specific LM is not fine-tuned into the large LLM but is instead coupled to it through a knowledge embedding process. Practically, it offers a cost-efficient alternative to extensive pretraining or retraining of LLMs on domain-specific datasets. By using smaller domain-specific models, BLADE mitigates the risk of overfitting and reduces the computational cost associated with adapting LLMs directly.

Future Prospects in LLM Domain Adaptation

The success of BLADE opens new directions for the domain adaptation of LLMs. Going forward, one could explore enhancing the knowledge generation capacity of the small LM, or improving the synergy between the domain-specific LM and the general LLM through more refined optimization techniques. Extending BLADE to more diverse domains and tasks could also further validate its adaptability and scalability.

Conclusion

BLADE represents a notable advancement in the integration of domain-specific knowledge into general LLMs. Through its innovative three-step framework, it showcases an effective means of enhancing the performance of LLMs on domain-specific tasks without the conventional challenges of resource-intensive fine-tuning or unreliable retrieval augmentation. The promising results on legal and medical benchmarks reflect its potential as a viable solution for domain adaptation challenges in AI.