Exploring the Frontier of Logic Code Simulation with LLMs

Introduction

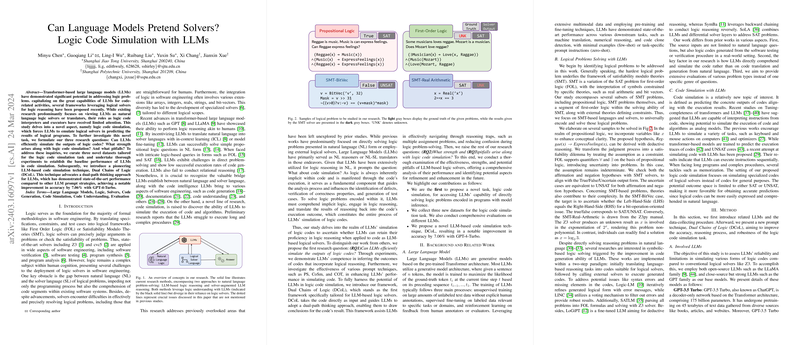

Recent advances in artificial intelligence and software engineering have made the potential of transformer-based large language models (LLMs) to tackle logic problems increasingly evident. This paper ventures into relatively unexplored territory: using LLMs not just as tools for understanding or translating logic code, but as simulators that predict the outcomes of logic programs. By formulating new research questions and introducing new datasets and a new method, the paper assesses whether LLMs can act as logic solvers themselves.

Logic Code Simulation with LLMs

At the core of this research lies the question of whether LLMs can effectively simulate logic code, that is, predict the output that executing a logic program would produce. This requires comprehending the program's logic, reasoning over it, and translating the result of that reasoning back into the expected output of the code. Through extensive experiments on newly curated datasets tailored to logic code simulation, the paper introduces a new technique, Dual Chains of Logic (DCoL), which significantly outperforms the existing strategies it is compared against and marks a substantial step forward for LLMs on this task.
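To make the task concrete: a Z3 script typically declares variables, asserts constraints, and prints the result of a satisfiability check. The sketch below computes that ground-truth answer for a tiny propositional formula by exhaustive search, standing in for an actual solver run. The clause encoding and the name `simulate_check` are illustrative assumptions, not part of the paper's datasets; an LLM simulating the code has to predict the same string without executing anything.

```python
from itertools import product

# Illustrative encoding (an assumption, not the paper's format): a formula in
# conjunctive normal form, where each clause is a list of DIMACS-style literals.
# Literal 3 means "x3 is true"; literal -3 means "x3 is false".


def _literal_holds(lit: int, assignment: tuple[bool, ...]) -> bool:
    """Evaluate one literal under an assignment (assignment[i] is x(i+1))."""
    value = assignment[abs(lit) - 1]
    return value if lit > 0 else not value


def simulate_check(num_vars: int, clauses: list[list[int]]) -> str:
    """Return what a solver's check would report: 'sat' or 'unsat'.

    Exhaustive search over all 2**num_vars assignments, so this is only
    suitable for tiny formulas; real solvers use far more efficient algorithms.
    """
    for assignment in product((False, True), repeat=num_vars):
        if all(any(_literal_holds(lit, assignment) for lit in clause)
               for clause in clauses):
            return "sat"
    return "unsat"


# x1 or x2, not x1, not x2: no assignment satisfies all three clauses.
print(simulate_check(2, [[1, 2], [-1], [-2]]))  # unsat
# Dropping the last clause leaves x1 = False, x2 = True as a model.
print(simulate_check(2, [[1, 2], [-1]]))        # sat
```

A real solver such as Z3 can also answer `unknown` (for example on undecidable or timed-out queries); exhaustive propositional search never does, which is one reason this is only a toy stand-in.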

Datasets and Experimentation

The paper introduces three new datasets drawn from the solver community, Z3Tutorial, Z3Test, and SMTSim, which together cover diverse logic simulation problems. These datasets are used to systematically evaluate several LLMs, including GPT-3.5 Turbo, GPT-4 Turbo, and LLaMA-2-13B models, on the proposed logic code simulation task. The DCoL technique encourages the models to reason along two paths rather than one. This dual-path approach substantially improves accuracy and robustness on code simulation, with GPT-4 Turbo's accuracy improving by 7.06%.
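The dual-path idea can be sketched as a prompt template plus an answer parser. The summary does not spell out what the two paths are, so this sketch assumes one chain argues for satisfiability (building a model) and the other argues for unsatisfiability (deriving a contradiction). The template wording, `build_dcol_prompt`, and `parse_answer` are hypothetical, not the paper's exact DCoL prompt.

```python
import re

# Hypothetical template: the two-chain wording below is an assumption made
# for illustration and is not the exact DCoL prompt from the paper.
DCOL_TEMPLATE = """You are simulating the following logic program without executing it.

{code}

Reason along two separate chains of logic:
Chain A: assume the constraints are satisfiable and try to construct a model.
Chain B: assume the constraints are unsatisfiable and try to derive a contradiction.
Compare the two chains, then end with exactly one line of the form
ANSWER: sat | unsat | unknown
"""

# Matches a final verdict line such as "ANSWER: unsat" (case-insensitive).
_ANSWER_RE = re.compile(r"^ANSWER:\s*(sat|unsat|unknown)\s*$",
                        re.IGNORECASE | re.MULTILINE)


def build_dcol_prompt(code: str) -> str:
    """Insert the program text into the dual-chain template."""
    return DCOL_TEMPLATE.format(code=code)


def parse_answer(response: str) -> str:
    """Return the last verdict line in the model's response, or 'unknown'."""
    matches = _ANSWER_RE.findall(response)
    return matches[-1].lower() if matches else "unknown"


prompt = build_dcol_prompt("s.add(x, Not(x))")
print(parse_answer("Chain A fails.\nChain B finds x and Not(x).\nANSWER: unsat"))
```

Falling back to `unknown` when no verdict line is present keeps an unparseable response from being silently scored as `sat` or `unsat`.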

Findings and Implications

The experiments reveal both the capabilities and the limitations of current LLMs in simulating logic code. The GPT-series models show a strong aptitude for logic simulation, reflecting solid code understanding and reasoning. The LLaMA models, though effective, more often produce "unknown" outcomes, which points to an area where these models could be refined.
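Both quantities discussed here, accuracy and the tendency to answer "unknown", can be measured with a small scorer. This is a sketch assuming each model response has already been normalized to `sat`, `unsat`, or `unknown` and is compared with the output the solver actually produced; the function name `summarize` and the toy labels are hypothetical and are not results from the paper.

```python
def summarize(predictions: list[str], gold: list[str]) -> dict[str, float]:
    """Score simulated outputs against the outputs the solver actually produced.

    Both lists hold one normalized verdict ('sat', 'unsat' or 'unknown') per
    logic program, in the same order.
    """
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    if not gold:
        raise ValueError("need at least one example")
    correct = sum(p == g for p, g in zip(predictions, gold))
    return {
        "accuracy": correct / len(gold),
        # Share of programs where the model declined to commit to an answer.
        "unknown_rate": predictions.count("unknown") / len(predictions),
    }


# Toy labels for illustration only.
print(summarize(["sat", "unknown", "unsat", "unknown"],
                ["sat", "unsat", "unsat", "sat"]))
# {'accuracy': 0.5, 'unknown_rate': 0.5}
```

Note that if the solver itself returned `unknown` for a program, an `unknown` prediction counts as correct under this scheme, so a high unknown rate is not automatically an error.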

A notable strength identified in the paper is that LLMs can still process and simulate logic code that contains syntax errors, which indicates a useful degree of robustness and flexibility. The paper also points to LLMs' potential to move beyond some of the theoretical limitations inherent to traditional solvers, a promising avenue for future developments in logic problem solving.
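The contrast with a conventional toolchain is easy to illustrate: a solver front end typically rejects a script with a syntax error before doing any reasoning at all. The sketch below shows one such structural check, an unbalanced parenthesis in an SMT-LIB script. It is a deliberately minimal illustration, not Z3's actual parser, and `find_paren_error` is a hypothetical name; per the paper's findings, an LLM reading the same broken text may still be able to simulate it.

```python
from typing import Optional


def find_paren_error(script: str) -> Optional[str]:
    """Report the first parenthesis imbalance in an SMT-LIB script, or None.

    Deliberately minimal: ';' starts a line comment, but string literals and
    |quoted symbols| are not handled, so parentheses inside them are counted.
    """
    depth = 0
    for lineno, line in enumerate(script.splitlines(), start=1):
        code = line.split(";", 1)[0]  # drop the comment part of the line
        for ch in code:
            if ch == "(":
                depth += 1
            elif ch == ")":
                depth -= 1
                if depth < 0:
                    return f"line {lineno}: unexpected ')'"
    if depth > 0:
        return f"end of input: {depth} unclosed '('"
    return None


# A single missing ')' is enough for a parser to reject the whole script.
print(find_paren_error("(declare-const x Int)\n(assert (> x 0)\n(check-sat)"))
# end of input: 1 unclosed '('
```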

Looking Ahead

While the results are promising, they also underscore the challenges of logic code simulation with LLMs. DCoL is a significant advance, yet there remains ample room for refinement and exploration. Future work will aim both to improve the performance and applicability of DCoL and to extend it beyond the field of logic solvers. Integrating LLMs with knowledge retrieval and storage techniques could enable practical applications that efficiently simulate complex logic programs in real-world scenarios.

Conclusion

This paper is an important step in exploring LLMs' capabilities as logic code simulators. By proposing a new task, introducing a dedicated framework, and systematically evaluating performance across multiple datasets and models, the research opens new directions for applying LLMs in software engineering and beyond. The findings provide a solid foundation for future inquiry and should encourage further work on AI-driven logic simulation methods.