An Assessment of GPT-4V(ision) in Clinical Contexts: Diagnostic Limitations and Implications

The paper "GPT-4V(ision) Unsuitable for Clinical Care and Education: A Clinician-Evaluated Assessment" provides a thorough evaluation of how well OpenAI's GPT-4V(ision) model interprets medical images. It examines how the model performs across a range of diagnostic modalities and whether its outputs meet clinical safety and educational standards.

GPT-4V(ision) is OpenAI's entry into multimodal large language models (LLMs), which accept both text and image inputs. Multimodal learning promises to extend what LLMs can do, particularly in medicine, but this paper's findings caution against assuming that such models are ready for clinical or educational use.

Methodological Overview

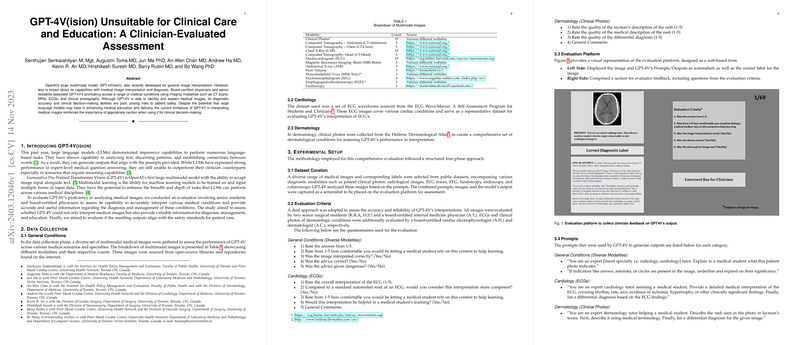

The researchers assembled a diverse set of medical images spanning multiple modalities, including CT scans, MRIs, ECGs, and clinical photographs, drawn from open-source repositories. They used these images to test GPT-4V's diagnostic accuracy and decision-making under several experimental conditions designed to simulate real-world clinical and educational settings. The evaluators were board-certified physicians and senior residents with expertise in the relevant fields.

Key Findings

The paper reports several critical findings:

- Overall Diagnostic Performance: The model frequently misinterpreted medical images. Of the 69 images tested, only 15 were interpreted accurately, while 30 responses contained incorrect or potentially dangerous advice.

- ECG Analysis: GPT-4V's ECG interpretations fell short of standard automated readings. None reached the level of competence expected from existing automated tools, a significant gap in reliability.

- Educational Value: Evaluators rated the model's usefulness as an educational resource as low. Asked how comfortable they would be letting medical students rely on GPT-4V's outputs, clinicians gave an average rating of 1.8 out of 5, with concerns about the safety of its guidance widespread.

- Specialized Domains: For dermatological images, evaluators judged the model's rash descriptions and differential diagnoses inadequate, underscoring how hard it is to produce clinically useful output without domain-specific tuning and contextual understanding.
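To make the headline numbers easier to compare, the short Python sketch that follows converts the reported counts into percentages. It uses only the figures stated in the findings (69 images, 15 accurate, 30 incorrect or potentially dangerous). The helper name `share` is hypothetical, and the remaining images are simply those counted in neither category here, since this summary does not say how they were classified.

```python
def share(count: int, total: int) -> float:
    """Return count/total as a percentage rounded to one decimal place (hypothetical helper)."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * count / total, 1)


# Counts as reported in the summary of the paper's findings.
TOTAL_IMAGES = 69
ACCURATE = 15
INCORRECT_OR_DANGEROUS = 30

accurate_pct = share(ACCURATE, TOTAL_IMAGES)
harmful_pct = share(INCORRECT_OR_DANGEROUS, TOTAL_IMAGES)
# Images not placed in either reported category; their classification is not stated here.
unclassified = TOTAL_IMAGES - ACCURATE - INCORRECT_OR_DANGEROUS

print(f"Accurate: {accurate_pct}%")                            # 21.7%
print(f"Incorrect or potentially dangerous: {harmful_pct}%")   # 43.5%
print(f"In neither category above: {unclassified} images")     # 24 images
```

Put this way, roughly one response in five was accurate, while more than two in five carried incorrect or potentially dangerous advice.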

Implications and Future Prospects

These findings underscore the need for clinical expertise and caution when adopting AI models such as GPT-4V in healthcare practice and education. Despite recent advances in LLMs, the research highlights the importance of rigorous evaluation to expose a model's limitations, especially in domains where errors can directly affect patient safety.

The paper also calls for transparency about training datasets, which could make models more trustworthy and reliable in clinical settings. The authors point to newer approaches, such as models fine-tuned specifically for clinical applications, as a possible path forward, while noting that these too require stringent validation.

The paper further argues that developers of proprietary LLMs should consider adopting open-source principles, with an emphasis on diverse and representative data, to promote ethical AI applications. Collaboration between AI developers and healthcare practitioners could lead to more nuanced models, improving the prospects for clinical adoption while safeguarding patients.

Conclusion

The paper concludes that although GPT-4V is technologically novel as a multimodal model, in its current form it is inadequate for autonomous use in medical diagnostics or as a reliable educational tool. Human experience and oversight remain necessary in medical decision-making, and technological advances should augment, rather than replace, clinical expertise. According to this comprehensive assessment, discerning contextual cues and taking responsibility for decisions are human capacities that existing AI cannot yet replicate.