Probing Evaluation of Large Multimodal Models in Medical VQA

Introduction

The paper "Worse than Random? An Embarrassingly Simple Probing Evaluation of Large Multimodal Models in Medical VQA" critically evaluates the reliability of state-of-the-art Large Multimodal Models (LMMs) in medical Visual Question Answering (Med-VQA). Although these models report high accuracy on existing benchmarks, the paper shows that their performance degrades sharply under more rigorous, adversarial testing.

Methodology

To address the inadequacies in current evaluation methods, the authors introduced the Probing Evaluation for Medical Diagnosis (ProbMed) dataset. ProbMed is designed to rigorously assess LMM performance via two primary principles:

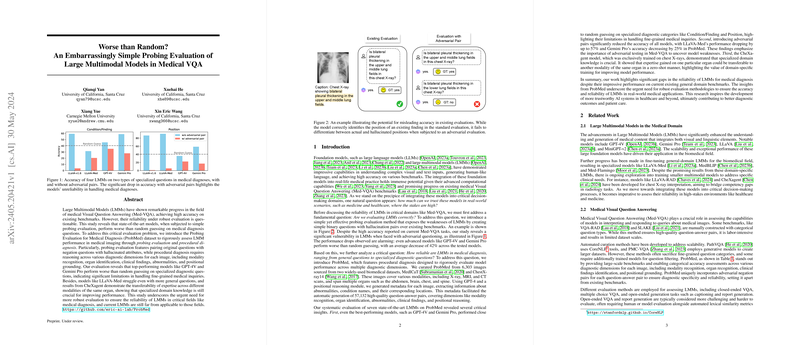

- Probing Evaluation with Adversarial Pairs: Each original question is paired with a negated counterpart that asks about a hallucinated attribute. This tests whether a model can distinguish medical conditions that are actually present from attributes that are not.

- Procedural Diagnosis: Each image is probed along multiple diagnostic dimensions: modality recognition, organ identification, clinical findings, abnormalities, and positional grounding.
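The adversarial-pair principle can be illustrated with a minimal sketch. Everything named here is hypothetical: the `ProbePair` structure, the question template, the `make_pair` helper, and in particular the scoring rule that a pair counts as correct only when both questions are answered correctly. It shows the idea rather than the authors' released code.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

# Hypothetical model interface: (image_id, question) -> "yes" or "no".
AnswerFn = Callable[[str, str], str]


@dataclass(frozen=True)
class ProbePair:
    """One original question plus its adversarial counterpart (illustrative)."""
    image_id: str
    original: str     # asks about a finding that is present; expected "yes"
    adversarial: str  # asks about a hallucinated finding; expected "no"


def make_pair(image_id: str, true_finding: str, hallucinated_finding: str) -> ProbePair:
    # The question template is an assumption made for this example.
    template = "Is there evidence of {} in this image?"
    return ProbePair(
        image_id,
        template.format(true_finding),
        template.format(hallucinated_finding),
    )


def pair_is_correct(pair: ProbePair, answer: AnswerFn) -> bool:
    # Assumed rule: credit is given only if both answers are right.
    return (
        answer(pair.image_id, pair.original) == "yes"
        and answer(pair.image_id, pair.adversarial) == "no"
    )


def paired_accuracy(pairs: Sequence[ProbePair], answer: AnswerFn) -> float:
    if not pairs:
        return 0.0
    return sum(pair_is_correct(p, answer) for p in pairs) / len(pairs)


def always_yes(image_id: str, question: str) -> str:
    """A degenerate 'model' that agrees with every question."""
    return "yes"


pairs = [make_pair("img-001", "pleural effusion", "pneumothorax")]
print(paired_accuracy(pairs, always_yes))  # 0.0
```

Under this assumed rule, a model that answers "yes" to everything scores zero even though it gets every original question right, which is the kind of weakness a benchmark without adversarial pairs cannot expose.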

The dataset was curated from 6,303 biomedical images sourced from the MedICaT and ChestX-ray14 datasets, covering a range of modalities and organs. The authors used GPT-4 and a positional reasoning module to generate metadata for each image, and from that metadata produced 57,132 high-quality question-answer pairs spanning the diagnostic dimensions listed above.

Results

Probing Evaluation Implications

The paper reports a significant drop in accuracy when models such as GPT-4V and Gemini Pro are subjected to adversarial questioning, to the point of performing worse than random guessing on specialized diagnostic questions. For instance, adding adversarial pairs to the VQA-RAD test set reduced the average accuracy of LLaVA-v1.6-7B from 77.11% to 8.47%, highlighting the vulnerability of LMMs to adversarial inputs.

In ProbMed, similar trends were observed:

- GPT-4V and Gemini Pro lost accuracy once adversarial pairs were included, with drops of 10.52% and 25.10%, respectively.

- On more specialized diagnostic questions, even the top-performing models performed close to random guessing. For example, GPT-4V's accuracy in identifying conditions and their positions was alarmingly low, at 35.19% and 22.32%, respectively.
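To put "random guessing" in context, the sketch below computes the chance level when an item counts as correct only if every one of its yes/no questions is answered correctly. Both the all-or-nothing scoring rule and the assumption of independent, uniform guesses are simplifications made for this example; the paper's own baseline may be computed differently.

```python
from fractions import Fraction


def random_guess_baseline(num_binary_questions: int) -> Fraction:
    """Chance that independent, uniform yes/no guesses are all correct."""
    if num_binary_questions < 0:
        raise ValueError("num_binary_questions must be non-negative")
    return Fraction(1, 2) ** num_binary_questions


# One question alone, versus an original question plus its adversarial pair.
print(float(random_guess_baseline(1)))  # 0.5
print(float(random_guess_baseline(2)))  # 0.25
```

Under these assumptions, pairing each question with an adversarial counterpart halves the chance level, so a given accuracy has to be read against a lower baseline than on a single-question benchmark.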

Performance Across Diagnostic Questions

The categorical accuracy of different models in ProbMed indicates a significant gap in practical diagnostic capabilities:

- GPT-4V and Gemini Pro outperform other models in general tasks like modality and organ recognition but struggle with fine-grained medical inquiries.

- CheXagent, trained on chest X-rays, achieved high accuracy in determining abnormalities, indicating the importance of domain-specific training.
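A per-category breakdown like the one described above can be computed with a simple aggregation. This is a sketch only: the `(category, is_correct)` record layout and the `accuracy_by_category` name are assumptions, and the toy records are made-up placeholders, not results from the paper.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_category(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Group (category, is_correct) records and return accuracy per category."""
    totals: Dict[str, int] = defaultdict(int)
    hits: Dict[str, int] = defaultdict(int)
    for category, is_correct in records:
        totals[category] += 1
        hits[category] += int(bool(is_correct))
    return {category: hits[category] / totals[category] for category in totals}


# Placeholder records for illustration only.
toy_records = [
    ("modality", True),
    ("modality", True),
    ("position", True),
    ("position", False),
]
print(accuracy_by_category(toy_records))  # {'modality': 1.0, 'position': 0.5}
```

Reporting accuracy per category rather than as a single aggregate is what makes the gap between general questions (modality, organ) and fine-grained ones (condition, position) visible.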

Error Analysis

An error analysis focusing on specialized question types (Abnormality, Condition/Finding, Position) revealed further vulnerabilities:

- Both GPT-4V and Gemini Pro made significant errors on questions involving hallucinated positions, with Gemini Pro's accuracy dropping to 26.40% on positional questions.

Transferability of Domain Expertise

The paper confirms that specialized domain knowledge enhances performance across different modalities of the same organ. CheXagent, for instance, trained exclusively on chest X-rays, showed improved accuracy on CT and MRI images of the chest, but not on images of other organs.

Conclusion and Future Directions

This paper underscores the inadequacies of current evaluations, demonstrating that LMMs like GPT-4V and Gemini Pro are not yet reliable for critical tasks in medical diagnosis. The ProbMed dataset and its probing evaluation method highlight the need for more trustworthy AI systems in healthcare.

Future work should focus on:

- Incorporating domain-specific expertise into LMM training.

- Continuous performance monitoring and rigorous adversarial testing.

- Expanding the evaluation to include open-ended tasks like medical report generation.

Pursuing these directions will contribute to AI systems that can be reliably integrated into real-world medical practice, ultimately improving diagnostic outcomes and patient care.