Insights from RAGGED: Optimizing Retrieval-Augmented Generation Systems

Introduction to RAGGED Framework

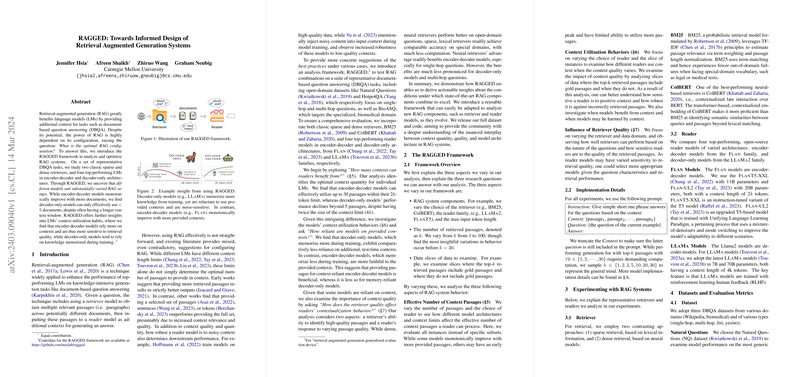

The paper introduces a comprehensive framework, RAGGED, designed for the analysis and optimization of Retrieval-Augmented Generation (RAG) systems. The motivation behind RAGGED stems from the observation that the performance of RAG systems heavily depends on the configuration of its components, notably the retriever and the reader models, as well as the quantity and quality of context documents provided. Through systematic experimentation across a diverse set of document-based question answering (DBQA) tasks, the authors investigate two main types of retrievers (sparse and dense) and evaluate four high-performing reader models from both encoder-decoder and decoder-only architectures. The paper reveals significant insights into optimal RAG setup, the effects of context quantity and quality, and the interaction between reader models and context information.

Key Findings

Optimal Number of Contexts

One of the paper's significant findings is the variation in the optimal number of documents that different reader models can effectively use. Encoder-decoder models exhibit a continuous improvement in performance with the inclusion of up to 30 documents within their token limit. In contrast, decoder-only models' performance peaks with fewer than 5 documents, despite possessing a larger context window. This discrepancy highlights the importance of tailoring the number of context documents to the specific reader model in use.

Model Dependence on Context

The paper dives deep into reader models' reliance on provided contexts versus their pre-trained knowledge. It finds that decoder-only models, characterized by a larger memorization capacity during training, show less dependence on additional contexts provided at test-time. On the other hand, encoder-decoder models demonstrate a stronger reliance on contexts, implying that they are more sensitive to the quality of the retrieval.

Impact of Retrieval Quality

Another critical aspect explored is the effect of retriever quality on RAG systems. Notably, dense retrievers like ColBERT outperform sparse retrievers (BM25) in open-domain tasks. However, this advantage diminishes in specialized domains (e.g., biomedical), where lexical retrievers offer comparable accuracy with significantly less computational expense. The paper interestingly notes that the substantial retrieval performance gaps do not always translate to equivalent disparities in downstream performance, especially in multi-hop questions and specialized domains.

Implications and Future Directions

The insights from the RAGGED framework have far-reaching implications for the design and development of RAG systems:

- Customization of RAG components: The findings underscore the importance of tailoring the number of retrieved documents and the choice of retriever and reader models based on the specific task and domain requirements.

- Model Selection: The paper provides critical guidance on selecting reader models based on their contextualization behavior and dependence on pre-trained knowledge.

- Focus on Specialized Domains: The nuanced performance of retrievers in specialized domains invites further investigation into domain-specific retrieval strategies.

Looking ahead, the RAGGED framework lays the groundwork for future explorations into the intricate dynamics of retrieval-augmented generation systems. It opens avenues for research into novel retriever and reader architectures, multi-domain RAG systems, and fine-grained analyses of context utilization behaviors.

Conclusion

Through the RAGGED framework, this paper contributes significantly to the understanding of retrieval-augmented generation systems. By meticulously analyzing various configurations and their impact on performance across several DBQA tasks, the authors provide a valuable resource for researchers and practitioners aiming to optimize RAG systems for diverse applications.