AutoDev: A Nascent Framework Transforming Software Development via Automated AI Agents

Introduction

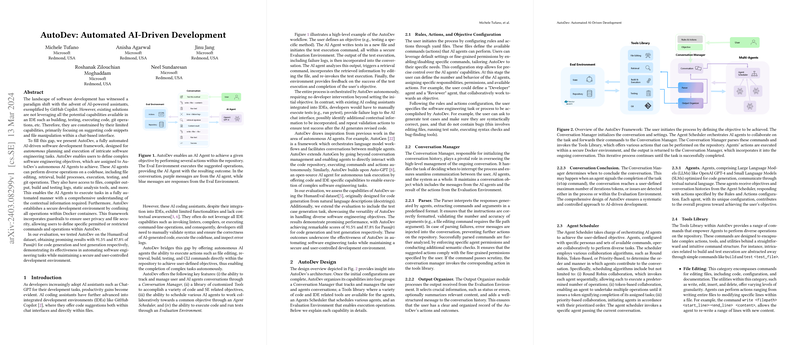

AutoDev is a framework in which autonomous AI agents carry out complex software engineering tasks directly within a repository. It goes well beyond basic code suggestions: agents can also handle files, build, test, and perform git operations. Its architecture combines a Conversation Manager, a Tools Library, an Agent Scheduler, and a secure Evaluation Environment, with the aim of giving AI agents full use of the capabilities of an integrated development environment (IDE).

Key Features of AutoDev

Autonomous Operations

A central feature of AutoDev is autonomous operation: its AI agents can carry out a wide range of actions within a codebase, including:

- File Manipulation: Creating, retrieving, editing, and deleting files.

- Command Execution: Compiling, building, and running the codebase through simplified commands.

- Testing and Validation: Running tests and validating results automatically, so that code quality can be checked without manual intervention.

- Git Operations: Performing git operations in a controlled way, restricted to the permissions the user has predefined.

- Secure Environment: Running all of these operations inside Docker containers, which isolates them and keeps the development workflow secure.
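The permission-gated, containerized execution described above can be sketched as follows. This is a minimal illustration under stated assumptions, not AutoDev's implementation: the command verbs, the git allow-list, the `PermissionDenied` exception, and the `autodev-sandbox:latest` image name are all hypothetical. The code only builds the `docker run` argument list; it does not execute it.

```python
import shlex
from typing import List

# Hypothetical permission settings; AutoDev's real configuration format may differ.
ALLOWED_COMMANDS = {"retrieve", "write", "edit", "delete", "build", "test", "git"}
ALLOWED_GIT_SUBCOMMANDS = {"status", "diff", "add", "commit"}  # "push" deliberately withheld


class PermissionDenied(Exception):
    """Raised when an agent issues a command the user has not allowed."""


def validate(command_line: str) -> List[str]:
    """Tokenize an agent command and reject anything outside the allowed set."""
    tokens = shlex.split(command_line)
    if not tokens:
        raise PermissionDenied("empty command")
    verb = tokens[0]
    if verb not in ALLOWED_COMMANDS:
        raise PermissionDenied(f"command '{verb}' is not permitted")
    if verb == "git":
        sub = tokens[1] if len(tokens) > 1 else "<none>"
        if sub not in ALLOWED_GIT_SUBCOMMANDS:
            raise PermissionDenied(f"git subcommand '{sub}' is not permitted")
    return tokens


def sandboxed_argv(tokens: List[str], repo_dir: str,
                   image: str = "autodev-sandbox:latest") -> List[str]:
    """Build (but do not run) a `docker run` invocation that isolates the command."""
    return [
        "docker", "run", "--rm",          # discard the container afterwards
        "--network", "none",              # no network access inside the sandbox
        "-v", f"{repo_dir}:/workspace",   # mount only the repository
        "-w", "/workspace",               # run from the repository root
        image, *tokens,
    ]


print(sandboxed_argv(validate("git status"), "/tmp/repo"))
```

In a fuller system, tool verbs such as `build` or `test` would first be mapped to concrete scripts before being wrapped in the container call; only `git` is passed through directly in this sketch.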

System Architecture

The architecture of AutoDev integrates four core components:

- The Conversation Manager acts as the conductor: it tracks the conversation between the user and the agents and manages the overall process.

- The Agent Scheduler coordinates the AI agents, assigning tasks according to the project's needs.

- The Tools Library offers a set of commands that agents can invoke, hiding complex actions behind simple interfaces.

- The Evaluation Environment provides a sandbox in which commands and scripts can be executed safely.
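To make the interplay of the four components concrete, here is a minimal sketch of one session loop. The agent interface, the round-robin scheduling policy, the `stop` command, and the step budget are assumptions for illustration, not details from AutoDev; the tools also run in-process here, whereas in AutoDev they would execute inside the Evaluation Environment.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical interfaces: an agent maps the conversation so far to its next command,
# and a tool maps a command argument to a textual result.
Agent = Callable[[List[str]], str]
Tool = Callable[[str], str]


@dataclass
class ConversationManager:
    """Records every message and enforces a step budget (the budget is an assumption)."""
    max_steps: int = 10
    history: List[str] = field(default_factory=list)

    def record(self, speaker: str, message: str) -> None:
        self.history.append(f"{speaker}: {message}")


def run_session(task: str, agents: List[Tuple[str, Agent]], tools: Dict[str, Tool],
                manager: ConversationManager) -> List[str]:
    manager.record("user", task)
    for step in range(manager.max_steps):
        # Agent Scheduler: plain round-robin here; a real scheduler could pick by task.
        name, agent = agents[step % len(agents)]
        command = agent(manager.history)
        manager.record(name, command)
        verb, _, argument = command.partition(" ")
        if verb == "stop":
            break
        # Tools Library lookup; unknown verbs are reported back to the agent.
        tool = tools.get(verb)
        result = tool(argument) if tool else f"unknown command '{verb}'"
        manager.record("env", result)
    return manager.history


# Usage: a scripted "developer" agent with an in-memory file store.
files: Dict[str, str] = {}


def write(argument: str) -> str:
    path, _, content = argument.partition(" ")
    files[path] = content
    return f"wrote {path}"


def run_tests(argument: str) -> str:
    return "tests passed" if argument in files else f"missing {argument}"


script = iter(["write add.py def add(a, b): return a + b", "test add.py", "stop"])


def developer(history: List[str]) -> str:
    return next(script)


log = run_session("Implement add()", [("developer", developer)],
                  {"write": write, "test": run_tests}, ConversationManager())
for line in log:
    print(line)
```

Keeping the whole exchange in one shared history is what lets each agent see the results of earlier commands; the step budget guards against an agent that never issues `stop`.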

Empirical Evaluation

AutoDev was evaluated on code generation and test generation tasks using the HumanEval dataset. It achieved a 91.5% Pass@1 rate for code generation and 87.8% for test generation. These results indicate that AutoDev handles such engineering tasks effectively and point to its potential for making the development process more efficient, while its sandboxed design keeps that process secure.
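Pass@1 is the fraction of problems solved by the first generated attempt. The sketch below uses the unbiased pass@k estimator introduced alongside HumanEval, 1 - C(n - c, k) / C(n, k) for n samples of which c are correct; the source does not state how many samples AutoDev drew per problem. With one sample per problem, pass@1 reduces to the share of problems solved. HumanEval has 164 problems, so the count of 150 successes below is an illustrative value consistent with the reported 91.5%, not a figure taken from the evaluation.

```python
from math import comb
from typing import List, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples drawn, c of them correct."""
    if n - c < k:
        # Every size-k subset contains at least one correct sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: List[Tuple[int, int]], k: int) -> float:
    """Average pass@k over all problems; each entry is (n, c)."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Illustrative only: one sample per HumanEval problem (164 in total).
code_gen = [(1, 1)] * 150 + [(1, 0)] * 14
print(f"{benchmark_pass_at_k(code_gen, 1):.1%}")  # prints 91.5%
```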

Implications and Future Directions

Theoretical and Practical Contributions

AutoDev is a notable step in applying AI to software development. Conceptually, it offers a blueprint for AI-driven systems that can undertake comprehensive engineering tasks. Practically, it could substantially reduce the manual effort involved in software development, boosting productivity and improving code quality.

Speculations on Future Developments

As the field of generative AI continues to evolve, AutoDev's flexible architecture allows for the integration of more advanced AI models and tools. Future enhancements may include refined multi-agent collaboration mechanisms, deeper IDE integrations, and expanded support for a wider range of software engineering tasks. Furthermore, incorporating AutoDev into Continuous Integration/Continuous Deployment (CI/CD) pipelines and Pull Request (PR) review platforms could further streamline development workflows and foster a collaborative environment between developers and AI agents.

Conclusion

AutoDev points toward a style of software development characterized by greater automation, efficiency, and security. By performing a broad spectrum of actions autonomously, it demonstrates the potential of integrating AI into the software development lifecycle and opens the way for further innovation in AI-driven software engineering. The promising results of its empirical evaluation support its efficacy and offer a glimpse of where AI-empowered development environments may go next.