AI-Assisted Unit Test Development in Software Engineering

The paper "Disrupting Test Development with AI Assistants: Building the Base of the Test Pyramid with Three AI Coding Assistants" critically examines the role of AI-assisted coding tools in unit test generation. Through the lens of the Test Pyramid framework, the authors, Vijay Joshi and Iver Band, provide an empirical assessment of three popular AI coding assistants: GitHub Copilot, ChatGPT, and Tabnine. The evaluation compares the quality and coverage of AI-generated unit tests with those of the manually written tests that accompany selected open-source modules.

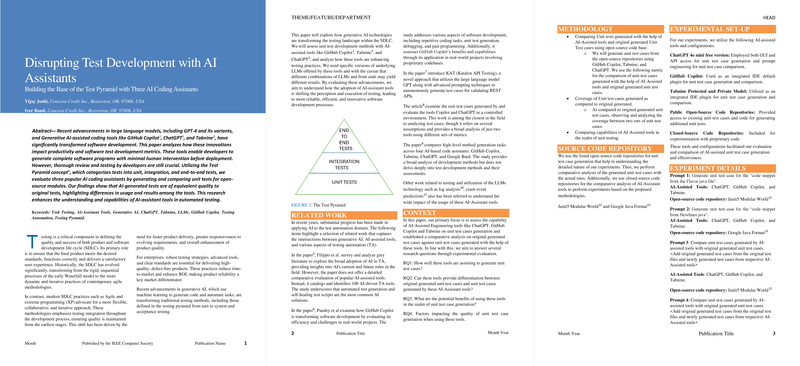

The paper situates its work in the evolving Software Development Life Cycle (SDLC), tracing the shift from traditional methods to agile and extreme programming practices that integrate testing throughout development. It argues that a thorough testing strategy is increasingly necessary and has become a differentiator in product reliability and quality.

Methodology

The authors ran a series of experiments in which GitHub Copilot, ChatGPT, and Tabnine generated unit tests for specific code snippets. The snippets came from open-source repositories such as JUnit 5 and Google Java Format, so the generated tests could be compared against each project's existing, manually written tests. The comparison covered several dimensions, including test coverage and functional equivalence, to gauge how effective the tools are on real-world code.

Results

The experiments show that the AI-generated tests were functionally equivalent to the original tests and, in some scenarios, achieved higher coverage. The analysis suggests that AI assistants can speed up unit test creation without sacrificing quality: even for complex code, the tools produced tests with at least 85% coverage. The paper also reports notable differences between the AI-generated and original tests, both in the testing framework versions they target and in the range of methods they cover.
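To make the idea of comparing "the range of covered methods" concrete, the following Java sketch computes method coverage for two test suites against the same class under test and lists the methods that only one suite reaches. The class `CoverageComparison`, the method names, and the suite contents are hypothetical placeholders, not data from the paper. In practice a coverage tool such as JaCoCo measures coverage by instrumenting bytecode, and line or branch coverage is usually reported rather than a hand-built list of methods.

```java
import java.util.Set;
import java.util.TreeSet;

// Hypothetical sketch: compares which methods of one class under test are
// exercised by an original suite and by an AI-generated suite. The method
// names are placeholders; real coverage data would come from a tool such as
// JaCoCo rather than from hand-written sets.
final class CoverageComparison {

    // Percentage of the methods in `all` that also appear in `covered`.
    static double percent(Set<String> all, Set<String> covered) {
        if (all.isEmpty()) {
            return 100.0; // an empty class has nothing left to cover
        }
        long hit = all.stream().filter(covered::contains).count();
        return 100.0 * hit / all.size();
    }

    // Methods covered by suite `a` but not by suite `b`, sorted for stable output.
    static Set<String> onlyIn(Set<String> a, Set<String> b) {
        Set<String> diff = new TreeSet<>(a);
        diff.removeAll(b);
        return diff;
    }

    public static void main(String[] args) {
        // Hypothetical class under test with four public methods.
        Set<String> methods = Set.of("format", "parse", "validate", "reset");
        Set<String> originalSuite = Set.of("format", "parse");
        Set<String> generatedSuite = Set.of("format", "parse", "validate");

        System.out.printf("original suite:  %.1f%% of methods%n", percent(methods, originalSuite));
        System.out.printf("generated suite: %.1f%% of methods%n", percent(methods, generatedSuite));
        System.out.println("covered only by generated suite: " + onlyIn(generatedSuite, originalSuite));
    }
}
```

A set difference in both directions is what reveals whether a generated suite adds coverage or merely re-tests what the original suite already reaches.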

Discussion

A prominent finding is the importance of effective prompt engineering when using AI assistants. The relevance of the generated unit tests depended heavily on how precise the prompts were. The tools perform well when asked to test a specifically highlighted section of code, but intricate scenarios require carefully worded prompts before the tools produce accurate tests.
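One way to act on this finding is to make a prompt explicit about the framework version, the exact method under test, and the cases to cover. The Java sketch below assembles such a prompt as plain text. The class `TestPrompt`, its `build` method, the wording, and the `clamp` example are all hypothetical; they are not the prompts the authors used.

```java
import java.util.List;

// Hypothetical sketch: assembles a unit-test generation prompt that pins the
// framework version and names the exact method under test. The wording and
// the clamp example are illustrative, not the prompts used in the paper.
final class TestPrompt {

    static String build(String framework, String methodSignature,
                        String sourceCode, List<String> cases) {
        StringBuilder sb = new StringBuilder();
        sb.append("Write unit tests using ").append(framework).append(".\n");
        sb.append("Do not use APIs from other framework versions.\n");
        sb.append("Method under test: ").append(methodSignature).append("\n");
        sb.append("Cover these cases:\n");
        for (String c : cases) {
            sb.append("- ").append(c).append("\n"); // one bullet per required case
        }
        sb.append("Source:\n").append(sourceCode).append("\n");
        return sb.toString();
    }

    public static void main(String[] args) {
        String prompt = build(
                "JUnit 5 (org.junit.jupiter.api)",
                "static int clamp(int value, int min, int max)",
                "static int clamp(int value, int min, int max) {\n"
                        + "    return Math.max(min, Math.min(max, value));\n"
                        + "}",
                List.of("value below min", "value above max", "value equal to a bound"));
        System.out.println(prompt);
    }
}
```

Naming the framework explicitly also addresses the framework-version differences the paper reports between generated and original tests.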

The evaluated tools give developers a more efficient way to reach extensive code coverage. Developers nonetheless remain responsible for reviewing and refining AI-generated tests to make sure they apply to complex, real-world projects. AI therefore acts as an assistant rather than a replacement: it can substantially reduce the marginal cost of additional tests, but final validation still requires human expertise.
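Some mechanical parts of that review can be automated before a human looks at the tests. As a hypothetical example, assuming a project standardizes on JUnit 5 (Jupiter), the Java sketch below flags JUnit 4 imports in a generated test file. `FrameworkCheck` and `junit4Imports` are illustrative names. The check is a simple regular expression over import lines and says nothing about whether the assertions are meaningful, which still needs a reviewer.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical pre-review check, assuming the project standardizes on JUnit 5.
// It flags JUnit 4 imports (org.junit.* outside the org.junit.jupiter and
// org.junit.platform packages) in a generated test file. It does not judge
// whether the tests themselves are correct; that still needs a human reviewer.
final class FrameworkCheck {

    // Matches "import org.junit.X;" and "import static org.junit.X;" lines,
    // excluding the JUnit 5 packages via a negative lookahead.
    private static final Pattern JUNIT4_IMPORT = Pattern.compile(
            "^\\s*import\\s+(?:static\\s+)?(org\\.junit\\.(?!jupiter\\.|platform\\.)[\\w.*]+)\\s*;",
            Pattern.MULTILINE);

    // Returns the fully qualified names of all JUnit 4 imports, in source order.
    static List<String> junit4Imports(String testSource) {
        List<String> found = new ArrayList<>();
        Matcher m = JUNIT4_IMPORT.matcher(testSource);
        while (m.find()) {
            found.add(m.group(1));
        }
        return found;
    }

    public static void main(String[] args) {
        String generated = String.join("\n",
                "import org.junit.Test;",
                "import static org.junit.Assert.assertEquals;",
                "import org.junit.jupiter.api.Assertions;",
                "class ClampTest {}");
        List<String> flagged = junit4Imports(generated);
        System.out.println(flagged.isEmpty()
                ? "no JUnit 4 imports found"
                : "needs review, JUnit 4 imports: " + flagged);
    }
}
```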

Conclusion and Implications

The study indicates that AI-assisted tools can improve unit test development, with each tool offering distinct strengths. For instance, GitHub Copilot integrates well with the open-source community, while Tabnine offers robust security and compliance features that matter for projects with stricter privacy requirements. The authors suggest extending AI's role beyond unit tests to the higher layers of the Test Pyramid, such as integration and end-to-end testing, using a variety of LLMs.

This work contributes to the ongoing discussion of generative AI in software engineering with a structured evaluation of existing AI coding assistants. Its results outline the current capabilities and limitations of these tools and point future research toward more comprehensive, automated software testing strategies.