Leveraging LLMs for Enhanced Retrieval-Augmented Task Performance with Arl2

Introduction to Arl2

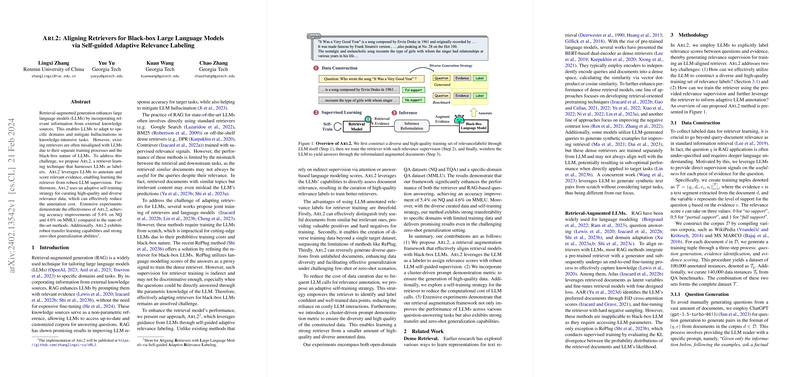

Arl2 is a novel approach to retrieval-augmented generation (RAG) that addresses a central challenge: aligning retrievers with LLMs. Retrievers and LLMs are typically trained separately, and the resulting misalignment hinders the effective use of external knowledge sources. Arl2 closes this gap by using LLMs as annotators that generate relevance labels, so the retriever is trained under robust LLM supervision. It also adds an adaptive self-training strategy for curating high-quality, diverse relevance data. The goal is to reduce annotation cost while improving LLM performance across knowledge-intensive tasks.

Methodology Overview

Arl2’s distinguishing feature is self-guided adaptive relevance labeling. Unlike indirect supervision methods, it has the LLM assess document relevance directly. The core aspects of Arl2’s methodology are:

- Data Construction: Arl2 uses the LLM to create training tuples of diverse questions and evidence, each annotated with a relevance score. These tuples train the retriever to distinguish truly relevant documents from a pool of similar but irrelevant ones.

- Retrieval Model Learning: Arl2 fine-tunes the retriever with a learning objective that combines pairwise and list-wise losses. The adaptive relevance labeling strategy makes efficient use of LLM-generated annotations, reducing dependence on costly LLM interactions.

- Inference Process: At inference time, Arl2 focuses on how supporting evidence is presented to the LLM. Retrieved documents are reordered by relevance, and an ensemble method computes the final answer score, which makes the LLM’s use of external knowledge more robust.
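
The retriever objective and the inference step described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the Arl2 implementation: the text does not give the exact loss formulas or the ensemble rule, so the logistic pairwise loss, the cross-entropy list-wise loss, the mixing weight `alpha`, the descending-score reordering, and the softmax weighting of per-document answer probabilities are all illustrative choices, and every function name is hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def log_softmax(scores):
    """Log of the softmax, computed without taking log of tiny values."""
    peak = max(scores)
    log_total = math.log(sum(math.exp(s - peak) for s in scores))
    return [s - peak - log_total for s in scores]


def pairwise_loss(pos_score, neg_score):
    """Logistic pairwise loss (assumed form): small when pos outscores neg."""
    return math.log1p(math.exp(neg_score - pos_score))


def listwise_loss(retriever_scores, llm_relevance):
    """Cross-entropy from the LLM-label distribution to the retriever's (assumed form)."""
    target = softmax(llm_relevance)
    log_predicted = log_softmax(retriever_scores)
    return -sum(t * lp for t, lp in zip(target, log_predicted))


def combined_loss(retriever_scores, llm_relevance, alpha=0.5):
    """Weighted sum of both terms; alpha is a hypothetical mixing weight."""
    # Treat the document the LLM rated highest as the positive, the rest as negatives.
    pos = max(range(len(llm_relevance)), key=lambda i: llm_relevance[i])
    pairs = [
        pairwise_loss(retriever_scores[pos], retriever_scores[j])
        for j in range(len(retriever_scores))
        if j != pos
    ]
    pair_term = sum(pairs) / len(pairs) if pairs else 0.0
    list_term = listwise_loss(retriever_scores, llm_relevance)
    return alpha * pair_term + (1.0 - alpha) * list_term


def rerank(docs, scores):
    """Reorder documents by relevance score, highest first (assumed ordering)."""
    order = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
    return [docs[i] for i in order], [scores[i] for i in order]


def ensemble_answer_scores(retrieval_scores, answer_probs):
    """Combine per-document answer probabilities, weighted by softmaxed retrieval scores.

    answer_probs[i] maps each candidate answer to its probability given document i.
    """
    weights = softmax(retrieval_scores)
    final = {}
    for weight, probs in zip(weights, answer_probs):
        for answer, p in probs.items():
            final[answer] = final.get(answer, 0.0) + weight * p
    return final
```

With LLM relevance labels `[3.0, 1.0, 0.0]`, `combined_loss([3.0, 1.0, 0.0], [3.0, 1.0, 0.0])` is lower than `combined_loss([0.0, 1.0, 3.0], [3.0, 1.0, 0.0])`, which is the behavior the objective is meant to reward: the retriever is penalized when its ranking disagrees with the LLM's judgment. In a real training setup these functions would be written against an autodiff framework so the retriever's parameters can be updated from the loss.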

Experimental Insights

Empirical evaluation across several datasets, including open-domain question answering (QA) tasks such as Natural Questions (NQ) and domain-specific QA tasks such as Massive Multitask Language Understanding (MMLU), shows Arl2 outperforming existing methods. Key findings include:

- Arl2 improves accuracy by 5.4% on NQ and 4.6% on MMLU over state-of-the-art methods.

- The framework shows strong transfer learning capabilities and delivers promising results in zero-shot generalization settings.

- Arl2 adapts to specific domains through its self-training strategy and its generation of diverse questions, which further supports its usefulness for improving LLM performance.

Theoretical and Practical Implications

Arl2’s use of LLMs for relevance labeling and retriever training has both theoretical and practical implications. Theoretically, it offers a perspective in which the retriever is aligned with what the LLM itself judges relevant, rather than being trained in isolation. Practically, it provides a cost-effective way to improve LLM performance across a range of tasks, with insights that may inform future retrieval-augmented systems.

Future Directions

While Arl2 is a significant advance in retrieval-augmented generation, several avenues remain open. Potential areas for future research include making relevance data curation more efficient, expanding the diversity of the training data, and testing the framework in more specialized domains. Work in these directions could deepen understanding of how retrievers and LLMs interact and lead to more effective AI systems.

Conclusion

Arl2 is a significant step toward resolving the misalignment between retrievers and LLMs in retrieval-augmented generation. By using LLMs for relevance labeling together with an adaptive self-training strategy, it sets the stage for further work on aligning external knowledge sources with the evolving capabilities of LLMs.