Restoring Safety in Fine-tuned LLMs with RESTA

Introduction

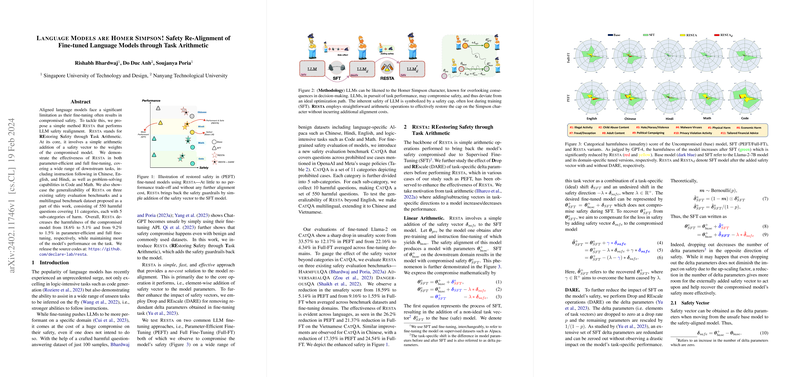

The widespread adoption of LLMs has raised concerns about their safety, particularly when models are fine-tuned for specific tasks. Fine-tuning improves performance on the target domain, but it often erodes safety alignment as a side effect, leaving models more willing to produce harmful, biased, or unsafe content. To address this, a recent paper introduces RESTA (REstoring Safety through Task Arithmetic), a method for re-aligning fine-tuned models toward safety without sacrificing their task performance. RESTA rests on a simple principle: it adjusts the model's weights by adding a safety vector, and this substantially reduces harmful outputs across multiple languages and domains.

RESTA: Restoring Safety through Task Arithmetic

The core idea behind RESTA is a direct intervention in the parameter space of fine-tuned models: a pre-computed safety vector is simply added to the weights of a model whose safety has been compromised by fine-tuning. The safety vector is the difference between the base model's weights and those of its safety-compromised counterpart, so adding it steers the fine-tuned model back toward a safer point in weight space. RESTA additionally applies Drop and REscale (DARE), which randomly drops a fraction of the parameter changes introduced by fine-tuning and rescales the remaining ones, reducing the influence of redundant parameters and further improving RESTA's effectiveness.
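The weight arithmetic described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: each model is represented as a plain dictionary mapping parameter names to flat lists of floats, DARE is applied to the fine-tuning delta (fine-tuned weights minus base weights), and the safety vector is added afterwards. The function names `resta` and `dare`, and the optional scale `gamma` (1.0 corresponds to the plain addition described above), are hypothetical.

```python
import random
from typing import Dict, List

# Assumption: a model is a dict of parameter name -> flat list of floats.
Weights = Dict[str, List[float]]


def subtract(a: Weights, b: Weights) -> Weights:
    """Element-wise a - b for every parameter (assumes identical keys and shapes)."""
    return {name: [x - y for x, y in zip(a[name], b[name])] for name in a}


def add(a: Weights, b: Weights, scale: float = 1.0) -> Weights:
    """Element-wise a + scale * b for every parameter."""
    return {name: [x + scale * y for x, y in zip(a[name], b[name])] for name in a}


def dare(delta: Weights, drop_rate: float, rng: random.Random) -> Weights:
    """Drop And REscale: zero each delta entry with probability drop_rate and
    rescale survivors by 1 / (1 - drop_rate), preserving the delta in expectation."""
    if not 0.0 <= drop_rate < 1.0:
        raise ValueError("drop_rate must be in [0, 1)")
    keep_scale = 1.0 / (1.0 - drop_rate)
    return {
        name: [0.0 if rng.random() < drop_rate else d * keep_scale for d in values]
        for name, values in delta.items()
    }


def resta(base: Weights, fine_tuned: Weights, unaligned: Weights,
          drop_rate: float = 0.0, gamma: float = 1.0, seed: int = 0) -> Weights:
    """Hypothetical sketch of RESTA-style re-alignment.

    base:       the safety-aligned base model
    fine_tuned: base after task fine-tuning (safety possibly compromised)
    unaligned:  a safety-compromised counterpart of base
    gamma:      assumed scale on the safety vector (1.0 = plain addition)
    """
    rng = random.Random(seed)
    # Safety vector: base weights minus those of the safety-compromised counterpart.
    safety_vector = subtract(base, unaligned)
    # Fine-tuning delta, optionally sparsified with DARE to prune redundant updates.
    sft_delta = dare(subtract(fine_tuned, base), drop_rate, rng)
    # Rebuild the fine-tuned model from base + delta, then add the safety vector.
    return add(add(base, sft_delta), safety_vector, scale=gamma)


restored = resta(base={"w": [1.0, 2.0]},
                 fine_tuned={"w": [1.5, 2.5]},
                 unaligned={"w": [0.75, 2.25]})
print(restored)  # {'w': [1.75, 2.25]}
```

With `drop_rate=0.0` the sketch reduces to plain task arithmetic: fine-tuned weights plus the safety vector. Raising `drop_rate` sparsifies the fine-tuning delta before the safety vector is added, which is the role the section above attributes to DARE.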

Evaluation and Results

RESTA was evaluated across a range of tasks and languages, including instruction following in Chinese, English, and Hindi, as well as reasoning-heavy tasks such as coding and math. To support a comprehensive safety evaluation, the paper also introduces a new multilingual benchmark dataset covering a range of harmful categories. Applying RESTA reduced the rate of harmful outputs from 18.6% to 5.1% under parameter-efficient fine-tuning and from 9.2% to 1.5% under full fine-tuning. These gains came with minimal loss in task performance, making RESTA a viable option for restoring LLM safety after fine-tuning.
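To put the reported harmfulness rates in relative terms, the short calculation below divides each drop by the pre-RESTA rate. The relative figures it prints are derived arithmetic on the numbers quoted above, not values reported in the paper, and `relative_reduction` is a hypothetical helper name.

```python
def relative_reduction(before: float, after: float) -> float:
    """Fraction of harmful outputs removed, relative to the pre-RESTA rate."""
    return (before - after) / before


# Harmfulness rates (%) quoted above: (setting, before RESTA, after RESTA).
reported = [
    ("parameter-efficient", 18.6, 5.1),
    ("full fine-tuning", 9.2, 1.5),
]

for setting, before, after in reported:
    share = relative_reduction(before, after)
    print(f"{setting}: {before}% -> {after}% ({share:.1%} relative reduction)")
```

Run as written, this reports roughly a 72.6% relative reduction in the parameter-efficient setting and 83.7% under full fine-tuning, so the full fine-tuning case shows the larger proportional improvement even though its absolute drop is smaller.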

Implications and Future Directions

RESTA is a significant step toward addressing the safety risks inherent in fine-tuning LLMs. By showing that models can be recalibrated toward safety without sacrificing task performance, it opens new avenues for the responsible use and deployment of LLMs across varied applications. Open questions include how well the approach scales to larger models and how readily it adapts to evolving definitions of content safety. Further research into how safety vectors behave across different model architectures, and into whether the safety gains from RESTA hold up over the longer term, could lead to more robust and broadly applicable safety-alignment methods for LLMs.

Conclusion

RESTA stands out as a promising solution to the pressing problem of safety degradation in fine-tuned LLMs. By realigning models with their safety guardrails in a simple, efficient way, it allows the benefits of fine-tuning to be kept without undermining the ethical and societal norms that govern AI applications. As the field continues to evolve, the principles behind RESTA could inform broader efforts to make LLMs safe and reliable wherever they are deployed.