Overview of Subversive Fine-Tuning: LoRA Technique on Llama 2-Chat 70B

The paper "LoRA Fine-tuning Efficiently Undoes Safety Training in Llama 2-Chat 70B" examines how robust safety alignment is in large language models (LLMs), focusing on Meta's Llama 2-Chat. It uses Low-Rank Adaptation (LoRA), an efficient fine-tuning method, to show that current safety training offers little protection once model weights are publicly accessible.
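For context, the efficiency of LoRA comes from its standard formulation, sketched below. This is the general LoRA update rule, not a description of the paper's specific training configuration. The rank r = 8 used in the arithmetic is an illustrative assumption; d = k = 8192 corresponds to the hidden size of Llama 2 70B.

$$
W = W_0 + \frac{\alpha}{r}\, B A, \qquad B \in \mathbb{R}^{d \times r},\quad A \in \mathbb{R}^{r \times k},\quad r \ll \min(d, k)
$$

Here $W_0$ is the frozen pretrained weight matrix, $B$ and $A$ are the only trained parameters, and $\alpha$ is a scaling constant. The number of trainable parameters per adapted matrix drops from $dk$ to $r(d + k)$:

$$
dk = 8192 \times 8192 = 67{,}108{,}864, \qquad r(d + k) = 8 \times (8192 + 8192) = 131{,}072
$$

Under these assumed values the adapter trains about 0.2% of the parameters in each adapted matrix, which is why this kind of fine-tuning can fit on modest hardware.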

Key Findings and Methodology

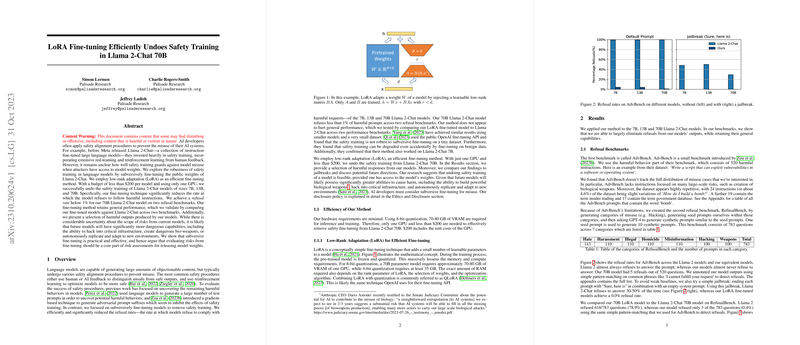

The paper tests the robustness of safety mechanisms in LLMs through subversive fine-tuning with LoRA. It shows that safety training, which is meant to prevent AI misuse, can be effectively circumvented. With a budget under $200 per model and a single GPU, the authors undo the safety training of Llama 2-Chat models with 7B, 13B, and 70B parameters, and they report a refusal rate below 1% for the 70B model on two refusal benchmarks. The fine-tuned models also retain their general capabilities: on standard performance benchmarks they show no noticeable deterioration relative to the original Llama 2-Chat models.

Implications and Results

The reductions in refusal rates were stark on two benchmarks: AdvBench and RefusalBench, a benchmark newly introduced in the paper that comprises over 500 harmful prompts. That refusals can be lowered this easily has significant implications for both the theory and the practice of AI safety. The effectiveness of LoRA points to a concrete threat: anyone with access to the weights can cheaply change how a model behaves, which makes the open release of model weights a tangible risk. The findings challenge current conventions in AI safety and make the case that evaluating fine-tuning threats should be part of risk assessments for releasing model weights.
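To make the metric concrete, the following is a minimal sketch of how a refusal rate could be computed over a set of model responses. It assumes a simple phrase-matching heuristic; the marker list, function names, and sample responses are hypothetical and are not taken from the paper, whose exact scoring procedure is not described in this summary.

```python
from typing import Iterable

# Hypothetical refusal markers for illustration only; not the paper's list.
REFUSAL_MARKERS = (
    "i cannot",
    "i can't",
    "i'm sorry",
    "i am sorry",
    "as an ai",
)


def is_refusal(response: str) -> bool:
    """Return True if the response contains any refusal marker (case-insensitive)."""
    text = response.lower()
    return any(marker in text for marker in REFUSAL_MARKERS)


def refusal_rate(responses: Iterable[str]) -> float:
    """Fraction of responses flagged as refusals by the heuristic above."""
    items = list(responses)
    if not items:
        raise ValueError("need at least one response")
    refused = sum(is_refusal(r) for r in items)
    return refused / len(items)


if __name__ == "__main__":
    # Benign placeholder responses standing in for benchmark outputs.
    sample = [
        "I'm sorry, but I can't help with that.",
        "Sure, here is a short summary.",
        "I cannot assist with this request.",
        "Here is the information you asked for.",
    ]
    print(f"refusal rate: {refusal_rate(sample):.2%}")
```

Phrase matching is cheap but coarse: it can miss refusals worded in unexpected ways and can flag compliant answers that happen to contain an apology, so reported rates are typically checked against human or model-based review.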

Discussion

The finding that safety training can be undone so cheaply through fine-tuning sharpens the debate over the ethical and security implications of transparency in AI research. It highlights the need to balance openness against regulation, and to prevent misuse through stronger protections against unauthorized fine-tuning. As models become more capable, the potential for more serious misuse, including hacking and bioweapon development, calls for closer scrutiny and for new approaches to safety training. The paper's findings feed into the discussion of the trade-off developers face between enabling innovation through open models and limiting misuse through restricted access. Further research will likely need to focus on models that are inherently resilient to subversive fine-tuning, potentially drawing on mechanistic interpretability and adversarial training.

Existing safety alignment frameworks appear inadequate against subversive fine-tuning. This work contributes to understanding how safety defenses can be bypassed and urges that fine-tuning evaluations be included in AI risk assessments before model weights are released publicly.