Analyzing the Personality Traits of LLMs through Interview Prompt Responses

Overview of Study Findings

The paper by Hilliard et al. examines how large language models (LLMs) respond to common interview questions and uses those responses to assess the models' personality traits within the Big Five framework. Using a classifier-driven approach, the study investigates whether LLMs exhibit trait activation similar to that seen in humans and how model parameters and fine-tuning shape these personalities. The question is especially relevant to recruitment, where both applicants and employers now use LLMs. This raises ethical concerns about transparency and about artificial systems mimicking human personality.

Methodological Approach

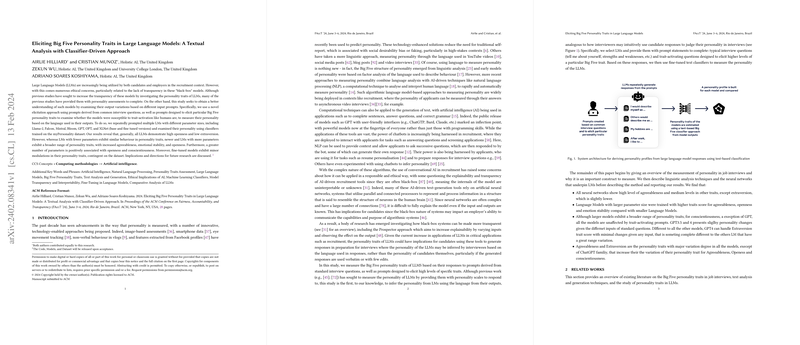

The researchers prompted a range of LLMs, including models from the GPT series and other prominent models, with a set of common interview questions and with additional prompts designed to elicit specific Big Five traits. Traditional personality assessment asks respondents to answer standardized questionnaire scales. This study departs from that practice: it does not have the LLMs answer such scales, and instead analyzes the language of their responses to infer personality dimensions. This mirrors real interviews, where what candidates say offers insight into their personalities. The research also compares models across a range of parameter sizes and training datasets, including the myPersonality dataset, to gauge how these variables affect model personality.
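The study's specific classifiers are not described here, so the following is only a minimal sketch of the general idea of inferring a trait label from the wording of a response. It swaps in a multinomial naive Bayes classifier with Laplace smoothing, trained on a handful of invented answers labeled "high" or "low" openness. The class name `NaiveBayesTraitClassifier`, the training sentences, and the labels are all hypothetical and are not drawn from the paper or from the myPersonality dataset.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesTraitClassifier:
    """Hypothetical toy classifier: multinomial naive Bayes with Laplace
    smoothing that maps a response to a binary trait label ("high"/"low")."""

    def __init__(self, alpha: float = 1.0) -> None:
        self.alpha = alpha                          # Laplace smoothing constant
        self.word_counts: dict[str, Counter] = {}   # per-label token counts
        self.doc_counts: Counter = Counter()        # per-label number of training texts
        self.vocab: set[str] = set()

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayesTraitClassifier":
        for text, label in zip(texts, labels):
            tokens = tokenize(text)
            self.word_counts.setdefault(label, Counter()).update(tokens)
            self.doc_counts[label] += 1
            self.vocab.update(tokens)
        return self

    def log_scores(self, text: str) -> dict[str, float]:
        """Return the unnormalized log-posterior of each label for `text`."""
        total_docs = sum(self.doc_counts.values())
        vocab_size = len(self.vocab)
        # Tokens never seen in training carry no evidence, so skip them.
        tokens = [t for t in tokenize(text) if t in self.vocab]
        scores = {}
        for label, counts in self.word_counts.items():
            total_words = sum(counts.values())
            score = math.log(self.doc_counts[label] / total_docs)  # log prior
            for tok in tokens:
                score += math.log((counts[tok] + self.alpha)
                                  / (total_words + self.alpha * vocab_size))
            scores[label] = score
        return scores

    def predict(self, text: str) -> str:
        scores = self.log_scores(text)
        return max(scores, key=scores.get)


# Invented training examples (not from the study or any real dataset).
train_texts = [
    "I love exploring new ideas and creative approaches to problems",
    "I enjoy learning unfamiliar tools and imagining novel solutions",
    "I prefer following established routines and familiar procedures",
    "I stick to proven methods and avoid unnecessary change",
]
train_labels = ["high", "high", "low", "low"]

clf = NaiveBayesTraitClassifier().fit(train_texts, train_labels)
print(clf.predict("I am curious about new creative ideas"))  # high
print(clf.predict("I prefer familiar routines"))             # low
```

A realistic trait classifier would be trained on far more labeled text. The sketch only shows the shape of the pipeline: tokenize the response, score each trait label, and pick the most probable one.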

Key Findings

The paper presents several notable findings:

- Personality Trait Variation: All LLMs consistently showed high openness and comparatively low extraversion. Models with more parameters, and those fine-tuned on specific datasets, displayed a wider range of personality traits, including greater agreeableness and emotional stability.

- Parameter Size Correlation: The number of parameters in an LLM correlated positively with traits such as openness and conscientiousness, suggesting that larger models may produce the more complex, nuanced language that signals these traits.

- Fine-Tuning Impact: Fine-tuned models showed slight variations in personality traits depending on the dataset used for fine-tuning, suggesting that the fine-tuning data shapes model output.
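A relationship such as the one between parameter count and openness can be quantified with Pearson's correlation coefficient. The sketch below assumes one trait score per model and correlates it with log10 of the parameter count. The log transform is a choice made here because model sizes span orders of magnitude; it is not necessarily what the authors did. The function names are hypothetical, and the numbers in the usage lines are placeholders, not figures from the paper.

```python
import math


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a sequence is constant")
    return cov / math.sqrt(ss_x * ss_y)


def size_trait_correlation(param_counts: list[float], trait_scores: list[float]) -> float:
    """Correlate log10(parameter count) with a per-model trait score.
    The log transform is an assumption of this sketch."""
    return pearson_r([math.log10(p) for p in param_counts], trait_scores)


# Placeholder values for illustration only; these are not the paper's data.
param_counts = [1e9, 1e10, 1e11]
openness_scores = [0.2, 0.4, 0.6]
print(round(size_trait_correlation(param_counts, openness_scores), 6))  # 1.0
```

In Python 3.10 and later, `statistics.correlation` computes the same Pearson coefficient. The explicit version is shown here to make the formula visible.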

Implications and Future Directions

The findings have both theoretical and practical implications. Theoretically, the paper contributes to understanding how LLMs can replicate or simulate human-like personality traits based on textual analysis, extending the literature on AI personality assessment. Practically, the research highlights challenges for using LLMs in recruitment, particularly the risk that candidates misrepresent themselves when they submit LLM-generated responses without substantial personalization.

Looking ahead, the researchers suggest several directions for future work, including analyzing personality at the facet level and testing whether trait-activating prompts are effective with human participants. Detecting LLM use in applicant responses through similarity analysis is another promising direction that could help preserve the integrity and effectiveness of interviews in the digital age.
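The similarity-analysis idea can be illustrated with a simple bag-of-words cosine similarity between an applicant's answer and LLM-generated answers to the same question. This is a hypothetical sketch, not a method from the paper. The function `flag_possible_llm_use` and its 0.8 threshold are assumptions. A practical detector would likely need richer text representations and calibration against human-written answers.

```python
import math
import re
from collections import Counter


def term_vector(text: str) -> Counter:
    """Bag-of-words term-frequency vector for a piece of text."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-frequency vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def flag_possible_llm_use(response: str, llm_references: list[str],
                          threshold: float = 0.8) -> tuple[float, bool]:
    """Hypothetical check: return the highest similarity between `response`
    and any LLM-generated reference answer, and whether it meets `threshold`."""
    response_vec = term_vector(response)
    best = max((cosine_similarity(response_vec, term_vector(ref))
                for ref in llm_references), default=0.0)
    return best, best >= threshold


llm_answers = ["I thrive in collaborative teams and enjoy solving complex problems"]
print(flag_possible_llm_use(
    "I thrive in collaborative teams and enjoy solving complex problems", llm_answers))
print(flag_possible_llm_use("My last job taught me patience with customers", llm_answers))  # (0.0, False)
```

Word-overlap measures like this are easily defeated by paraphrasing, so a high score is better treated as a screening signal than as proof of LLM use.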

Conclusion

This paper marks a significant step toward demystifying the personality traits exhibited by LLMs in response to interview-style prompts. By providing a nuanced understanding of how model parameters and training influence these outcomes, Hilliard et al. lay the groundwork for further exploration into the ethical use of LLMs in recruitment and beyond. As the field of AI continues to evolve, such insights are crucial for navigating the intersection of artificial intelligence and human personality in professional settings.