Decoding-time Alignment for LLMs: A Novel Framework for Improved Content Generation

Introduction to Decoding-time Alignment

Auto-regressive LLMs such as GPT and PaLM have shown exceptional capabilities in generating human-like text across a wide range of natural language processing tasks. As their applications broaden, aligning their outputs with specific user-defined objectives becomes increasingly important. Traditional methods build alignment in during the training phase, using techniques such as Reinforcement Learning from Human Feedback (RLHF). These methods have limitations, however: alignment objectives cannot be customized dynamically once training is complete, and the objectives fixed at training time may not match what end users actually intend.

The DeAL Framework

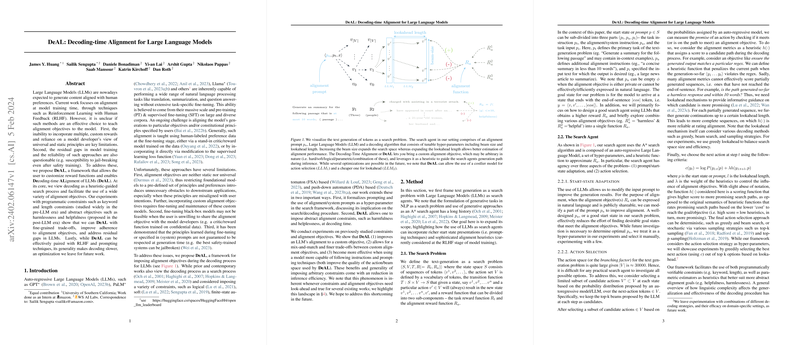

DeAL, a framework for Decoding-time ALignment of LLMs, offers a different approach to aligning LLM outputs with user-defined objectives. DeAL treats decoding as a heuristic-guided search process, so a wide variety of alignment objectives can be applied dynamically at decoding time. The framework supports fine-grained control over the alignment process and helps address the residual alignment gaps inherent in LLMs. Experiments with DeAL show that it handles both programmatically verifiable constraints and more abstract alignment objectives effectively, suggesting a substantial improvement in output alignment without compromising the underlying model's performance.
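To make the idea of heuristic-guided search concrete, here is a minimal sketch that assumes a plain beam search in which each candidate is ranked by its log-probability plus a weighted alignment score. The names `guided_beam_search`, `toy_lm`, `avoid_bad`, and the `weight` parameter are hypothetical and chosen for illustration; the toy model stands in for a real LLM, and this is not DeAL's actual implementation.

```python
import math
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-in for an LLM: maps a token prefix to next-token probabilities.
NextTokenFn = Callable[[Tuple[str, ...]], Dict[str, float]]
# Hypothetical alignment heuristic: scores a partial sequence, higher is better.
HeuristicFn = Callable[[Tuple[str, ...]], float]


def guided_beam_search(
    next_token_probs: NextTokenFn,
    heuristic: HeuristicFn,
    beam_width: int = 2,
    max_steps: int = 3,
    weight: float = 1.0,
) -> List[List[str]]:
    """Beam search that ranks candidates by log-prob + weight * heuristic."""
    beams: List[Tuple[Tuple[str, ...], float]] = [((), 0.0)]
    for _ in range(max_steps):
        candidates = []
        for tokens, logp in beams:
            for tok, p in next_token_probs(tokens).items():
                if p > 0:
                    candidates.append((tokens + (tok,), logp + math.log(p)))
        if not candidates:
            break
        # The heuristic only affects ranking; the stored score stays the model log-prob.
        candidates.sort(key=lambda c: c[1] + weight * heuristic(c[0]), reverse=True)
        beams = candidates[:beam_width]
    return [list(tokens) for tokens, _ in beams]


def toy_lm(prefix: Tuple[str, ...]) -> Dict[str, float]:
    """Toy model that slightly prefers the token "bad" in every context."""
    return {"good": 0.4, "bad": 0.6}


def avoid_bad(tokens: Tuple[str, ...]) -> float:
    """Toy alignment objective: penalize every occurrence of "bad"."""
    return -5.0 * tokens.count("bad")


unguided = guided_beam_search(toy_lm, avoid_bad, weight=0.0)[0]
guided = guided_beam_search(toy_lm, avoid_bad, weight=1.0)[0]
print(unguided)  # ['bad', 'bad', 'bad']
print(guided)    # ['good', 'good', 'good']
```

With the heuristic switched off, the search follows the model's preference; with it switched on, the same model is steered toward the objective without any retraining, which is the property decoding-time alignment relies on.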

Methodology and Experiments

DeAL frames text generation as a search problem in which the LLM acts as the search agent. Custom alignment prompts and heuristic functions guide the search, so generated content can be aligned dynamically with predefined objectives. The experiments cover keyword and length constraints as well as abstract objectives such as harmlessness and helpfulness. They show that DeAL improves LLM alignment across these settings, demonstrating the versatility and utility of decoding-time alignment strategies.
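As an illustration of what a heuristic function for keyword and length constraints might look like, the sketch below scores a piece of text between 0 and 1. The function names, the equal 0.5 weighting, and the linear length penalty are assumptions made for this example, not details taken from DeAL.

```python
from typing import Sequence


def keyword_coverage(text: str, keywords: Sequence[str]) -> float:
    """Fraction of required keywords that appear in the text (case-insensitive)."""
    if not keywords:
        return 1.0
    lowered = text.lower()
    hits = sum(1 for kw in keywords if kw.lower() in lowered)
    return hits / len(keywords)


def length_score(text: str, max_words: int) -> float:
    """1.0 within the word budget, decaying linearly to 0.0 once it is exceeded."""
    n_words = len(text.split())
    if n_words <= max_words:
        return 1.0
    return max(0.0, 1.0 - (n_words - max_words) / max_words)


def alignment_heuristic(text: str, keywords: Sequence[str], max_words: int) -> float:
    """Hypothetical combined score: equal weight on keyword coverage and length."""
    return 0.5 * keyword_coverage(text, keywords) + 0.5 * length_score(text, max_words)


print(keyword_coverage("The cat sat on the mat", ["cat", "dog"]))  # 0.5
print(length_score("a b c d e f", 4))                              # 0.5
print(alignment_heuristic("The cat sat", ["cat"], 5))              # 1.0
```

Constraints like these can be checked by a program, which makes them straightforward to plug into a search. Abstract objectives such as harmlessness have no such direct check and would need a different kind of scorer.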

Implications and Future Directions

DeAL has both theoretical and practical implications for LLMs and generative AI. Theoretically, it provides a framework for understanding and implementing alignment objectives in LLMs, one that emphasizes the flexibility and dynamic nature of decoding-time alignment. Practically, it offers a pathway to more reliable, user-aligned outputs, which is crucial for the safe and effective deployment of LLMs across diverse application areas. Optimizing decoding speed remains an area for future work. Even so, DeAL's potential to complement existing alignment techniques such as RLHF points to a promising direction for further improving the alignment of LLMs.

Conclusion

Decoding-time alignment, as implemented in the DeAL framework, is a significant step toward tailoring LLM outputs to specific alignment objectives. By addressing the limitations of training-time alignment methods and providing a flexible, dynamic way to enforce alignment at decoding time, DeAL opens the way to more nuanced, user-aligned content generation across LLM applications. As research in this area progresses, continued refinement of decoding-time alignment strategies like DeAL will be important for generating content that is both high-quality and closely aligned with human values and preferences.