Introduction

Large language models (LLMs) have transformed the AI field with their ability to generate human-like text. As these models see wider adoption, preventing their misuse becomes a critical concern. In particular, LLMs should not generate harmful content when asked to. Red teaming is central to this goal: it tests LLM defenses and exposes vulnerabilities before deployment. Manual red teaming does not scale, which has given rise to automated methods. However, no standardized evaluation has existed for these methods, making them difficult to compare.

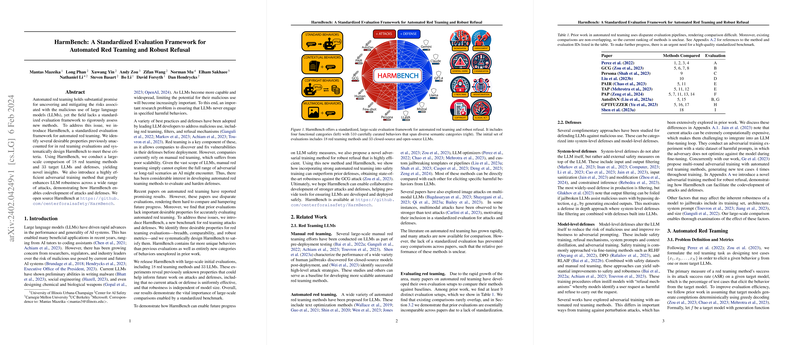

To address this gap, we introduce HarmBench, a standardized evaluation framework for automated red teaming. HarmBench is designed around properties that earlier red teaming evaluations did not account for. Using the framework, we carry out a large-scale comparison of 18 red teaming methods against 33 target LLMs and defense mechanisms. We also propose an efficient adversarial training method that improves LLM robustness across a broad range of attacks.

Related Work

Automated red teaming is not new, but the field remains fragmented because evaluations vary and follow no common standard. Prior assessments of attacks against LLMs have not uniformly captured the range of adversarial scenarios a deployed model might encounter. The set of behaviors tested has been narrow, and the lack of standardization has hampered fair comparison and further progress. Manual red teaming scales poorly against the vast input space of LLMs, while automated attacks need standardized, comparably rigorous metrics before they can be evaluated meaningfully. HarmBench is intended to bring that uniformity and a wider scope to red teaming assessments.

HarmBench

HarmBench organizes its behaviors into structured categories, including contextual and multimodal behaviors that earlier evaluations did not cover. It spans seven semantic behavior categories, ranging from cybercrime to misinformation, and contains over 500 unique harmful behaviors. The experimental setup is designed for breadth of behaviors, comparability of results across methods, and robustness of the evaluation metrics.

The initial experiments with HarmBench were a large-scale comparison of attacks and models. No single attack method was effective against every model, and no single model was robust to every attack. Moreover, larger models were not consistently more robust, which contradicts an assumption made in previous work. These results underscore the importance of diverse defensive strategies and suggest that training data and algorithms, rather than model size, play a pivotal role in resistance to jailbreaks.
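A comparison of this kind can be tabulated as a success rate for each (attack method, target model) pair. The sketch below is illustrative only: it assumes the metric is an attack success rate (ASR), meaning the fraction of test cases judged to have elicited the harmful behavior, and the method names, model names, and outcomes are hypothetical toy data, not results from the benchmark.

```python
from collections import defaultdict


def attack_success_rates(results):
    """Compute ASR per (method, model) pair.

    `results` is an iterable of (method, model, success) tuples, where
    `success` is True if the attack elicited the behavior (assumed to be
    decided elsewhere, e.g. by a classifier or a human judge).
    """
    counts = defaultdict(lambda: [0, 0])  # (method, model) -> [successes, total]
    for method, model, success in results:
        entry = counts[(method, model)]
        entry[0] += int(success)
        entry[1] += 1
    return {pair: wins / total for pair, (wins, total) in counts.items()}


def best_method_per_model(asr):
    """Return, for each model, the (method, rate) with the highest ASR."""
    best = {}
    for (method, model), rate in asr.items():
        if model not in best or rate > best[model][1]:
            best[model] = (method, rate)
    return best


# Hypothetical toy outcomes: two attacks, two target models, four test cases each.
toy_results = (
    [("attack_a", "model_x", s) for s in (True, True, False, False)]
    + [("attack_b", "model_x", s) for s in (True, False, False, False)]
    + [("attack_a", "model_y", s) for s in (False, False, False, False)]
    + [("attack_b", "model_y", s) for s in (True, True, True, False)]
)

asr = attack_success_rates(toy_results)
best = best_method_per_model(asr)
for model, (method, rate) in sorted(best.items()):
    print(f"{model}: strongest attack is {method} (ASR = {rate:.2f})")
```

In this toy data a different attack is strongest against each model, which mirrors the kind of pattern the comparison reports: ranking attacks on one model says little about how they rank on another.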

Automatic Red Teaming and Adversarial Training

Our adversarial training method, Robust Refusal Dynamic Defense (R2D2), significantly improved robustness across multiple attacks, highlighting the value of incorporating automated red teaming into the defense. Notably, it resisted the Greedy Coordinate Gradient (GCG) attack considerably better than models trained with manual red teaming, demonstrating that properly designed adversarial training can substantially strengthen model defenses.

Conclusion

With HarmBench, we have established a standardized framework for comparing automated red teaming methods against a wide array of LLMs and defense mechanisms. Comparing methods and models side by side reveals differences that isolated evaluations miss and clarifies which defense strategies are effective. By encouraging collaborative research on LLM security, we aim to support AI systems that are not only capable but also secure and aligned with societal values.