This paper introduces DeepSeekMath 7B, a LLM designed to push the limits of mathematical reasoning. The model is based on the DeepSeek-Coder-Base-v1.5 7B and is pre-trained with 120B math-related tokens from Common Crawl, natural language, and code data. A key contribution is the introduction of Group Relative Policy Optimization (GRPO), a variant of Proximal Policy Optimization (PPO) that enhances mathematical reasoning while optimizing memory usage.

The paper highlights the following achievements:

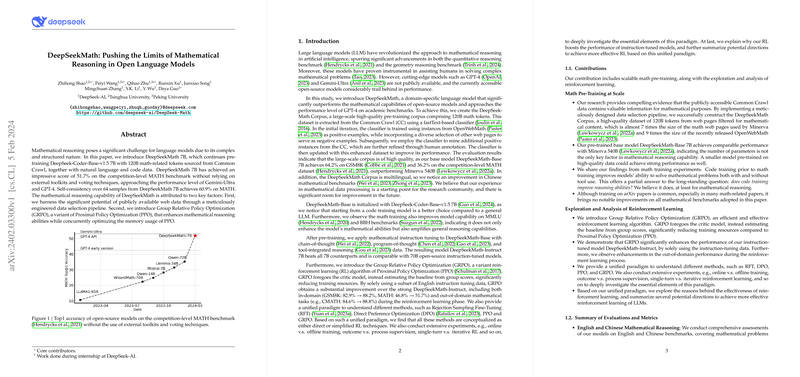

- DeepSeekMath 7B achieves 51.7\% accuracy on the MATH benchmark without external toolkits or voting, approaching the performance of Gemini-Ultra and GPT-4.

- Self-consistency over 64 samples from DeepSeekMath 7B reaches 60.9\% on MATH.

- The model demonstrates proficiency in other mathematical reasoning benchmarks such as GSM8K, SAT, C-Math, and Gaokao.

The authors attribute the model's mathematical reasoning capability to two factors:

- The use of publicly available web data through a data selection pipeline.

- The introduction of GRPO.

The paper argues that code training improves a model's ability to solve mathematical problems with and without tool use. The paper also argues that while training on arXiv papers is common, it brought no notable improvements on all mathematical benchmarks adopted in this paper.

The paper also introduces the GRPO algorithm, a variant reinforcement learning (RL) algorithm of PPO, which reduces training resources by foregoing the critic model and estimating the baseline from group scores. The authors provide a unified paradigm to understand different methods such as Rejection Sampling Fine-Tuning (RFT), Direct Preference Optimization (DPO), PPO, and GRPO, conceptualizing them as direct or simplified RL techniques.

The paper also includes:

- A discussion on why RL boosts the performance of instruction-tuned models.

- Potential directions to achieve more effective RL based on the unified paradigm.

The key contributions of the work are:

- Scalable math pre-training, including the DeepSeekMath Corpus, a high-quality dataset of 120B tokens extracted from Common Crawl.

- The pre-trained base model DeepSeekMath-Base 7B achieves performance comparable to Minerva 540B.

- The introduction of GRPO, an efficient and effective RL algorithm that enhances performance while reducing training resources.

- A unified paradigm for understanding different methods like RFT, DPO, and PPO.

The authors evaluated their models on a range of English and Chinese mathematical reasoning benchmarks, including GSM8K, MATH, SAT, C-Math, and Gaokao. They also assessed the models' ability to generate self-contained text solutions, solve problems using Python, and conduct formal theorem proving using miniF2F with Isabelle.

The data collection process for the DeepSeekMath Corpus involved an iterative pipeline, starting with OpenWebMath as a seed corpus. A fastText model was trained to identify more mathematical web pages from Common Crawl. The collected pages were ranked, and only the top-ranking ones were preserved. The process was repeated iteratively, with human annotation used to refine the seed corpus and improve the performance of the fastText model. Benchmark contamination was avoided by filtering out web pages containing questions or answers from English and Chinese mathematical benchmarks.

The authors compared the DeepSeekMath Corpus with other math-training corpora, including MathPile, OpenWebMath, and Proof-Pile-2. They found that the DeepSeekMath Corpus was of high quality, covered multilingual mathematical content, and was the largest in size. The evaluation results showed that the model trained on the DeepSeekMath Corpus had a clear performance lead.

The training data for the RL phase consisted of chain-of-thought-format questions related to GSM8K and MATH from the supervised fine-tuning (SFT) data. The reward model was trained based on the DeepSeekMath-Base 7B. GRPO was used to update the policy model, and DeepSeekMath-RL 7B was evaluated on various benchmarks. The results showed that DeepSeekMath-RL 7B achieved significant performance gains, surpassing all open-source models and the majority of closed-source models.

The authors investigated the effect of code training on mathematical reasoning by experimenting with two-stage and one-stage training settings. They found that code training improved program-aided mathematical reasoning under both settings. Code training also improved mathematical reasoning without tool use, but combining code tokens and math tokens for one-stage training compromised performance. They also found that arXiv papers were ineffective in improving mathematical reasoning in their experiments.

The authors provide a unified paradigm to analyze different training methods, such as SFT, RFT, DPO, PPO, and GRPO, and conduct experiments to explore the factors of the unified paradigm. They divide the data source into online sampling and offline sampling, and find that online RFT significantly outperforms RFT. They also highlight the efficiency of altering positive and negative gradient coefficients in GRPO. Furthermore, they explore iterative RL and find that it significantly improves performance.

The authors evaluate the Pass@K and Maj@K accuracy of the Instruct and RL models and find that RL enhances Maj@K's performance but not Pass@K. They suggest that RL enhances the model's overall performance by rendering the output distribution more robust. They also discuss how to achieve more effective RL, focusing on data source, algorithms, and reward function.