Introduction

A recently developed system, MOE-INFINITY, introduces a cost-efficient serving strategy for mixture-of-experts (MoE) models. It addresses a critical challenge in MoE deployment: the very large number of model parameters, which drives up memory costs. MOE-INFINITY works within these memory constraints using an offloading technique that differs from those used in existing systems because it is tailored to the way MoE models activate their experts.

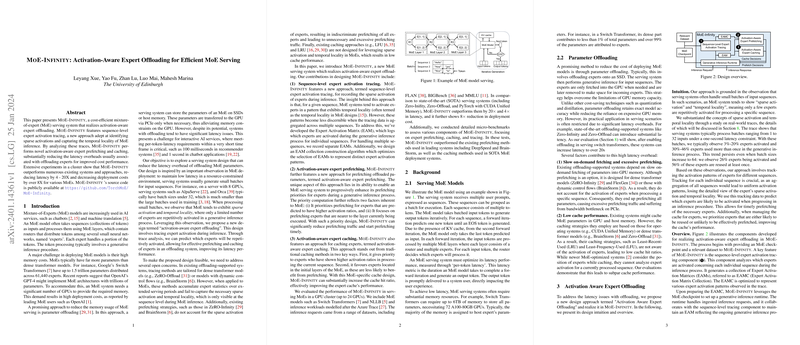

Sequence-Level Expert Activation Tracing

Many current offloading systems target dense models and lack the granularity that MoEs require. MOE-INFINITY closes this gap with sequence-level expert activation tracing, which preserves the sparse activation and temporal locality that are characteristic of MoEs. By combining this fine-grained tracing with an optimized algorithm for constructing the Expert Activation Matrix Collection (EAMC), the system captures a range of activation patterns across sequences and uses them to predict, and prefetch, the experts that upcoming layers will need.
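The tracing idea can be illustrated with a small sketch. It assumes that an expert activation matrix (EAM) for one sequence is a layers-by-experts table counting how many tokens were routed to each expert, and that prediction works by matching the partially observed EAM of the current sequence against stored EAMs with cosine similarity. The trace format, the FIFO replacement in `EAMCollection`, and the helper `predict_experts` are hypothetical simplifications, not MOE-INFINITY's actual construction algorithm.

```python
import math


def build_eam(routing, num_layers, num_experts):
    """Build an EAM for one sequence from (layer, expert) routing events.

    Entry [l][e] counts how many tokens were routed to expert e at MoE layer l.
    The (layer, expert) trace format is a hypothetical simplification.
    """
    eam = [[0] * num_experts for _ in range(num_layers)]
    for layer, expert in routing:
        eam[layer][expert] += 1
    return eam


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def flatten(rows):
    return [v for row in rows for v in row]


class EAMCollection:
    """Hypothetical fixed-capacity store of EAMs from past sequences."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.eams = []

    def add(self, eam):
        # Simplification: FIFO replacement, not the paper's optimized construction.
        if len(self.eams) >= self.capacity:
            self.eams.pop(0)
        self.eams.append(eam)

    def match(self, partial_eam, layers_seen):
        """Return the stored EAM most similar on the layers executed so far."""
        query = flatten(partial_eam[:layers_seen])
        best, best_score = None, -1.0
        for eam in self.eams:
            score = cosine(query, flatten(eam[:layers_seen]))
            if score > best_score:
                best, best_score = eam, score
        return best


def predict_experts(eamc, partial_eam, layers_seen, target_layer):
    """Experts the best-matching past sequence activated at target_layer."""
    match = eamc.match(partial_eam, layers_seen)
    if match is None:
        return []
    return [e for e, count in enumerate(match[target_layer]) if count > 0]


# Usage: two past sequences over 3 MoE layers with 4 experts each.
eamc = EAMCollection(capacity=2)
eamc.add(build_eam([(0, 1), (1, 2), (2, 3)], 3, 4))
eamc.add(build_eam([(0, 0), (1, 0), (2, 0)], 3, 4))
current = build_eam([(0, 1)], 3, 4)  # only layer 0 has executed so far
print(predict_experts(eamc, current, layers_seen=1, target_layer=1))  # -> [2]
```

Matching on the layers already executed lets the prediction sharpen as a sequence progresses, which is what makes per-sequence tracing useful for prefetching.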

Activation-Aware Expert Prefetching and Caching

The system applies activation-aware strategies to both the prefetching and the caching of offloaded parameters to reduce latency. When prefetching, MOE-INFINITY weighs how likely an expert is to be activated and how close that expert's layer is to the layer currently executing. Caching follows the same principle: the system prioritizes experts according to their past activation ratios and their position among the MoE layers, which raises the cache hit ratio and lowers execution latency.
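The two priorities described above can be sketched as simple scoring functions. The exact formulas here are assumptions for illustration: the prefetch score multiplies an expert's predicted activation ratio by a factor that shrinks with its layer distance from the current layer, and the cache keeps experts whose activation ratio, weighted toward earlier layers, is highest. The earlier-layer weighting is a hypothetical choice (motivated by the idea that prefetching has less lead time to hide loads for early layers), and all function and class names are invented for this sketch.

```python
def prefetch_priority(activation_ratio, expert_layer, current_layer, num_layers):
    """Hypothetical score: likely experts in nearby upcoming layers rank first."""
    distance = expert_layer - current_layer  # positive for upcoming layers
    return activation_ratio * (1.0 - distance / num_layers)


def prefetch_order(predicted_ratios, current_layer, num_layers):
    """predicted_ratios maps (layer, expert) -> predicted activation ratio.

    Returns upcoming experts sorted by descending prefetch priority.
    """
    candidates = [
        (prefetch_priority(r, layer, current_layer, num_layers), (layer, expert))
        for (layer, expert), r in predicted_ratios.items()
        if layer > current_layer and r > 0
    ]
    return [key for _, key in sorted(candidates, reverse=True)]


class ActivationAwareCache:
    """Fixed-capacity GPU expert cache with a hypothetical eviction score."""

    def __init__(self, capacity, num_layers):
        self.capacity = capacity
        self.num_layers = num_layers
        self.ratios = {}  # (layer, expert) -> most recent activation ratio
        self.resident = set()

    def _score(self, key):
        layer, _ = key
        # Assumption: weight earlier layers more heavily, since prefetching
        # has less time to hide their loading latency.
        return self.ratios.get(key, 0.0) * (1.0 - layer / self.num_layers)

    def access(self, key, ratio):
        """Record an activation of `key`; return True on a cache hit."""
        self.ratios[key] = ratio
        if key in self.resident:
            return True
        if len(self.resident) >= self.capacity:
            # Evict the resident expert with the lowest priority.
            self.resident.remove(min(self.resident, key=self._score))
        self.resident.add(key)
        return False
```

For example, with `current_layer=0` and `num_layers=4`, an expert in layer 1 with a predicted ratio of 0.9 (score 0.675) is fetched before an equally likely expert in layer 3 (score 0.225), because the nearer layer will execute sooner.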

Evaluation and Performance

MOE-INFINITY outperforms the existing systems it was compared against in both latency and cost. In extensive experiments across different model sizes, batch sizes, and dataset characteristics, it reduces latency by 4-20X and cuts deployment costs by more than 8X. Its design elements, namely the EAMC and the continuous refinement of prefetching priorities, keep performance robust even when the serving workload undergoes distribution shifts. MOE-INFINITY's efficiency improves further on multi-GPU servers, and it is expected to benefit from upcoming serving servers that offer higher bandwidth between host memory and GPU memory.

Conclusion

In summary, MOE-INFINITY reflects a carefully designed response to the memory-intensive demands of MoE models. By tailoring offloading, prefetching, and caching strategies specifically to leverage MoE structures, it paves the way for high-throughput, cost-effective AI services. The system's strength lies in its ability to adapt to varying MoE architectures and serving workloads, making it an innovative platform for future explorations into MoE-optimized deployment systems.