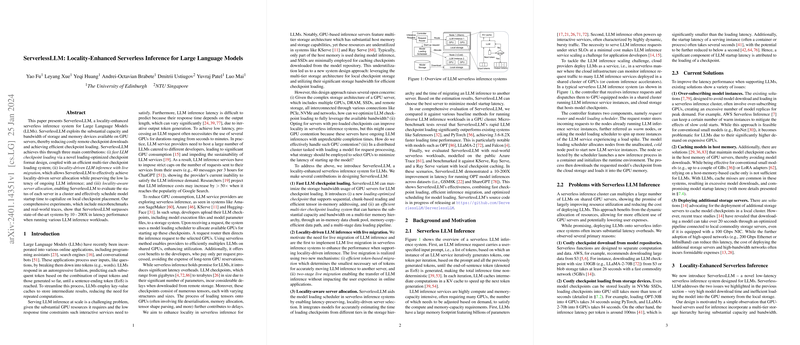

Overview of ServerlessLLM

ServerlessLLM is a locality-enhanced serverless inference system built specifically for LLMs. It makes use of the underutilized storage bandwidth and capacity on GPU servers, which reduces remote checkpoint downloads and shortens checkpoint loading times.

Checkpoint Loading Optimization

At the core of ServerlessLLM's design are a loading-optimized checkpoint format and a multi-tier checkpoint loading system, which together make fuller use of the storage bandwidth on GPU servers when loading LLM checkpoints. A loading function bridges LLM libraries and ServerlessLLM's model manager, enabling fast, direct data transfer from storage to GPUs. As a result, ServerlessLLM loads checkpoints substantially faster than existing loaders such as PyTorch and Safetensors across various LLM workloads.
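The multi-tier idea can be illustrated with a minimal sketch. This is not ServerlessLLM's actual loader or API: the `Tier` and `MultiTierLoader` classes are hypothetical, in-memory dictionaries stand in for DRAM, SSD, and remote storage, and capacity limits and eviction are ignored. It only shows the lookup order (fastest tier first) and how a checkpoint fetched from a slow tier might be kept in faster tiers for later loads.

```python
from typing import Dict, List, Tuple


class Tier:
    """Hypothetical storage tier; a dict stands in for the real medium."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.store: Dict[str, bytes] = {}


class MultiTierLoader:
    """Toy loader: searches tiers from fastest to slowest (an assumption)."""

    def __init__(self, tiers: List[Tier]) -> None:
        self.tiers = tiers  # ordered fastest first, e.g. DRAM, SSD, remote

    def load(self, model_id: str) -> Tuple[bytes, str]:
        for index, tier in enumerate(self.tiers):
            data = tier.store.get(model_id)
            if data is None:
                continue
            # Copy the checkpoint into every faster tier so the next
            # load of the same model is served locally.
            for faster in self.tiers[:index]:
                faster.store[model_id] = data
            return data, tier.name
        raise KeyError(f"checkpoint {model_id!r} not found in any tier")


dram, ssd, remote = Tier("dram"), Tier("ssd"), Tier("remote")
remote.store["example-model"] = b"checkpoint-bytes"
loader = MultiTierLoader([dram, ssd, remote])

first = loader.load("example-model")   # served from the remote tier
second = loader.load("example-model")  # served from DRAM after promotion
```

In this sketch the first load falls through to the remote tier and the second is served from DRAM, which is the locality effect the section describes.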

Locality-Driven Inference and Live Migration

ServerlessLLM introduces live migration for LLM inference in serverless systems, so that servers can be allocated by locality while latency stays low. Two mechanisms make this possible: an efficient token-based migration, which identifies the minimal set of tokens needed to transfer an inference accurately, and a two-stage live migration process, which moves an ongoing LLM inference to another server without affecting user experience. With these, ServerlessLLM can allocate servers dynamically based on locality, and it achieves lower latency than approaches that rely on predicting model inference time or that preempt ongoing inferences.
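A toy sketch can make the token-based, two-stage idea concrete. Everything here is an assumption made for illustration: `next_token` is a deterministic stand-in for a real model, `Worker.ingest` stands in for rebuilding inference state (such as a KV cache) from tokens, and the exact split into two stages is one plausible reading of the description above, not ServerlessLLM's implementation. The point is that only tokens are transferred, and the migrated run produces the same output as an unmigrated one.

```python
from typing import List


def next_token(tokens: List[int]) -> int:
    """Deterministic toy stand-in for a model's next-token step."""
    return (sum(tokens) * 31 + len(tokens)) % 1000


class Worker:
    """Hypothetical inference worker whose state is derived from tokens."""

    def __init__(self) -> None:
        self.tokens: List[int] = []

    def ingest(self, tokens: List[int]) -> None:
        # Stand-in for recomputing inference state from received tokens.
        self.tokens.extend(tokens)

    def step(self) -> int:
        token = next_token(self.tokens)
        self.tokens.append(token)
        return token


def run_without_migration(prompt: List[int], n: int) -> List[int]:
    worker = Worker()
    worker.ingest(prompt)
    return [worker.step() for _ in range(n)]


def run_with_migration(prompt: List[int], n: int,
                       migrate_at: int, overlap_steps: int) -> List[int]:
    source = Worker()
    source.ingest(prompt)
    output = [source.step() for _ in range(migrate_at)]

    # Stage 1: send a token snapshot; the source keeps decoding while
    # the destination rebuilds its state from the snapshot.
    snapshot = list(source.tokens)
    destination = Worker()
    destination.ingest(snapshot)
    output += [source.step() for _ in range(overlap_steps)]

    # Stage 2: the source stops and sends only the tokens produced since
    # the snapshot; the destination catches up and takes over.
    gap = source.tokens[len(snapshot):]
    destination.ingest(gap)
    output += [destination.step() for _ in range(n - len(output))]
    return output


baseline = run_without_migration([1, 2, 3], 10)
migrated = run_with_migration([1, 2, 3], 10, migrate_at=3, overlap_steps=2)
```

Because the destination ends up with exactly the token sequence the source had at handoff, `migrated` matches `baseline` in this toy setting, and the source is only paused for the short second stage.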

Locality-Aware Server Allocation

ServerlessLLM integrates estimation models that accurately predict two quantities: the time to load a checkpoint from each storage tier, and the time to migrate an ongoing LLM inference to another server. Using these estimates, it schedules models to take advantage of local checkpoint placement. The scheduler evaluates the status of each server in the cluster and allocates the resources that minimize startup latency.
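The scheduling decision can be sketched as a simple cost comparison. The formulas and numbers below are illustrative assumptions, not values or models from ServerlessLLM: loading time is approximated as checkpoint size divided by a made-up per-tier bandwidth, migration time as a made-up per-token cost, and the `Server` fields are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical per-tier bandwidths in GB/s, chosen only for illustration.
TIER_BANDWIDTH_GBPS = {"dram": 20.0, "ssd": 5.0, "remote": 1.0}


@dataclass
class Server:
    name: str
    checkpoint_tier: str                # fastest tier holding the checkpoint
    busy_tokens: Optional[int] = None   # tokens of a running inference, None if idle


def estimate_load_seconds(size_gb: float, tier: str) -> float:
    """Assumed model: loading time = checkpoint size / tier bandwidth."""
    return size_gb / TIER_BANDWIDTH_GBPS[tier]


def estimate_migration_seconds(tokens: int,
                               seconds_per_token: float = 0.001) -> float:
    """Assumed model: migration time grows linearly with token count."""
    return tokens * seconds_per_token


def estimate_startup_seconds(server: Server, size_gb: float) -> float:
    load = estimate_load_seconds(size_gb, server.checkpoint_tier)
    if server.busy_tokens is None:
        return load
    # A busy server must first migrate its running inference elsewhere.
    return load + estimate_migration_seconds(server.busy_tokens)


def pick_server(servers: List[Server], size_gb: float) -> Server:
    """Choose the server with the lowest estimated startup latency."""
    return min(servers, key=lambda s: estimate_startup_seconds(s, size_gb))


cluster = [
    Server("a", "remote"),                # idle, checkpoint must be downloaded
    Server("b", "dram", busy_tokens=500), # busy, checkpoint already in DRAM
    Server("c", "ssd"),                   # idle, checkpoint on local SSD
]
chosen = pick_server(cluster, size_gb=10.0)
```

With these made-up numbers, the busy server holding the checkpoint in DRAM is still the cheapest choice, since migrating its running inference costs less than loading the checkpoint from SSD or remote storage.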

Comprehensive Experiments and Results

ServerlessLLM is evaluated through microbenchmarks and real-world traces. The experiments compare ServerlessLLM against baseline methods such as Safetensors and PyTorch, and run diverse LLM inference workloads on a GPU cluster. ServerlessLLM shows a 10-200x improvement in latency over state-of-the-art systems, which supports the effectiveness of its model loading, inference migration, and server allocation strategy.

With this design and the measured performance gains, ServerlessLLM is positioned as a strong option for efficient and cost-effective LLM inference services, and as a basis for more scalable and responsive AI-powered applications.