Background on Prompting Topologies

Prompting techniques have substantially improved the ability of large language models (LLMs) to solve complex tasks. One core innovation driving this progress has been the structuring of LLMs' thought processes using topologies: specifically, chains, trees, and graphs. This structuring has been shown to improve LLMs' ability to produce elaborately reasoned outcomes.

Evolution of Reasoning Structures

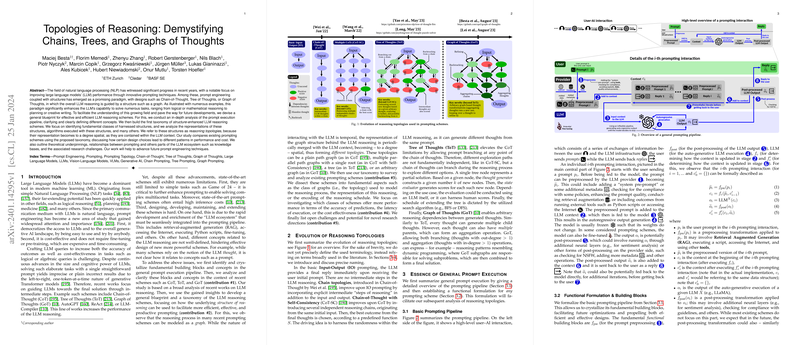

The recent shift from basic Input-Output (IO) prompting to more structured reasoning schemes such as Chain-of-Thought (CoT), Tree of Thoughts (ToT), and Graph of Thoughts (GoT) has marked a turning point in prompting methodologies. These structures guide LLMs through a more systematic reasoning process, paving the way for advanced prompt engineering. Dissecting prompt execution schemes into their constituent parts makes the different prompting structures easier to understand and helps compare performance patterns across design choices.
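The difference between the three topologies can be made concrete by treating each thought as a node and each "derived from" dependency as a directed edge. The following is a minimal sketch, not the paper's formalism: the function name `classify_topology`, the edge-list representation, and the example thought names are all illustrative assumptions. It also assumes the edges form a connected, acyclic structure.

```python
from collections import defaultdict


def classify_topology(edges):
    """Label a list of directed (parent, child) thought edges.

    Hypothetical helper: assumes the edges are connected and acyclic.
    """
    out_degree = defaultdict(int)
    in_degree = defaultdict(int)
    for parent, child in edges:
        out_degree[parent] += 1
        in_degree[child] += 1

    # A thought with several parents aggregates earlier thoughts,
    # which a chain or a tree cannot express.
    if any(count > 1 for count in in_degree.values()):
        return "graph"
    # A thought with several children branches into alternatives.
    if any(count > 1 for count in out_degree.values()):
        return "tree"
    return "chain"


# Illustrative structures in the style of CoT, ToT, and GoT.
cot_edges = [("t1", "t2"), ("t2", "t3")]
tot_edges = [("root", "a"), ("root", "b"), ("a", "a1"), ("a", "a2")]
got_edges = tot_edges + [("a1", "merged"), ("b", "merged")]

labels = [classify_topology(e) for e in (cot_edges, tot_edges, got_edges)]
print(labels)  # ['chain', 'tree', 'graph']
```

In this sketch, branching (several children) separates a tree from a chain, and aggregation (several parents) separates a general graph from a tree.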

Prompt Execution and Topologies

The execution pipeline influences how effectively LLMs comprehend problems and generate solutions. A functional blueprint for optimizing prompting includes preprocessing transformations, context updates, output post-processing, and the encapsulation of reasoning structures within prompts. By conceptualizing these reasoning structures as graphs, it becomes feasible to assess the structural characteristics of the reasoning process and how they shape performance.
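The pipeline stages named above can be illustrated with a short sketch. Everything here is an assumption made for illustration: `run_prompt_pipeline`, the `fake_llm` stand-in, the prompt wording, and the `Answer:` convention are hypothetical and do not come from the paper or from any real LLM API.

```python
def run_prompt_pipeline(question, llm, context=None):
    """Run one prompt through a hypothetical four-stage pipeline."""
    context = list(context or [])

    # Preprocessing transformation: collapse stray whitespace.
    cleaned = " ".join(question.split())

    # Reasoning structure encapsulated in the prompt: request a chain
    # of steps followed by a marked final answer.
    prompt = "\n".join(
        context
        + [f"Question: {cleaned}",
           "Think step by step, then finish with 'Answer: <result>'."]
    )
    raw_output = llm(prompt)

    # Context update: record the exchange for later prompts.
    context.append(f"Q: {cleaned}")
    context.append(f"A: {raw_output}")

    # Output post-processing: keep only the text after the last marker.
    answer = raw_output.split("Answer:")[-1].strip()
    return answer, context


def fake_llm(prompt):
    """Stand-in for a real model call; returns a fixed response."""
    return "Step 1: 2 + 2 equals 4.\nAnswer: 4"


answer, context = run_prompt_pipeline("  What is   2 + 2? ", fake_llm)
print(answer)  # 4
```

Replacing `fake_llm` with a real model call, or swapping the step-by-step instruction for a branching or aggregating scheme, changes the reasoning structure while the surrounding stages stay the same.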

Insights and Theoretical Approaches

Recent research has sought to provide theoretical foundations for understanding how structured prompts facilitate reasoning in LLMs. Analyses of the relationships between thoughts within an LLM's context suggest that representing reasoning as different topological structures can make reasoning more efficient and effective. Theoretical accounts, such as the view of in-context learning as implicit structure induction, further support the development of future prompting techniques.

Future Research Opportunities

The paper calls for further exploration of how to maximize the potential of structure-enhanced prompting. This includes refining existing approaches for single prompts, investigating new graph classes, and integrating prompting techniques with complex system architectures. Moreover, hardware acceleration and the combination of graph neural networks (GNNs) with LLMs offer interesting directions that could further improve the reasoning abilities of LLMs.

Conclusion

In summary, enhancing LLMs through structured prompting topologies has notably improved their reasoning capabilities across various tasks and domains, maturing the field of prompt engineering. The blueprint for effective prompting designs, as elucidated in this paper, paves the way for future research and development in generative AI and LLMs.