Introduction to WorldDreamer

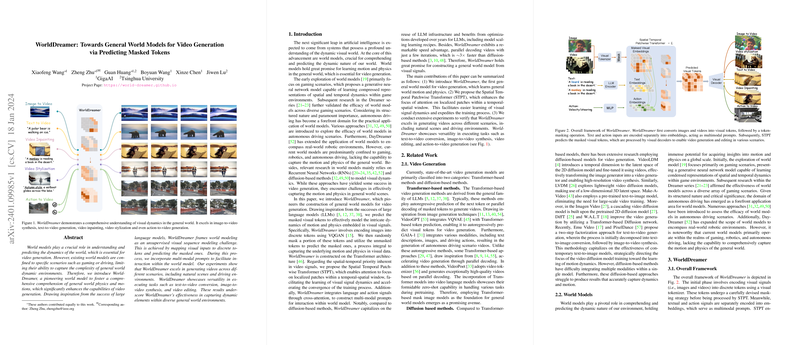

WorldDreamer is a model for generating dynamic video content. Earlier models are often restricted to specific domains such as gaming or autonomous driving, whereas WorldDreamer targets a wide range of real-world scenarios. Its core idea, inspired by the recent success of large language models (LLMs), is to treat visual inputs as discrete tokens and train the model to predict the tokens that are masked. This paper describes the architecture, the methods used, and the capabilities of WorldDreamer, and shows how they could change the approach to video generation tasks.

Conceptual Architecture

At the core of WorldDreamer is the Spatial Temporal Patchwise Transformer (STPT), which concentrates attention on localized patches across the spatial and temporal dimensions so that the model can represent motion and physics in videos more precisely. The model uses VQGAN to encode images into discrete tokens and processes them with a Transformer architecture of the kind used in LLMs. This makes learning more efficient and gives a speed advantage over existing diffusion-based models: video generation is reported to be about three times faster.
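
To make the idea of patchwise attention concrete, the following minimal sketch builds a boolean attention mask over a small grid of video tokens. It is only an illustration under stated assumptions, not WorldDreamer's actual STPT: the names `patch_id` and `build_patchwise_mask`, the window size, and the rule that a token attends to every token in the same spatial window across all frames are all hypothetical choices.

```python
from itertools import product


def patch_id(h, w, win):
    """Index of the spatial window that contains grid cell (h, w)."""
    return (h // win, w // win)


def build_patchwise_mask(frames, height, width, win):
    """Return (coords, mask); mask[i][j] is True when token i may attend to token j.

    Assumption: attention is restricted to tokens that share a spatial
    window, across all frames. The real STPT may partition differently.
    """
    coords = list(product(range(frames), range(height), range(width)))
    mask = [
        [patch_id(hi, wi, win) == patch_id(hj, wj, win) for (_, hj, wj) in coords]
        for (_, hi, wi) in coords
    ]
    return coords, mask


# 2 frames of 4x4 tokens, split into 2x2 spatial windows.
coords, mask = build_patchwise_mask(frames=2, height=4, width=4, win=2)
allowed = sum(row.count(True) for row in mask)
print(f"{allowed} of {len(coords) ** 2} token pairs are attended")
```

In this toy setting each token attends to only a quarter of the sequence, which hints at why local attention can be cheaper than full attention over every video token.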

Diverse Applications and Promising Results

WorldDreamer can perform a range of video generation tasks, including text-to-video generation, image-to-video synthesis, and video editing. It is not limited to conventional settings: it also generates realistic natural-scene videos and handles the intricacies of autonomous driving datasets. Results from extensive experiments indicate that WorldDreamer generates coherent, dynamic videos, underscoring the model's adaptability and its understanding of varied world environments.

Advanced Training Strategies and Implementation

A notable aspect of WorldDreamer is its training approach, which applies dynamic masking strategies to visual tokens. Because the model learns to fill in masked tokens, it can sample many tokens in parallel during video generation, which reduces generation time and sets WorldDreamer apart from existing methods. The model is trained on curated datasets, including a deduplicated subset of the LAION-2B image dataset, high-quality video datasets, and autonomous driving data from the NuScenes dataset. Training uses the AdamW optimizer with learning rate adjustments, and Classifier-Free Guidance (CFG) is used to steer the generated content toward high fidelity.
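
The pieces described above can be sketched in a few lines of plain Python. This is a hedged illustration, not the authors' implementation: the `MASK` id, the masking-ratio range, the helper names, and the cosine unmasking schedule (borrowed from common masked-token decoders) are assumptions. The CFG formula is the standard one, which combines conditional and unconditional logits.

```python
import math
import random

MASK = -1  # hypothetical id standing in for the [MASK] token


def mask_tokens(tokens, ratio, rng):
    """Replace a random fraction `ratio` of tokens with MASK; return (masked, positions)."""
    n_mask = max(1, round(ratio * len(tokens)))
    positions = set(rng.sample(range(len(tokens)), n_mask))
    masked = [MASK if i in positions else t for i, t in enumerate(tokens)]
    return masked, positions


def cfg_logits(cond, uncond, scale):
    """Classifier-free guidance: move logits from unconditional toward conditional."""
    return [u + scale * (c - u) for c, u in zip(cond, uncond)]


def tokens_left_masked(step, total_steps, n_tokens):
    """Tokens still masked after `step` of `total_steps` (assumed cosine schedule)."""
    return math.floor(n_tokens * math.cos(math.pi / 2 * step / total_steps))


rng = random.Random(0)
tokens = list(range(100, 116))   # 16 stand-in visual token ids
ratio = rng.uniform(0.3, 1.0)    # "dynamic" masking: ratio resampled per example (assumed range)
masked, positions = mask_tokens(tokens, ratio, rng)

guided = cfg_logits(cond=[2.0, 0.5], uncond=[1.0, 1.0], scale=3.0)
schedule = [tokens_left_masked(s, 4, 16) for s in range(1, 5)]
print(masked, guided, schedule)
```

With a schedule like this, all 16 tokens are filled in over four parallel steps instead of sixteen sequential ones, which is the intuition behind the speed advantage of parallel sampling.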

Final Thoughts

WorldDreamer is a significant step forward in video generation: it generates videos efficiently by predicting masked visual tokens. Its adoption of LLM optimization techniques, its fast generation, and its training on diverse datasets make it a powerful tool for creating realistic and intricate videos. Its potential applications range from entertainment to the development of advanced driver-assistance systems.