Introduction to INTERS

Large language models (LLMs) have made strides across many natural language processing (NLP) tasks, but their performance on information retrieval (IR) tasks has been inconsistent, especially when compared to smaller models. The discrepancy is attributed to the complexity of IR-specific concepts and their rarity in natural language data, which makes them difficult for LLMs to comprehend. Instruction tuning is emerging as a way to overcome these challenges and enhance the IR capabilities of LLMs.

Enhancing LLMs' IR Performance

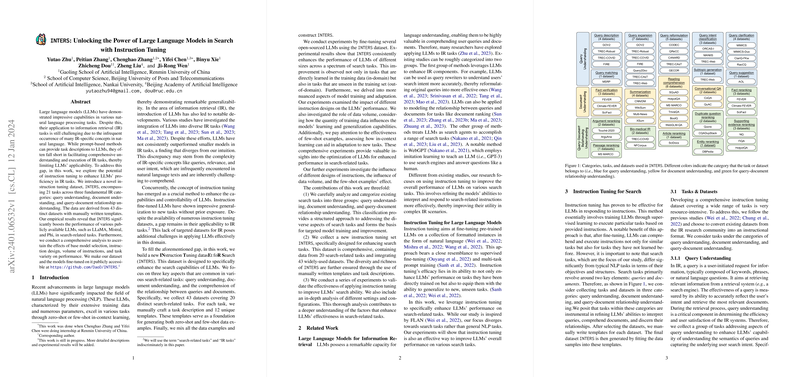

To address the challenges LLMs face on IR tasks, the researchers created a dataset called INTERS (INstruction Tuning datasEt foR Search), designed to refine the search abilities of LLMs. The dataset covers 21 tasks derived from 43 unique datasets. These tasks target three skills: query understanding, document understanding, and understanding the relationship between queries and documents. The goal of INTERS is to give LLMs a foundation for instruction tuning on search-related tasks specifically, unlocking their potential in this domain.
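To make the idea of an instruction-tuning record concrete, here is a minimal sketch of how a raw (query, document, label) example could be turned into a prompt and completion pair for a query-document relationship task. The template wording, the `InstructionSample` class, and the task name are hypothetical illustrations, not the actual INTERS format.

```python
from dataclasses import dataclass


@dataclass
class InstructionSample:
    """One instruction-tuning record (hypothetical schema, not the INTERS one)."""
    task: str
    prompt: str
    completion: str


# Hypothetical template for a query-document relevance task.
RELEVANCE_TEMPLATE = (
    "Judge whether the document answers the query.\n"
    "Query: {query}\n"
    "Document: {document}\n"
    "Answer yes or no."
)


def build_relevance_sample(query: str, document: str, relevant: bool) -> InstructionSample:
    """Fill the template with one labeled example."""
    prompt = RELEVANCE_TEMPLATE.format(query=query, document=document)
    completion = "yes" if relevant else "no"
    return InstructionSample(
        task="query_document_relevance",
        prompt=prompt,
        completion=completion,
    )


sample = build_relevance_sample(
    query="capital of France",
    document="Paris is the capital of France.",
    relevant=True,
)
print(sample.prompt)
print(sample.completion)
```

A model fine-tuned on many such records, spread across different tasks and phrasings, sees IR concepts expressed as natural language instructions rather than as bare labels.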

Empirical Results and Dataset Accessibility

INTERS establishes a new benchmark for enabling LLMs to perform search tasks more effectively, and it also helps models generalize to tasks they were not directly trained on. Publicly available LLMs such as LLaMA, Mistral, and Phi show significant performance gains when fine-tuned on INTERS. To support transparency and further research, the INTERS dataset and the fine-tuned models are publicly available, so the research community can replicate and build on the results.

Deep Dive into Experimentation

Through a series of experiments, the researchers examined three factors: the design of the instructions, the volume of training data, and the variety of tasks. They found that detailed task descriptions and diverse instruction data are vital in instruction tuning. Few-shot examples, in which the model is shown a few demonstrations of a task, also help models adapt to new tasks remarkably well. Together, these experiments improve the understanding of how to optimize LLMs for IR tasks.
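The two prompt ingredients discussed above, a task description and few-shot examples, can be sketched as a small prompt builder. This is an illustrative assumption about how such prompts might be assembled; the function name `build_prompt`, the `Input:`/`Output:` layout, and the example task are hypothetical and not taken from INTERS.

```python
from typing import List, Tuple


def build_prompt(
    task_description: str,
    examples: List[Tuple[str, str]],
    new_input: str,
) -> str:
    """Assemble a prompt from a task description, optional demonstrations,
    and the input the model should complete."""
    parts = []
    if task_description:
        parts.append(task_description.strip())
    # Each demonstration is an (input, output) pair shown before the real input.
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}\nOutput: {example_output}")
    # The final block leaves the output empty for the model to fill in.
    parts.append(f"Input: {new_input}\nOutput:")
    return "\n\n".join(parts)


description = "Rewrite the search query so that it is more specific."

zero_shot = build_prompt(description, [], "jaguar speed")
few_shot = build_prompt(
    description,
    [("apple price", "current price of Apple Inc. stock")],
    "jaguar speed",
)

print(few_shot)
```

Passing an empty list of examples gives the zero-shot variant, which makes it easy to compare both settings on the same task.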

In conclusion, INTERS is a specialized instruction tuning dataset with a comprehensive design tailored to search tasks. It improves performance across a wide range of search-related tasks and clarifies the factors that influence model optimization for IR. The release of INTERS and the models fine-tuned on it should be valuable to researchers and practitioners working to extend LLM applications in search.