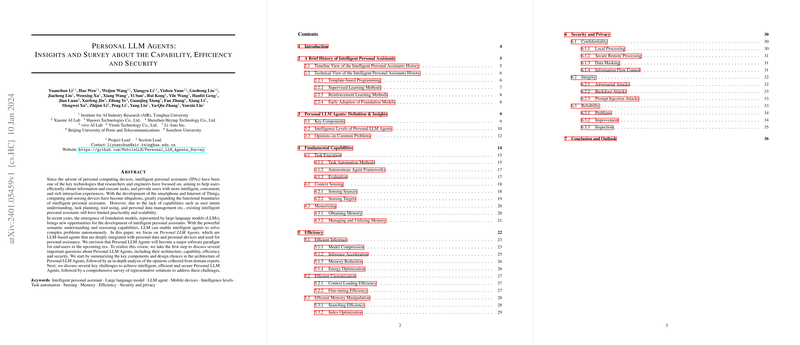

Introduction to Personal LLM Agents

Intelligent personal assistants (IPAs), the digital aides found on various devices, have improved substantially over the years. Yet they often fall short in understanding complex user intentions and executing tasks beyond simple commands. Foundation models such as large language models (LLMs) promise to overcome these limitations. The paper "Personal LLM Agents: Insights and Survey about the Capability, Efficiency, and Security" contributes to the emerging discussion of how LLMs can enhance IPAs. It explores the architecture and intelligence levels of what the authors term Personal LLM Agents: agents deeply integrated with personal data and devices to provide personalized assistance.

Architecture and Intelligence Levels of Personal LLM Agents

The core component of a Personal LLM Agent is a foundation model that connects various skills, manages local resources, and maintains user-related information. The agent's intelligence is tiered across five levels, ranging from simple step-following to representing users in complex affairs. The levels trace a progression from basic command execution to advanced self-evolution and interpersonal interaction.
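As a rough illustration of that architecture, the following minimal Python sketch shows a central component routing a request to a registered skill while recording user-related information. Every name in it (`PersonalAgent`, `choose_skill`, `register`, `handle`) is hypothetical and does not come from the paper. The keyword matcher is only a stand-in for the foundation model, and local resource management is omitted.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# Hypothetical names throughout: this is an illustrative sketch,
# not the architecture or API described in the paper.
Skill = Callable[[str], str]


def choose_skill(request: str, skills: Dict[str, Skill]) -> Optional[str]:
    """Stand-in for the foundation model: pick a skill by keyword match."""
    lowered = request.lower()
    for name in skills:
        if name in lowered:
            return name
    return None  # no registered skill matches the request


@dataclass
class PersonalAgent:
    # Skills the central model can connect to (e.g. alarms, messaging).
    skills: Dict[str, Skill] = field(default_factory=dict)
    # User-related information the agent maintains; here, just past requests.
    user_memory: List[str] = field(default_factory=list)

    def register(self, name: str, skill: Skill) -> None:
        """Make a skill available under a keyword."""
        self.skills[name] = skill

    def handle(self, request: str) -> str:
        """Record the request, then dispatch it to the chosen skill."""
        self.user_memory.append(request)
        name = choose_skill(request, self.skills)
        if name is None:
            return "Sorry, I cannot help with that yet."
        return self.skills[name](request)


# Example usage with one toy skill.
agent = PersonalAgent()
agent.register("alarm", lambda request: "Alarm set.")
reply = agent.handle("Set an alarm for 7am")
unknown = agent.handle("Book a flight to Paris")
```

In a real agent the keyword match would be replaced by a call to an LLM that selects and parameterizes skills. The sketch is only meant to show how the model, the skills, and the user memory relate to one another.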

Challenges to Capability, Efficiency, and Security

Although LLMs can, in principle, significantly enhance IPAs, the integration is not without challenges. The paper groups the technical challenges under capability, efficiency, and security, and argues that addressing them is essential for realizing the potential of Personal LLM Agents. On the capability side, the agents depend on task execution, context sensing, and memorization. On the efficiency side, they still require optimizations such as model compression, kernel acceleration, and better energy efficiency before they are practical to run. The paper further points out that security and privacy guarantees, ranging from data confidentiality to system integrity, should be an intrinsic part of the design.

Future Directions and Considerations for Personal LLM Agents

The pursuit of integrating LLMs with IPAs demands a multi-faceted approach, encompassing not just the advancement of models but also ensuring their societal acceptability and ethical compliance. Continuous innovation in model design, system architecture, and security protocols is pivotal. Future research should consider the delicate balance between efficiency and capability while ensuring robustness against privacy intrusions and malicious attacks.

In summary, Personal LLM Agents represent a pivotal shift in personal computing paradigms, coupling the advanced cognitive capabilities of LLMs with the intimate personalization requirements of IPAs. As they evolve, they hold the potential to transform mundane tasks into enriched experiences, allowing individuals to focus on what genuinely matters to them.