Introduction to Generative LLMs in Social Sciences

Social scientists have begun to use generative LLMs for a variety of tasks. These models, including the widely known GPT family (for example, ChatGPT and GPT-4), are applied to tasks ranging from sentiment analysis to identifying key themes in text. Proprietary models that can only be reached through an API, however, raise privacy and reproducibility concerns, because the data must be sent to a third party and the model behind the API is outside the researcher's control. Open models that can run on independent devices offer a promising alternative. Running an open model locally helps protect data privacy and improves reproducibility, both of which are crucial for rigorous scholarly work.

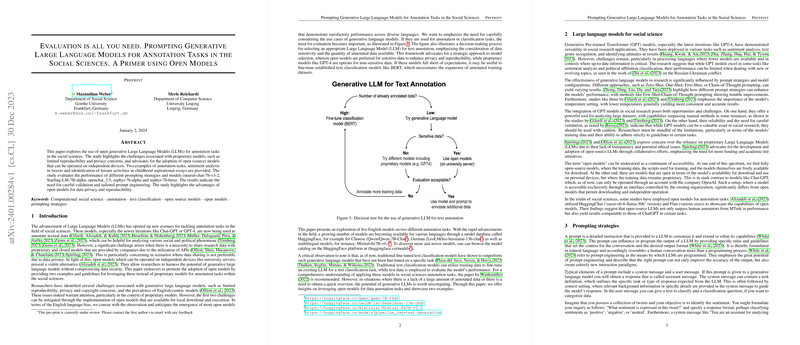

Utilizing Open Models for Annotation

The focus here is on open models, which make new research possible within the constraints of data privacy. Unlike proprietary LLMs, they can run independently on devices such as university servers, so social science research can proceed without compromising data security. An open-model approach also gives researchers control over their data, its management, and its use. Sensitive information stays out of reach of external commercial interests, and the research process remains in the researchers' hands.

The analysis presents two examples in which open models carry out annotation tasks: sentiment analysis of tweets and recognition of leisure activities in children’s essays. The results obtained with models such as Starling-LM-7B-alpha and zephyr-7B variants point toward practical, scalable, and data-secure use of LLMs for social science annotation.
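
To make the annotation workflow concrete, the following is a minimal sketch of an annotation loop, not the setup used in the analysis. It assumes the locally hosted model is wrapped in a function that takes a prompt string and returns the generated text. The names `annotate`, `parse_label`, `stub_generate`, and `TEMPLATE`, as well as the three-label scheme, are hypothetical and chosen only for illustration.

```python
from typing import Callable, Iterable, List, Sequence

# Assumed label scheme for the tweet example; the source does not specify one.
LABELS = ("positive", "negative", "neutral")

# Hypothetical prompt template; {text} is replaced by the document to annotate.
TEMPLATE = (
    "Classify the sentiment of this tweet as positive, negative, or neutral.\n"
    "Tweet: {text}\n"
    "Sentiment:"
)


def parse_label(raw: str, labels: Sequence[str] = LABELS,
                fallback: str = "unparsed") -> str:
    """Map free-text model output to exactly one allowed label.

    Returns `fallback` when no label or more than one label is mentioned,
    so that ambiguous answers can be reviewed by hand.
    """
    text = raw.strip().lower()
    hits = [label for label in labels if label in text]
    return hits[0] if len(hits) == 1 else fallback


def annotate(texts: Iterable[str], generate: Callable[[str], str],
             template: str = TEMPLATE) -> List[str]:
    """Annotate each text by prompting the model and parsing its answer."""
    return [parse_label(generate(template.format(text=t))) for t in texts]


def stub_generate(prompt: str) -> str:
    """Stand-in for a locally hosted open model (purely illustrative)."""
    return "Negative." if "awful" in prompt else "Sentiment: positive"


predictions = annotate(["What an awful day", "Lovely weather"], stub_generate)
```

Because `generate` is just a callable, the stub can be swapped for any local inference backend without changing the loop, and the data never has to leave the machine on which the model runs.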

Importance of Prompt Engineering

In text annotation, prompt engineering is a vital part of the process. How a prompt is worded shapes the outcome and quality of a classification or annotation task. Common strategies include zero-shot prompting (instructions only), few-shot prompting (instructions plus a handful of labeled examples), and Chain-of-Thought prompting (asking the model to reason step by step before answering). Applied judiciously, these strategies can steer models toward more accurate and coherent outputs. Getting the most out of generative LLMs therefore involves a learning curve and some practice with prompt design.
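
The three prompting strategies can be illustrated with a short sketch. The task wording, the function names, and the example tweets below are assumptions made for illustration and are not the prompts used in the analysis.

```python
from typing import Iterable, Tuple

# Hypothetical task instruction shared by all three strategies.
TASK = "Classify the sentiment of the tweet as positive, negative, or neutral."


def zero_shot(text: str) -> str:
    """Instruction only, with no examples."""
    return f"{TASK}\n\nTweet: {text}\nLabel:"


def few_shot(text: str, examples: Iterable[Tuple[str, str]]) -> str:
    """Instruction plus a few labeled examples placed before the target text."""
    shots = "\n\n".join(f"Tweet: {t}\nLabel: {label}" for t, label in examples)
    return f"{TASK}\n\n{shots}\n\nTweet: {text}\nLabel:"


def chain_of_thought(text: str) -> str:
    """Ask the model to reason step by step before giving the final label."""
    return (
        f"{TASK}\n"
        "First explain your reasoning step by step, then give the final "
        "answer on a new line as 'Label: <label>'.\n\n"
        f"Tweet: {text}\nReasoning:"
    )


# Made-up examples, used only to show the resulting prompt layout.
demo_examples = [
    ("I love this new phone", "positive"),
    ("Worst service ever", "negative"),
]
demo_prompt = few_shot("The train was on time today", demo_examples)
```

With Chain-of-Thought prompting the answer is embedded in a longer response, so the final label has to be extracted from the `Label:` line rather than read off directly.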

Evaluating Model Performance

Performance evaluation is indispensable for gauging the utility and reliability of LLMs. Measures such as kappa statistics and F1 scores quantify the agreement between a model’s predictions and a gold-standard set of annotations. In both example scenarios (analyzing sentiment in tweets and detecting mentions of leisure activities in essays), the tailored prompts led to varying degrees of success across models. The results underscore the importance of thorough validation. Where agreement is low, a different model or additional training data for fine-tuning may be needed.
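
As a worked illustration of these measures, the sketch below computes Cohen's kappa and per-label F1 in plain Python. Cohen's kappa is assumed here as the kappa statistic, since the text does not name a specific variant. The `gold` and `pred` lists are made-up toy data, not results from the analysis.

```python
from collections import Counter
from typing import Dict, Sequence


def cohen_kappa(gold: Sequence[str], pred: Sequence[str]) -> float:
    """Cohen's kappa: agreement between two label sequences, corrected for chance."""
    n = len(gold)
    observed = sum(g == p for g, p in zip(gold, pred)) / n
    gold_counts, pred_counts = Counter(gold), Counter(pred)
    labels = set(gold) | set(pred)
    # Chance agreement: probability that both sequences pick the same label
    # if labels were drawn independently from each sequence's own distribution.
    expected = sum(gold_counts[l] * pred_counts[l] for l in labels) / (n * n)
    if expected == 1.0:
        return float("nan")  # kappa is undefined when only one label is ever used
    return (observed - expected) / (1 - expected)


def f1_per_label(gold: Sequence[str], pred: Sequence[str]) -> Dict[str, float]:
    """F1 score for each label, treating that label as the positive class."""
    scores = {}
    for label in sorted(set(gold) | set(pred)):
        tp = sum(g == label and p == label for g, p in zip(gold, pred))
        fp = sum(g != label and p == label for g, p in zip(gold, pred))
        fn = sum(g == label and p != label for g, p in zip(gold, pred))
        denom = 2 * tp + fp + fn
        scores[label] = 2 * tp / denom if denom else 0.0
    return scores


def macro_f1(gold: Sequence[str], pred: Sequence[str]) -> float:
    """Unweighted mean of the per-label F1 scores."""
    scores = f1_per_label(gold, pred)
    return sum(scores.values()) / len(scores)


# Toy data for illustration only.
gold = ["pos", "neg", "neu", "pos", "neg", "pos"]
pred = ["pos", "neg", "pos", "pos", "neu", "pos"]

kappa = cohen_kappa(gold, pred)
f1_scores = f1_per_label(gold, pred)
```

Reporting per-label F1 alongside kappa helps show whether a model fails on one category in particular, which a single aggregate score can hide.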

In sum, open LLMs can be a valuable tool for text annotation in the social sciences, provided that they are coupled with thoughtful prompt engineering and rigorous evaluation. The same approach may extend to many other data-intensive research tasks.