Introduction

Large language models (LLMs) have become central to recent AI progress, and applying them to video has opened an interdisciplinary field that combines language and imagery for video understanding. This comes as online video has become the dominant form of media consumption, straining traditional analysis technologies. Video LLMs (Vid-LLMs) are distinguished by their ability to capture spatial-temporal context and infer knowledge from it, which drives progress across video understanding tasks.

Foundations and Taxonomy

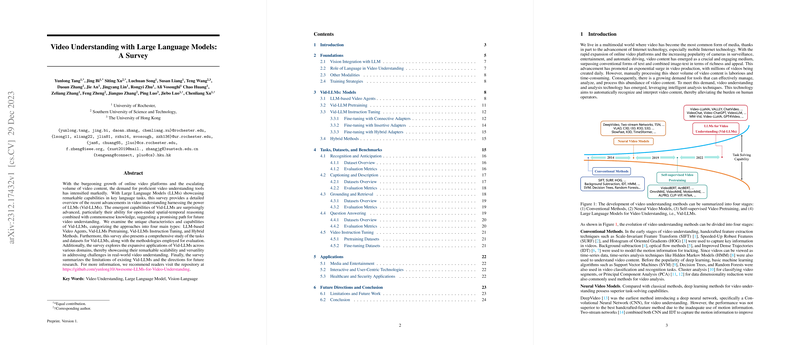

Vid-LLMs build on a long history of video understanding that runs from conventional methods through neural network models and self-supervised pretraining to, most recently, the integration of the broad contextual understanding that LLMs offer. They continue to improve and fall into four main structural types: LLM-based video agents, pretraining methods, instruction tuning, and hybrid approaches.

The Role of Language and Adapters in Video Understanding

Language, the foundation of LLMs, plays a dual role in Vid-LLMs: encoding and decoding. Adapters connect the video modality to the LLM by translating inputs from different modalities into a common language domain. They range from simple projection layers to complex cross-attention mechanisms, and they are central to integrating video content with LLMs efficiently.
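The simplest adapter named above, a projection layer, can be sketched in a few lines. This is a minimal illustration, not the implementation of any particular Vid-LLM: the names `make_projection` and `project`, the dimensions, and the random initialization are all assumptions made for the example, and a real adapter would be a trained layer in a deep learning framework.

```python
import random


def make_projection(d_visual, d_llm, seed=0):
    """Create a (hypothetical) untrained projection: weight is d_llm x d_visual."""
    rng = random.Random(seed)
    weight = [[rng.uniform(-0.1, 0.1) for _ in range(d_visual)]
              for _ in range(d_llm)]
    bias = [0.0] * d_llm
    return weight, bias


def project(features, weight, bias):
    """Map each visual feature vector into the LLM embedding space.

    features: list of vectors, one per visual token, each of length d_visual.
    Returns a list of vectors of length d_llm (one per visual token).
    """
    d_visual = len(weight[0])
    projected = []
    for vec in features:
        if len(vec) != d_visual:
            raise ValueError("feature size does not match projection input size")
        # y = W x + b, computed row by row
        projected.append([sum(w * x for w, x in zip(row, vec)) + b
                          for row, b in zip(weight, bias)])
    return projected


# Example: two visual tokens of size 4 mapped to an assumed LLM width of 6.
weight, bias = make_projection(d_visual=4, d_llm=6)
visual_tokens = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.7, 0.8]]
llm_tokens = project(visual_tokens, weight, bias)
```

After projection, the visual tokens have the same width as the LLM's text embeddings, so they can be placed in the same input sequence. Cross-attention adapters pursue the same goal with a learned attention step in place of a single matrix multiplication.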

Vid-LLMs: Models in Action

Recent Vid-LLMs have been applied to tasks such as video captioning and action recognition, among others. These models combine visual encoders with adapters, both to generate detailed text descriptions and to answer intricate questions about video content. This marks a major shift from classical methods, which focused narrowly on classifying videos into predefined labels, toward more versatile approaches that can process hundreds of frames for nuanced generation and contextual comprehension.
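Processing hundreds of frames usually starts with choosing which frames to pass to the visual encoder. The text does not specify a selection strategy, so the sketch below assumes one common option, uniform temporal sampling, where the video is split into equal segments and the middle frame of each segment is kept. The function name `sample_frame_indices` is hypothetical.

```python
def sample_frame_indices(total_frames, num_samples):
    """Pick num_samples frame indices spread evenly across a video.

    Assumed strategy: divide the video into num_samples equal segments
    and take the frame at the center of each segment.
    """
    if total_frames <= 0 or num_samples <= 0:
        raise ValueError("total_frames and num_samples must be positive")
    if num_samples >= total_frames:
        # Short video: keep every frame.
        return list(range(total_frames))
    segment = total_frames / num_samples
    return [int(segment * i + segment / 2) for i in range(num_samples)]


# Example: keep 4 frames from a 100-frame clip.
indices = sample_frame_indices(100, 4)  # [12, 37, 62, 87]
```

The selected frames would then go through the visual encoder and the adapter before reaching the LLM. Other strategies, such as sampling more densely around scene changes, trade simplicity for better coverage of short events.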

Evaluating Performance and Applications

Video understanding centers on several core tasks: recognition, captioning, grounding, retrieval, and question answering. A wide range of datasets supports these tasks, from user-generated content to finely annotated movie descriptions. The evaluation metrics used to assess Vid-LLMs are borrowed from both computer vision and NLP and include accuracy, BLEU, and METEOR, among others.
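To make two of these metrics concrete, the sketch below computes accuracy for a recognition-style task and a simplified sentence-level BLEU for a captioning-style task. It is an illustration under stated assumptions: a single reference caption, whitespace tokenization, and no smoothing. Published results normally rely on established evaluation toolkits with corpus-level statistics and multiple references. The example labels and captions are invented.

```python
import math
from collections import Counter


def accuracy(predictions, labels):
    """Fraction of predictions that exactly match their labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        return 0.0
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def ngram_counts(tokens, n):
    """Count the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, reference, max_n=4):
    """Simplified BLEU: clipped n-gram precision with a brevity penalty."""
    cand, ref = candidate.split(), reference.split()
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngram_counts(cand, n)
        ref_counts = ngram_counts(ref, n)
        total = sum(cand_counts.values())
        # Clip each candidate n-gram count by its count in the reference.
        clipped = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        if total == 0 or clipped == 0:
            return 0.0  # without smoothing, any zero precision gives BLEU 0
        log_precisions.append(math.log(clipped / total))
    # Penalize candidates that are shorter than the reference.
    if len(cand) > len(ref):
        brevity = 1.0
    else:
        brevity = math.exp(1 - len(ref) / len(cand))
    return brevity * math.exp(sum(log_precisions) / max_n)


# Invented examples: action labels and a generated caption.
acc = accuracy(["run", "jump", "swim"], ["run", "jump", "dive"])
score = bleu("a man is cooking pasta in a kitchen",
             "a man is cooking pasta in the kitchen")
```

Accuracy suits tasks with a single correct label, while BLEU rewards word-sequence overlap with a reference and so fits generated text. METEOR additionally accounts for stems and synonyms, which this sketch does not attempt.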

Future Trajectories and Current Limitations

Despite remarkable progress, challenges remain. Pressing issues include fine-grained understanding, handling long videos, and ensuring that model responses genuinely reflect the video content rather than hallucinating. Applications of advanced Vid-LLMs span domains from media and entertainment to healthcare and security, which highlights their potential across industries. As research advances, mitigating hallucination and improving multi-modal integration are identified as promising directions for extending the capabilities and applications of Vid-LLMs.

In summary, Vid-LLMs are poised to substantially change video understanding, with growing task-solving capabilities that address the rapidly increasing volume of video content. They promise to turn video analysis from a labor-intensive manual process into a largely automated one driven by AI.