Overview of "Making LLMs A Better Foundation For Dense Retrieval"

The paper, "Making LLMs A Better Foundation For Dense Retrieval," proposes LLaRA (LLM adapted for dense RetrievAl), a method for adapting LLMs to dense retrieval. It responds to a mismatch: LLMs are pre-trained on generative tasks, whose working pattern differs from what dense retrieval needs, namely representing a whole text as a single embedding.

Methodology: LLaRA

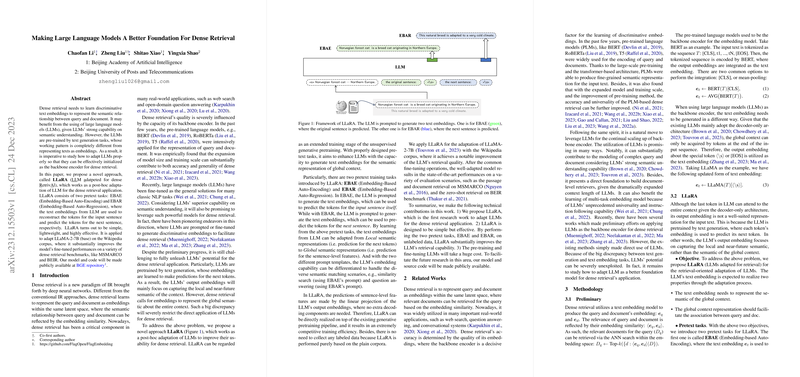

The central contribution of this work is LLaRA, a post-hoc adaptation step applied to an already pre-trained LLM. It introduces two pretext tasks, Embedding-Based Auto-Encoding (EBAE) and Embedding-Based Auto-Regression (EBAR), which train the LLM so that the embedding it produces for a text shifts from local semantics (suited to predicting the next token during text generation) to the global semantics of the whole text, which is what dense retrieval needs.

- EBAE (Embedding-Based Auto-Encoding): The LLM is prompted to produce an embedding from which the original input sentence can be reconstructed, so the embedding must capture the semantics of the entire input.

- EBAR (Embedding-Based Auto-Regression): The embedding is instead used to predict the following sentence, which trains it to represent related content rather than only the input itself, closer to the relationship between a query and a relevant passage that retrieval relies on.
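To make the two objectives concrete, here is a minimal Python sketch under stated assumptions. `make_pretext_pairs` shows how both tasks derive their targets from unlabeled consecutive sentences: EBAE targets the input itself, EBAR the next sentence. `bag_of_words_loss` assumes, as a simplification, that the embedding scores target tokens through a single linear projection over the vocabulary and treats the target as an unordered bag of words. The function names, the toy projection matrix, and this exact loss form are illustrative assumptions, not the paper's code.

```python
import math
from typing import List, Tuple


def make_pretext_pairs(sentences: List[str]) -> List[Tuple[str, str, str]]:
    """Turn consecutive unlabeled sentences into (task, input, target) triples.

    EBAE: the target is the input sentence itself (reconstruction).
    EBAR: the target is the sentence that follows it (next-sentence prediction).
    """
    pairs = []
    for i, sentence in enumerate(sentences):
        pairs.append(("EBAE", sentence, sentence))
        if i + 1 < len(sentences):
            pairs.append(("EBAR", sentence, sentences[i + 1]))
    return pairs


def bag_of_words_loss(embedding: List[float],
                      vocab_proj: List[List[float]],
                      target_ids: List[int]) -> float:
    """Mean negative log-likelihood of the target tokens, predicted from one embedding.

    ASSUMPTION: every target token is scored by the same softmax over the vocabulary
    (logit_v = embedding . vocab_proj[v]), i.e. an unordered bag of words rather
    than token-by-token decoding.
    """
    logits = [sum(e * w for e, w in zip(embedding, row)) for row in vocab_proj]
    m = max(logits)  # subtract the max for a numerically stable log-sum-exp
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return -sum(logits[t] - log_z for t in target_ids) / len(target_ids)


if __name__ == "__main__":
    corpus = ["Dense retrieval encodes text as vectors.",
              "Relevance is scored by vector similarity."]
    for task, source, target in make_pretext_pairs(corpus):
        print(task, "|", source, "->", target)
    # Hypothetical toy setup: 3-token vocabulary, 2-dimensional embeddings.
    proj = [[2.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
    print(bag_of_words_loss([1.0, 0.0], proj, [0]))  # token 0 has the highest logit
```

Because each loss in this sketch needs only one embedding and one softmax, no token-by-token decoding happens during training; the labels come entirely from the raw text.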

At retrieval time, only these embeddings are needed: queries and passages are each encoded in a single forward pass and matched by vector similarity, with no text decoding, which keeps retrieval efficient.
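Once texts are embedded, retrieval reduces to nearest-neighbour search over vectors. The sketch below is a brute-force stand-in that assumes cosine similarity (inner product is an equally common choice) and uses hypothetical pre-computed embeddings; `cosine` and `top_k` are illustrative names, not part of LLaRA.

```python
import math
from typing import Dict, List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query_emb: List[float],
          passage_embs: Dict[str, List[float]],
          k: int) -> List[Tuple[str, float]]:
    """Score every passage against the query and return the k best (linear scan)."""
    scored = [(pid, cosine(query_emb, emb)) for pid, emb in passage_embs.items()]
    # Highest score first; break ties by passage id so results are deterministic.
    scored.sort(key=lambda item: (-item[1], item[0]))
    return scored[:k]


if __name__ == "__main__":
    # Hypothetical embeddings; with LLaRA these would come from the adapted LLM.
    passages = {"p1": [1.0, 0.0], "p2": [0.0, 1.0], "p3": [1.0, 1.0]}
    print(top_k([1.0, 0.0], passages, k=2))
```

A production system would typically replace the linear scan with an approximate nearest-neighbour index, since scoring every passage does not scale to millions of documents.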

Experimental Results

The paper adapts LLaMA-2-7B with LLaRA on the Wikipedia corpus, then fine-tunes it for retrieval, and reports substantial improvements across several benchmarks:

- MS MARCO Passage Retrieval: LLaRA attains an MRR@10 of 43.1, outperforming several established methods, including those using knowledge distillation and large-scale models such as GTR-XXL.

- MS MARCO Document Retrieval: LLaRA reaches an MRR@100 of 47.5, outperforming established approaches such as PROP and ANCE.

- BEIR Zero-Shot Retrieval Benchmark: Across BEIR's heterogeneous retrieval tasks, evaluated without in-domain training, LLaRA reaches an average NDCG@10 of 56.1, surpassing various specialized models and indicating that its embeddings generalize well.
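The metrics above are computed per query and then averaged. This sketch assumes binary relevance for MRR and linear gains with a log2 discount for NDCG (some toolkits use exponential gains instead); it is an illustration of the standard definitions, not the paper's evaluation code. Reported numbers are averages scaled by 100, so an MRR@10 of 43.1 corresponds to a mean reciprocal rank of 0.431 over the top 10 results.

```python
import math
from typing import Dict, List, Sequence, Set


def mrr_at_k(ranked: Sequence[str], relevant: Set[str], k: int) -> float:
    """Reciprocal rank of the first relevant item within the top k, else 0."""
    for rank, doc_id in enumerate(ranked[:k], start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def ndcg_at_k(ranked: Sequence[str], gains: Dict[str, float], k: int) -> float:
    """Normalized DCG, assuming linear gains and a log2(rank + 1) discount."""
    dcg = sum(gains.get(d, 0.0) / math.log2(i + 2) for i, d in enumerate(ranked[:k]))
    ideal = sorted(gains.values(), reverse=True)[:k]
    idcg = sum(g / math.log2(i + 2) for i, g in enumerate(ideal))
    return dcg / idcg if idcg > 0 else 0.0


def mean_percent(per_query: List[float]) -> float:
    """Average over queries, scaled to the 0-100 range used in reported tables."""
    return 100.0 * sum(per_query) / len(per_query) if per_query else 0.0


if __name__ == "__main__":
    # Hypothetical rankings: the first relevant hit is at rank 2.
    print(mrr_at_k(["d3", "d1", "d2"], {"d1"}, k=10))
    # Graded relevance, with the more relevant document ranked second.
    print(ndcg_at_k(["d2", "d1"], {"d1": 2.0, "d2": 1.0}, k=10))
```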

Implications and Future Directions

The findings suggest that, with proper adaptation, LLMs can substantially strengthen dense retrieval systems and make them more robust across tasks. Importantly, the adaptation stage itself needs no labeled data: EBAE and EBAR are self-supervised, deriving their training targets from unlabeled text (each sentence and the sentence that follows it).

From a practical standpoint, LLaRA is a post-hoc step applied to an existing LLM rather than a new large-scale pre-training run, so it may lower the compute and resources needed to obtain a strong retrieval backbone, broadening its use in industry and academia. The approach could also encourage further research into adapting LLMs for NLP applications beyond text generation.

Future research might apply LLaRA to larger LLMs or combine it with fine-tuning on larger labeled datasets to further improve retrieval accuracy and broaden its scope. The approach could also be extended to more specialized retrieval tasks, opening avenues for complex real-world scenarios.

In conclusion, by addressing the mismatch between generative pre-training and embedding-based retrieval, this paper lays a foundation for more efficient and effective LLM-based retrievers, with promising implications for both research and practical information retrieval systems.