Enhancing Retrieval Models through LLM-Augmented Doc-Level Embedding

Introduction to LLM-Augmented Retrieval

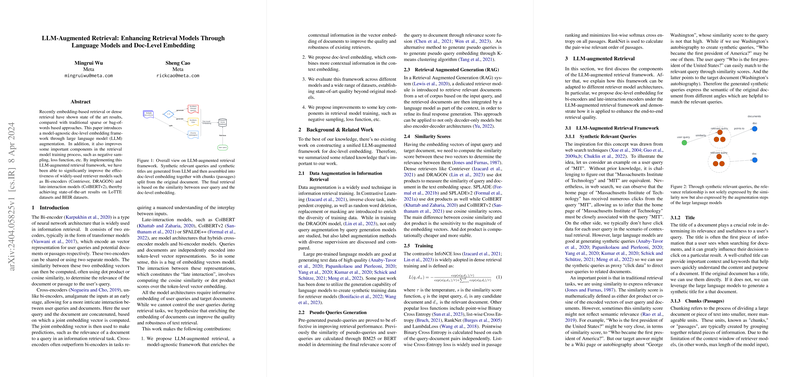

Recent work in information retrieval has largely focused on embedding-based (dense) retrieval, which has shown significant improvements over traditional sparse retrieval. LLM-augmented retrieval builds on this line of work: it uses large language models (LLMs) to enrich document embeddings with synthetic queries and titles, improving the performance of retriever models. The technique is model-agnostic and has been shown to work across architectures, including Bi-encoders and late-interaction models, on the LoTTE and BEIR datasets.

Key Contributions

The paper makes the following contributions:

- Model-Agnostic Framework: The proposed LLM-augmented retrieval is versatile, capable of enhancing the performance of various existing retriever models by enriching document embeddings with synthetically generated contextual information.

- Doc-Level Embedding: The approach combines a document's own content with synthetic queries and titles into a single document-level representation, which improves matching with user queries.

- Empirical Validation: The methodology is evaluated across different models and datasets, where it establishes new state-of-the-art results.

- Improved Training Components: The research also suggests refinements to the training process of retrieval models, such as changes to negative sampling and to the loss function, which together contribute to the improved performance of retrieval systems.

Framework and Methodology

At the core of this approach is the augmentation of document embeddings with synthetic queries and titles generated by LLMs. These added fields cover a broader range of the document's semantics, which helps retrieval models match the document to user queries.

- Synthetic Relevant Queries Generation: An LLM produces queries that the document can answer. These queries act as proxy data that guides the retriever models.

- Title Generation and Usage: If a document lacks a title, or the existing title is not descriptive enough, an LLM generates a fitting title that adds context to the document.

- Chunks (Passages): If the document exceeds the model's context window limit, it is split into smaller chunks so that all of its content is represented.
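The three fields above can be gathered into one record per document. The following is a minimal sketch, not the paper's implementation: `AugmentedDoc`, `split_into_chunks`, `augment_document`, the prompt wording, and the word-count limit are all hypothetical, and `generate` stands for any function that sends a prompt to an LLM and returns its text. For simplicity, the sketch only generates a title when none is given; judging whether an existing title is descriptive enough is left out.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class AugmentedDoc:
    """One document's fields: title, synthetic queries, and chunks (hypothetical type)."""
    title: str
    synthetic_queries: List[str]
    chunks: List[str] = field(default_factory=list)


def split_into_chunks(text: str, max_words: int = 64) -> List[str]:
    """Split text into consecutive chunks of at most max_words words.

    Assumption: word count stands in for the retriever's real token limit.
    """
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def augment_document(
    text: str,
    generate: Callable[[str], str],
    title: str = "",
    num_queries: int = 3,
    max_words: int = 64,
) -> AugmentedDoc:
    """Build the augmented record; `generate` is an assumed LLM wrapper."""
    if not title:
        # Only the "missing title" case is handled in this sketch.
        title = generate("Write a short title for this document:\n" + text).strip()
    raw = generate(
        f"Write {num_queries} questions this document answers, one per line:\n" + text
    )
    # Keep non-empty lines, capped at num_queries.
    queries = [line.strip() for line in raw.splitlines() if line.strip()][:num_queries]
    return AugmentedDoc(
        title=title,
        synthetic_queries=queries,
        chunks=split_into_chunks(text, max_words),
    )


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM call; returns canned text for demonstration."""
    if prompt.startswith("Write a short title"):
        return "Dense retrieval basics"
    return "What is dense retrieval?\nHow are documents embedded?"


doc = augment_document("word " * 150, fake_llm)
print(doc.title, len(doc.synthetic_queries), len(doc.chunks))
```

In practice `fake_llm` would be replaced by a call to an actual model, and the chunk limit would be measured in the retriever's own tokens rather than in words.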

For implementation, the paper adapts this framework to both Bi-encoders and Token-Level Late-Interaction Models, showing how the enhanced doc-level embeddings can be integrated into different retrieval architectures.
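To make the two integration styles concrete, here is a small sketch of how the extra fields could enter each scoring function. The combination rules are assumptions for illustration, since the text above does not spell them out: for a Bi-encoder, the score is taken as the best chunk similarity plus weighted similarities to the averaged synthetic-query embedding and to the title embedding; for a late-interaction model, the token embeddings of the synthetic queries and title are appended to the chunk's tokens before a ColBERT-style MaxSim. The function names and the weights `w_query` and `w_title` are hypothetical.

```python
from typing import List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def mean_vector(vectors: List[Vector]) -> List[float]:
    """Element-wise mean of a non-empty list of vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def bi_encoder_score(
    query: Vector,
    chunk_embs: List[Vector],
    synthetic_query_embs: List[Vector],
    title_emb: Vector,
    w_query: float = 1.0,   # hypothetical field weight
    w_title: float = 0.5,   # hypothetical field weight
) -> float:
    """Assumed doc-level score: best chunk match plus weighted field matches."""
    best_chunk = max(dot(query, c) for c in chunk_embs)
    query_field = mean_vector(synthetic_query_embs)
    return best_chunk + w_query * dot(query, query_field) + w_title * dot(query, title_emb)


def late_interaction_score(query_tokens: List[Vector], doc_tokens: List[Vector]) -> float:
    """MaxSim: each query token takes its best-matching document token; sum the results."""
    return sum(max(dot(q, d) for d in doc_tokens) for q in query_tokens)


# Bi-encoder example with toy 2-dimensional embeddings.
score = bi_encoder_score(
    query=[1.0, 0.0],
    chunk_embs=[[0.2, 0.9], [0.6, 0.1]],
    synthetic_query_embs=[[1.0, 0.0], [0.0, 1.0]],
    title_emb=[0.4, 0.0],
)
print(round(score, 3))

# Late-interaction example: append field tokens to the chunk's tokens.
query_tokens = [[1.0, 0.0], [0.0, 1.0]]
chunk_tokens = [[0.5, 0.5]]
field_tokens = [[1.0, 0.0]]  # tokens from synthetic queries and title
print(late_interaction_score(query_tokens, chunk_tokens))
print(late_interaction_score(query_tokens, chunk_tokens + field_tokens))
```

In both cases the underlying encoder is untouched, which is what makes the augmentation model-agnostic: only the set of vectors the query is compared against changes.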

Experiments and Results

The experiments show improved recall for both Bi-encoder models (Contriever, DRAGON) and the late-interaction model (ColBERTv2) on the LoTTE and BEIR datasets. In each case, LLM-augmented retrieval outperformed the original, unaugmented model and set new state-of-the-art results.
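For readers unfamiliar with the metric, recall at a cutoff k measures what fraction of a query's relevant documents appear among the top k retrieved results. The sketch below shows the standard definition; the function names, the document IDs, and the choice of k are illustrative, not values from the paper.

```python
from typing import Dict, Iterable, List, Set


def recall_at_k(ranked_ids: List[str], relevant_ids: Iterable[str], k: int) -> float:
    """Fraction of relevant documents that appear in the top k of the ranking."""
    relevant: Set[str] = set(relevant_ids)
    if not relevant:
        return 0.0
    return len(set(ranked_ids[:k]) & relevant) / len(relevant)


def mean_recall_at_k(
    rankings: Dict[str, List[str]],
    relevance: Dict[str, Set[str]],
    k: int,
) -> float:
    """Average recall@k over all queries that have a ranking."""
    scores = [recall_at_k(ranked, relevance.get(qid, set()), k)
              for qid, ranked in rankings.items()]
    return sum(scores) / len(scores) if scores else 0.0


# Toy data: two queries with made-up document IDs.
rankings = {"q1": ["d3", "d1", "d7", "d2"], "q2": ["d5", "d9", "d4", "d8"]}
relevance = {"q1": {"d1", "d2"}, "q2": {"d5"}}
print(mean_recall_at_k(rankings, relevance, k=3))
```

Comparing this number for a retriever with and without the augmented fields, on the same queries and relevance labels, is the kind of before-and-after comparison the results describe.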

Future Directions and Speculations

These results invite further work on optimizing the LLM-augmentation process for retrieval systems. Future work could refine the generation of synthetic queries and titles, explore more advanced ways of combining the fields into doc-level embeddings, and extend the framework to a broader range of retrieval models and architectures.

Conclusions

This paper presents an approach to improving information retrieval systems through LLM-augmented doc-level embedding. By using the generative capabilities of LLMs to enrich document representations, this model-agnostic framework significantly boosts the performance of existing retrieval models. The approach opens new directions for research in neural information retrieval, with the potential to make retrieval systems more effective and robust.