Background and Current Challenges

Large language models (LLMs) have become critical tools in many applications, from creative writing to a broad range of natural language processing tasks. While LLMs have traditionally run on powerful server-grade GPUs, there is a growing trend toward running them on personal computers equipped with consumer-grade GPUs. This shift is motivated by stronger data privacy, the ability to customize models, and lower costs. However, consumer GPUs have far less memory than is needed to hold the full parameter set of a large LLM, which makes efficient local LLM inference an important yet challenging problem.

PowerInfer: A Novel Inference Engine

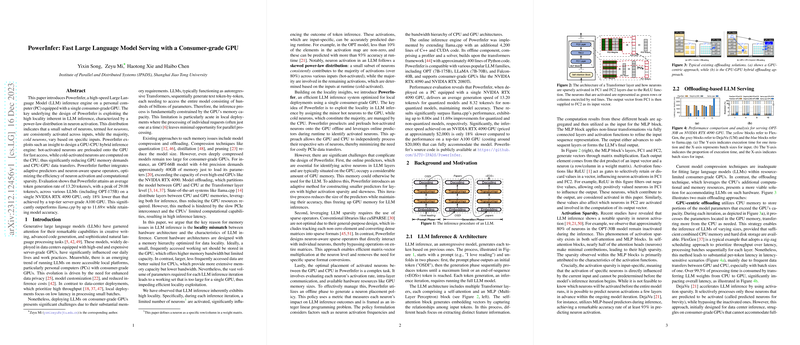

PowerInfer is a GPU-CPU hybrid inference engine built around the locality of neuron activations in LLM inference: a small set of 'hot' neurons is activated consistently across inputs, while the majority, the 'cold' neurons, are activated only for specific inputs. PowerInfer preloads hot neurons onto the GPU for fast access and computes cold neurons on the CPU. The design adds adaptive predictors that estimate which neurons will be activated for the current input, and neuron-aware sparse operators that compute only those individual neurons instead of operating on entire weight matrices. Together, these reduce GPU memory demand and avoid expensive data transfers between the GPU and CPU, enabling significantly faster inference while maintaining model accuracy.
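The interplay of predictor, hot/cold split, and neuron-aware sparse operator can be illustrated with a minimal Python sketch of a single ReLU feed-forward layer. Everything here is an assumption for illustration: the row-per-neuron weight layout, the hypothetical function names (`dense_ffn`, `hybrid_sparse_ffn`, `oracle_predictor`), and the use of an exact oracle in place of PowerInfer's adaptive predictors. The "GPU" and "CPU" are only simulated by recording which device each computed neuron would be assigned to; this is not PowerInfer's C++/CUDA implementation.

```python
from typing import Callable, List, Set, Tuple

Vector = List[float]
Matrix = List[Vector]  # hypothetical layout: one row per neuron


def relu(v: float) -> float:
    return v if v > 0.0 else 0.0


def dot(a: Vector, b: Vector) -> float:
    return sum(p * q for p, q in zip(a, b))


def dense_ffn(x: Vector, w_up: Matrix, w_down: Matrix) -> Vector:
    """Reference: evaluate every neuron, as a dense matrix product would."""
    out = [0.0] * len(w_down[0])
    for up_row, down_row in zip(w_up, w_down):
        h = relu(dot(up_row, x))
        for j, w in enumerate(down_row):
            out[j] += h * w
    return out


def oracle_predictor(w_up: Matrix) -> Callable[[Vector], Set[int]]:
    """Stand-in for an adaptive predictor: returns exactly the neurons whose
    pre-activation is positive. A learned predictor only estimates this set."""
    def predict(x: Vector) -> Set[int]:
        return {n for n, row in enumerate(w_up) if dot(row, x) > 0.0}
    return predict


def hybrid_sparse_ffn(x: Vector, w_up: Matrix, w_down: Matrix, hot: Set[int],
                      predict: Callable[[Vector], Set[int]]
                      ) -> Tuple[Vector, List[int], List[int]]:
    """Neuron-aware sparse evaluation: compute only predicted-active neurons,
    attributing hot ones to the GPU and cold ones to the CPU (both simulated)."""
    out = [0.0] * len(w_down[0])
    gpu_ids: List[int] = []
    cpu_ids: List[int] = []
    for n in sorted(predict(x)):
        (gpu_ids if n in hot else cpu_ids).append(n)
        h = relu(dot(w_up[n], x))
        for j, w in enumerate(w_down[n]):
            out[j] += h * w
    return out, gpu_ids, cpu_ids


# Toy layer with 4 neurons; neurons 0 and 1 are assumed hot (GPU-resident).
w_up = [[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]]
w_down = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
x = [2.0, -1.0]
out, gpu_ids, cpu_ids = hybrid_sparse_ffn(x, w_up, w_down, {0, 1},
                                          oracle_predictor(w_up))
print(out, gpu_ids, cpu_ids)  # [9.0, 12.0] [0] [3]
```

Because inactive neurons contribute zero after the ReLU, skipping them leaves the output unchanged when the prediction is exact. A learned predictor may occasionally miss an active neuron, so its accuracy directly affects how closely the sparse result matches the dense one.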

Implementation and Compatibility

The online inference engine is implemented by extending existing LLM frameworks with additional C++ and CUDA code, while the offline component uses a Python-based profiler and solver to classify neurons as hot or cold and to construct a neuron placement policy. PowerInfer's flexible configuration supports a wide range of LLM families and GPU types, from the high-end NVIDIA RTX 4090 to the older RTX 2080Ti. Notably, on consumer-grade GPUs PowerInfer achieves performance close to that of server-grade GPUs without sacrificing accuracy.
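The offline step can be sketched as follows, assuming a profiler that records the set of active neuron ids for each token and a fixed GPU memory budget. The names `profile_activations`, `place_neurons`, and `gpu_hit_rate` are hypothetical, and the greedy frequency-per-byte rule is a swapped-in simplification of PowerInfer's solver, not the policy it actually computes.

```python
from collections import Counter
from typing import Dict, Iterable, Set, Tuple


def profile_activations(traces: Iterable[Set[int]]) -> Counter:
    """Count how often each neuron fired across the profiled tokens."""
    counts: Counter = Counter()
    for active in traces:
        counts.update(active)
    return counts


def place_neurons(counts: Counter, num_neurons: int,
                  bytes_per_neuron: Dict[int, int],
                  gpu_budget_bytes: int) -> Tuple[Set[int], Set[int]]:
    """Greedy stand-in for the offline solver: fill the GPU budget with the
    neurons that fire most often per byte; everything else stays on the CPU."""
    order = sorted(range(num_neurons),
                   key=lambda n: (-counts[n] / bytes_per_neuron[n], n))
    gpu: Set[int] = set()
    used = 0
    for n in order:
        if used + bytes_per_neuron[n] <= gpu_budget_bytes:
            gpu.add(n)
            used += bytes_per_neuron[n]
    return gpu, set(range(num_neurons)) - gpu


def gpu_hit_rate(counts: Counter, gpu: Set[int]) -> float:
    """Fraction of all profiled activations served by GPU-resident neurons."""
    total = sum(counts.values())
    return sum(counts[n] for n in gpu) / total if total else 0.0


# Toy profile: neuron 0 fires on every token, the others only occasionally.
traces = [{0, 1}, {0, 2}, {0, 1}, {0, 3}]
counts = profile_activations(traces)
sizes = {n: 100 for n in range(4)}  # hypothetical bytes per neuron
hot, cold = place_neurons(counts, 4, sizes, gpu_budget_bytes=200)
print(sorted(hot), sorted(cold), gpu_hit_rate(counts, hot))  # [0, 1] [2, 3] 0.75
```

In this toy trace, keeping half of the neurons on the GPU serves 75% of all activations, which is the kind of imbalance a placement policy is meant to exploit.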

Evaluations and Insights

Performance evaluations show that PowerInfer outperforms existing alternatives, delivering substantial speedups in token generation rate for both quantized and non-quantized models. PowerInfer also maintains near-identical accuracy across a range of LLMs and tasks, so the efficiency gains do not come at the expense of output quality.

Conclusion

The paper presents PowerInfer, an inference system that exploits the power-law distribution of neuron activations to make local LLM deployment more efficient. By splitting the workload between GPU and CPU according to activation locality, PowerInfer demonstrates a practical way to run LLMs effectively on personal computers.
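Why a power-law activation pattern pays off can be quantified with a small worked example. It assumes an idealized Zipf-like distribution over 1,000 neurons, which is an illustration only: PowerInfer measures real activation statistics by profiling rather than assuming a distribution. The hypothetical helpers below compute the share of all activations produced by the top 10% of neurons. With exponent 0 (uniform) that share is exactly 10%; with exponent 1 it is already around 69%, which is why a small GPU-resident hot set can handle most of the work.

```python
from typing import List


def zipf_weights(num_neurons: int, exponent: float) -> List[float]:
    """Idealized activation frequencies: the neuron of rank r fires in
    proportion to 1 / r**exponent (exponent 0 gives a uniform distribution)."""
    return [1.0 / (rank ** exponent) for rank in range(1, num_neurons + 1)]


def top_fraction_coverage(num_neurons: int, exponent: float,
                          fraction: float) -> float:
    """Share of all activations produced by the most frequently activated
    `fraction` of neurons under the idealized distribution above."""
    weights = zipf_weights(num_neurons, exponent)  # sorted, highest first
    k = max(1, int(round(fraction * num_neurons)))
    return sum(weights[:k]) / sum(weights)


for s in (0.0, 1.0, 1.5):
    print(f"exponent={s}: top 10% of neurons cover "
          f"{top_fraction_coverage(1000, s, 0.10):.1%} of activations")
```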