Evaluating the Code Editing Capabilities of LLMs

The paper "Can It Edit? Evaluating the Ability of LLMs to Follow Code Editing Instructions" addresses a niche yet increasingly important area of study within AI-driven software engineering: code editing tasks performed by LLMs. Prior work has focused primarily on code synthesis tasks, which range from generating code from natural language to writing tests and code explanations. Recognizing this gap in the literature, the authors investigate how well LLMs follow specific instructions to modify pre-existing code.

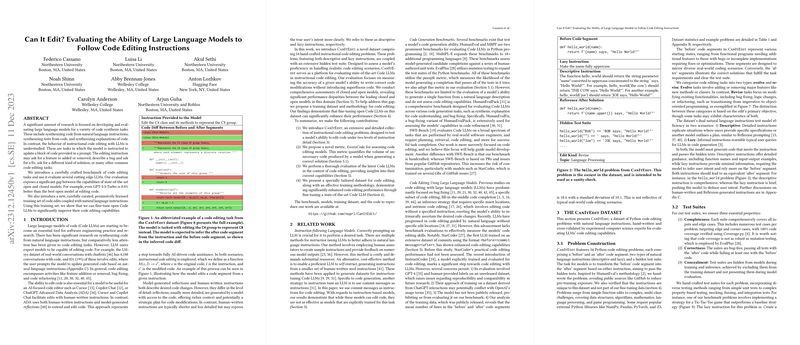

This research introduces a custom benchmark, CanItEdit, designed for instructional code editing, to rigorously evaluate the performance of various LLMs. In each task, a model receives a code segment paired with an instruction to add a feature, identify and resolve a bug, or apply an alternative solution. Through this lens, the authors expose disparities between major LLMs, notably showing that the proprietary GPT-3.5-Turbo surpasses the leading publicly accessible models in code editing performance.
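The task format described above can be illustrated with a small sketch. This is not the benchmark's actual harness: the `EditProblem` class, its field names, the `passes` helper, and the toy `area` problem are all hypothetical, chosen only to show how an edited program might be checked against hidden tests.

```python
from dataclasses import dataclass


@dataclass
class EditProblem:
    """Hypothetical container for one instructional code editing task."""
    before: str       # original program shown to the model
    instruction: str  # natural-language description of the requested edit
    tests: str        # hidden test code; raises AssertionError on failure


def passes(problem: EditProblem, candidate: str) -> bool:
    """Run the candidate program, then the hidden tests, in one namespace."""
    namespace: dict = {}
    try:
        exec(candidate, namespace)      # define the edited program
        exec(problem.tests, namespace)  # run the tests against it
    except Exception:
        return False
    return True


# Toy bug-fix problem (illustrative only, not taken from the benchmark).
problem = EditProblem(
    before="def area(w, h):\n    return w + h\n",
    instruction="Fix the bug: area should multiply width by height.",
    tests="assert area(2, 3) == 6\nassert area(0, 5) == 0\n",
)

# A candidate edit such as a model might return.
fixed = "def area(w, h):\n    return w * h\n"

print(passes(problem, fixed))           # edited program
print(passes(problem, problem.before))  # unedited program
```

A real harness would run untrusted model output in a sandboxed subprocess with a timeout rather than calling `exec` in-process; the in-process version is used here only to keep the sketch short.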

The paper's main contributions are the CanItEdit benchmark, consisting of 105 curated code editing problems spanning various areas of computer science, and a new metric, ExcessCode, which quantifies the unnecessary code an LLM generates when it produces a correct edit. The authors also curate a permissively licensed dataset for training LLMs on these tasks and use it to narrow the performance gap between open and closed-source models. The resulting fine-tuned models, referred to as EditCoder, demonstrate markedly better code editing after targeted fine-tuning on this dataset.
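To make the idea behind ExcessCode concrete, here is a minimal sketch under a stated assumption: that excess is measured as the percentage of changed lines never executed by the test suite. The summary above does not give the exact formula, so this definition, the function names, and the toy `area` example are all illustrative rather than the authors' implementation.

```python
import difflib
import sys


def changed_lines(before: str, after: str) -> set[int]:
    """1-based line numbers in `after` that were inserted or replaced."""
    a, b = before.splitlines(), after.splitlines()
    changed: set[int] = set()
    for tag, _, _, j1, j2 in difflib.SequenceMatcher(None, a, b).get_opcodes():
        if tag in ("insert", "replace"):
            changed.update(range(j1 + 1, j2 + 1))
    return changed


def executed_lines(program: str, tests: str) -> set[int]:
    """Lines of `program` executed while running it followed by `tests`."""
    hit: set[int] = set()

    def tracer(frame, event, arg):
        # Record only lines that belong to the candidate program.
        if event == "line" and frame.f_code.co_filename == "<candidate>":
            hit.add(frame.f_lineno)
        return tracer

    namespace: dict = {}
    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        exec(compile(program, "<candidate>", "exec"), namespace)
        exec(compile(tests, "<tests>", "exec"), namespace)
    finally:
        sys.settrace(previous)  # restore whatever tracer was active before
    return hit


def excess_code(before: str, after: str, tests: str) -> float:
    """Percentage of changed, non-blank lines that the tests never execute."""
    lines = after.splitlines()
    changed = {n for n in changed_lines(before, after) if lines[n - 1].strip()}
    if not changed:
        return 0.0
    uncovered = changed - executed_lines(after, tests)
    return 100.0 * len(uncovered) / len(changed)


before = "def area(w, h):\n    return w + h\n"
after = (
    "def area(w, h):\n"
    "    if w < 0:\n"
    "        raise ValueError('negative width')\n"
    "    return w * h\n"
)
tests = "assert area(2, 3) == 6\n"

print(round(excess_code(before, after, tests), 1))
```

In this toy case the edit changes three lines, and the `raise` line is never reached by the tests, so one third of the changed lines counts as excess. The score is only meaningful for edits that already pass the tests, which matches the description of the metric as applying to correct code alterations.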

Their empirical evaluation finds that closed-source models like GPT-4 still lead in performance, surpassing the newly fine-tuned open models in task accuracy on complex code edits, as seen on the CanItEdit benchmark. However, models fine-tuned with task-specific data, like EditCoder, prove to be strong contenders, especially for structural code tasks. These results suggest that targeted data and training methodologies are pivotal in strengthening the editing abilities of open code LLMs.

The implications of this paper are multifaceted:

- Benchmarking: It provides a replicable standard for future assessments of LLMs in code editing, allowing for more direct comparisons of model capabilities.

- Training Paradigms: Insights from the dataset and fine-tuning strategies suggest that models originally built for code synthesis can improve substantially at code editing through dedicated datasets and careful instruction-tuning.

- Open vs. Closed Models: The findings indicate that improvements in open-source models could eventually reduce reliance on proprietary systems, with broad implications for accessibility and further innovation in AI-driven code platforms.

As LLMs evolve, these findings motivate further exploration of code editing with carefully curated training data, providing a springboard for more accessible, AI-assisted software development. This work highlights the potential of LLMs not only to create new code but also to improve and refactor existing codebases, which makes them useful across the software development cycle. Future work could integrate such models into IDEs, further streamlining the coding process and enhancing developer productivity.