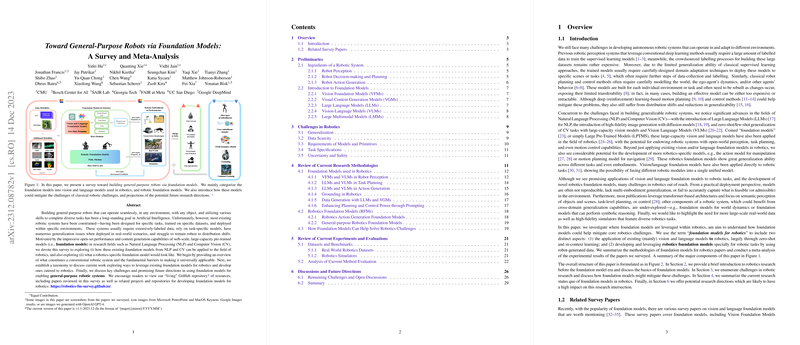

Evolution of Robotics with Foundation Models

Introduction to Foundation Models in Robotics

The field of robotics has long focused on systems built for particular tasks, trained on specific datasets, and limited to defined environments. These systems often suffer from data scarcity, poor generalization, and a lack of robustness in real-world scenarios. Encouraged by the success of foundation models in natural language processing (NLP) and computer vision (CV), researchers are now exploring their application to robotics. Foundation models such as large language models (LLMs), Vision Foundation Models (VFMs), and others have qualities that align well with the vision of general-purpose robots: machines that can operate across varied tasks and environments without extensive retraining.

Robotics and Foundation Models

Robotics systems comprise several core functionalities, including perception, decision-making and planning, and action generation. Each of these presents its own challenges. For example, perception systems need varied data to understand scenes and objects, while planning and control must adapt to new environments. Foundation models are being brought into this domain to apply their strong generalization and learning abilities to these hurdles, potentially easing the path toward adaptable and intelligent robotic systems.
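The three functionalities named above can be pictured as a simple pipeline. The following is a minimal sketch, not any particular system's design: the names `Observation`, `perceive`, `plan`, `act`, and `run_pipeline` are hypothetical, and each stage is a stub marking where a foundation model (for example a VFM for perception or an LLM for planning) might be plugged in.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Observation:
    """Hypothetical container for what the perception stage extracts."""
    objects: List[str]


def perceive(raw_scene: str) -> Observation:
    # Stub perception: treat a comma-separated string as the "scene".
    # A real system might call a vision foundation model here.
    names = [name.strip() for name in raw_scene.split(",") if name.strip()]
    return Observation(objects=names)


def plan(obs: Observation, goal: str) -> List[str]:
    # Stub planner: emit pick/place steps only if the goal object was perceived.
    # A real system might prompt an LLM with the goal and the observation.
    if goal not in obs.objects:
        return []
    return [f"pick {goal}", f"place {goal}"]


def act(steps: List[str], execute: Callable[[str], None]) -> int:
    # Stub action generation: forward each symbolic step to a low-level executor.
    for step in steps:
        execute(step)
    return len(steps)


def run_pipeline(raw_scene: str, goal: str) -> List[str]:
    # Chain the three stages and record which steps were executed.
    executed: List[str] = []
    act(plan(perceive(raw_scene), goal), executed.append)
    return executed
```

Keeping the stages behind plain function boundaries is one way to swap a hand-written component for a learned one without touching the rest of the pipeline; whether that separation holds in practice depends on the system.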

Addressing Core Robotics Challenges

Foundation models show particular promise on several classical challenges in robotics:

- Generalization: Taking cues from the human brain's modularity and the adaptability seen in nature, foundation models offer a promising route to achieve a similar level of function-centric generalization in robotics.

- Data Scarcity: Through the ability to generate synthetic data and learn from limited examples, foundation models are positioned to tackle the constraints imposed by the requirement for large and diverse datasets.

- Model Dependency: Model-agnostic foundation models can reduce the reliance on meticulously crafted models of the environment and robot dynamics.

- Task Specification: Foundation models open up avenues for natural and intuitive ways of specifying goals for robotic tasks, such as through language, images, or code.

- Uncertainty and Safety: Ensuring safe operation and managing uncertainty remain underexplored, but these are areas where foundation models could potentially contribute more rigorous frameworks.

Research Methodologies and Evaluations

Numerous studies have explored applying foundation models to various tasks, leading to several observations:

- Task Focus: There is a notable skew toward general pick-and-place tasks. Translating text to motion, particularly with LLMs, has been explored less, especially for complex tasks such as dexterous manipulation.

- Simulation and Real-World Data: The balance between simulations and real-world data is critical. Robust simulators enable vast data generation, yet may lack the diversity and richness of real-world data, highlighting the need for ongoing efforts in both areas.

- Performance and Benchmarking: Foundation models are being tested on increasingly diverse tasks, but a unified approach to performance measurement and benchmarking has yet to be developed.
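One small piece of the benchmarking problem, reporting per-task success rates in a comparable form, can be sketched as follows. This is an illustrative assumption rather than an established benchmark protocol: the `success_rates` helper, the task names, and the trial outcomes are all made up for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def success_rates(trials: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Return the fraction of successful trials for each task name."""
    totals: Dict[str, int] = defaultdict(int)
    wins: Dict[str, int] = defaultdict(int)
    for task, succeeded in trials:
        totals[task] += 1
        if succeeded:
            wins[task] += 1
    return {task: wins[task] / totals[task] for task in totals}


# Made-up outcomes, purely for illustration.
example_trials = [
    ("pick_and_place", True),
    ("pick_and_place", True),
    ("pick_and_place", False),
    ("pick_and_place", True),
    ("dexterous_manipulation", False),
    ("dexterous_manipulation", True),
]
rates = success_rates(example_trials)
```

Reporting rates per task rather than as a single pooled number keeps a skew toward pick-and-place trials from hiding weaker results on harder tasks.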

Future Directions in Foundation Models and Robotics

Looking ahead, several areas are ripe for exploration:

- Enhanced Grounding: Tightening the connection between model outputs and physical robot actions remains a fruitful avenue for research.

- Continual Learning: Adapting to changing environments and tasks without forgetting past learning remains an open problem for robotic foundation models.

- Hardware Innovations: Complementary hardware innovations are necessary to enrich the data available for training foundation models and to expand the conceptual learning space.

- Cross-Embodiment Adaptability: Learning control policies that are adaptable to diverse physical embodiments is a critical step toward creating more universal robotic systems.

The application of foundation models to robotics holds the promise of achieving a higher level of autonomy, adaptability, and intelligence in robotic systems. As the field progresses, the blend of robust AI models and robotics could usher in a new era of smart, versatile machines ready to meet the complexities and unpredictability of the real world.