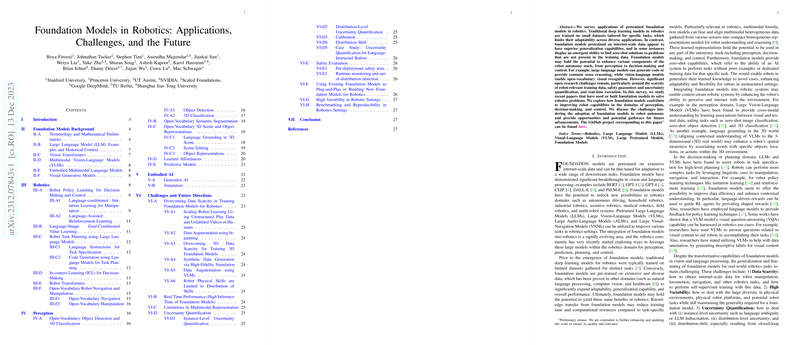

Understanding Foundation Models in Robotics

Introduction to Foundation Models

Foundation models are machine learning models pre-trained on massive, diverse datasets, which lets them learn general-purpose representations and skills. They can then be fine-tuned or adapted to a wide range of downstream tasks. Examples include BERT for text understanding and GPT for text generation, as well as models such as CLIP and DALL-E that span both vision and language. In robotics, these models promise improvements in perception, decision-making, control, and task planning: they can generate code, supply common-sense reasoning, and recognize visual concepts in an open-ended way rather than from a fixed label set. Realizing this potential in robotics also raises distinct challenges, notably scarce training data, safety, uncertainty quantification, and real-time performance.
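Open-ended visual recognition of this kind can be sketched with the zero-shot scheme popularized by CLIP: embed the image and one text prompt per candidate label in a shared space, then pick the label whose embedding is most similar to the image's. The sketch below assumes the embeddings are already computed. The 3-dimensional vectors and the helper names (`normalize`, `zero_shot_classify`) are hypothetical stand-ins for real encoder outputs, and the fixed temperature of 0.01 is an assumption (CLIP learns its own scale during training).

```python
import math


def normalize(v):
    """Scale a vector to unit L2 norm so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def zero_shot_classify(image_emb, text_embs, temperature=0.01):
    """Score one image embedding against one text embedding per candidate label.

    Returns (best_label, probabilities). Labels are free-form strings, so the
    candidate set can change at run time without retraining.
    """
    img = normalize(image_emb)
    labels = list(text_embs)
    # Cosine similarity between the image and each label's text embedding.
    sims = [sum(a * b for a, b in zip(img, normalize(text_embs[lab]))) for lab in labels]
    # Numerically stable softmax over temperature-scaled similarities.
    logits = [s / temperature for s in sims]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = {lab: e / total for lab, e in zip(labels, exps)}
    best = max(probs, key=probs.get)
    return best, probs


# Hypothetical embeddings standing in for real image/text encoder outputs.
text_embs = {
    "a photo of a mug": [0.9, 0.1, 0.0],
    "a photo of a screwdriver": [0.0, 1.0, 0.2],
    "a photo of a sponge": [0.1, 0.0, 1.0],
}
image_emb = [0.8, 0.2, 0.1]

label, probs = zero_shot_classify(image_emb, text_embs)
print(label)  # prints: a photo of a mug
```

Because labels are plain strings, adding a new category only requires embedding one more text prompt; no retraining is involved.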

Applications and Advancements

Foundation models offer significant advancements for robotics in several areas:

- Decision Making and Control:

- Robots can learn policies from human demonstrations, including unstructured play data, which is easier to collect than curated, task-specific demonstrations.

- Robots can be trained to follow language instructions and reinforcement learning signals, and large language models such as GPT-3 can decompose a high-level instruction into a sequence of simpler sub-tasks the robot can execute.

- Perception Capabilities:

- Open-vocabulary object detection lets robots detect and label objects from categories outside any fixed training label set, specified through free-form text queries; models such as GLIP, OWL-ViT, and DINO improve object-level recognition.

- Semantic segmentation assigns a semantic label to every pixel in an image; open-vocabulary variants use vision-language models (rather than text-only LLMs) to match pixels against text labels, aiding tasks like scene understanding and navigation.

- Embodied AI and Generalist Agents:

- Research in embodied AI focuses on using foundation models to endow robots with versatile skills, such as navigation and task planning.

- Generalist agents are trained on many tasks, in simulation or in the real world, so that a single model can adapt across multiple scenarios and tasks.
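LLM-based task decomposition, listed above under decision making and control, can be sketched as prompting a model for a step-by-step plan restricted to a known skill library, then checking the parsed plan before anything is executed. Everything here is hypothetical: `SKILLS`, the prompt format, and `fake_llm` (a canned completion standing in for a real model call such as GPT-3) are illustrative assumptions, not any particular system's API.

```python
import re

# Hypothetical skill library: primitives a low-level controller is assumed to execute.
SKILLS = {"go_to", "open", "close", "pick", "place"}

# Matches lines like "3. pick(soda can)" -> ("pick", "soda can").
STEP_RE = re.compile(r"^\s*\d+\.\s*(\w+)\((.*)\)\s*$")


def build_prompt(instruction: str, skills: set[str]) -> str:
    """Ask for a numbered plan that uses only the listed skills."""
    return (
        "Decompose the task into steps using only these skills: "
        + ", ".join(sorted(skills))
        + ".\nWrite one step per line as: <n>. <skill>(<argument>)\n"
        + f"Task: {instruction}\n"
    )


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM call; ignores the prompt and returns a canned completion."""
    return (
        "1. go_to(kitchen)\n"
        "2. open(fridge)\n"
        "3. pick(soda can)\n"
        "4. close(fridge)\n"
        "5. teleport(living room)\n"
        "6. place(soda can)\n"
    )


def parse_plan(completion: str) -> list[tuple[str, str]]:
    """Extract (skill, argument) pairs from numbered lines; ignore any other text."""
    steps = []
    for line in completion.splitlines():
        m = STEP_RE.match(line)
        if m:
            steps.append((m.group(1), m.group(2).strip()))
    return steps


steps = parse_plan(fake_llm(build_prompt("bring me a soda from the fridge", SKILLS)))
unknown = [step for step in steps if step[0] not in SKILLS]
if unknown:
    # Refuse to execute a partial plan; a real system might re-prompt or ask a human.
    print("plan rejected, unknown skills:", unknown)
else:
    for skill, arg in steps:
        print(f"execute {skill}({arg})")
```

Rejecting the whole plan, rather than silently dropping the unknown step, avoids executing a sequence whose later steps may depend on the missing one.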

Challenges in Robotic Integration

Incorporating foundation models into robotics comes with several challenges:

- Training Data Scarcity:

- Robotics-specific data is limited compared to the internet-scale text and image data used to train many foundation models.

- Techniques to tackle this issue include leveraging unstructured play data, data augmentation methods, and high-fidelity simulators.

- Uncertainty and Safety in Decision Making:

- Because foundation models can produce incorrect outputs, often with no sign that they are wrong, quantifying uncertainty and ensuring safety are crucial in robotic applications.

- Research efforts focus on uncertainty quantification methods that let a robot recognize when it is unsure and ask for help, making its operation more reliable.

- Real-Time Performance:

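- The high inference latency of large foundation models is a bottleneck for real-time robotic applications. When models run on remote servers, network latency and reliability add further delays and failure modes, so both computational efficiency and robust connectivity need further research.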

- Variability in Robotic Settings:

- Robots operate in diverse environments, with different physical bodies (embodiments), sensors, and tasks. General-purpose, cross-embodiment foundation models trained on data from a wide range of robots are therefore essential for broader applicability.

- Benchmarking and Reproducibility:

- The variation in simulation environments and hardware specifics makes benchmarking and reproducing results challenging. Open hardware initiatives and transparent experimental setups can help address this issue.
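The ask-for-help behavior described under uncertainty and safety can be implemented in several ways; one published approach applies conformal prediction to a language model's scores over multiple-choice candidate actions. The sketch below shows split conformal prediction under that framing. The function names, the choice of alpha = 0.2, and all probabilities are made-up illustrations, not outputs of any real model.

```python
import math


def conformal_threshold(cal_probs, cal_labels, alpha=0.2):
    """Split conformal calibration over multiple-choice action options.

    cal_probs[i] holds the model's probability for each candidate option on
    calibration task i, and cal_labels[i] is the index of the correct option.
    Under exchangeability, sets built with the returned threshold contain the
    correct option with probability at least 1 - alpha.
    """
    # Nonconformity score: how little probability the correct option received.
    scores = sorted(1.0 - p[y] for p, y in zip(cal_probs, cal_labels))
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        return 1.0  # too few calibration points: every option is kept
    return scores[k - 1]


def prediction_set(probs, qhat):
    """Indices of all options whose score 1 - p is within the threshold."""
    return [i for i, p in enumerate(probs) if 1.0 - p <= qhat]


def act_or_ask(probs, qhat):
    """Act only when exactly one option survives; otherwise ask a human."""
    options = prediction_set(probs, qhat)
    if len(options) == 1:
        return ("act", options[0])
    return ("ask_for_help", options)


# Made-up calibration data: probabilities over three candidate actions per task.
cal_probs = [
    [0.90, 0.05, 0.05], [0.10, 0.80, 0.10], [0.20, 0.10, 0.70],
    [0.60, 0.30, 0.10], [0.40, 0.55, 0.05], [0.50, 0.25, 0.25],
    [0.45, 0.45, 0.10], [0.05, 0.10, 0.85], [0.65, 0.20, 0.15],
    [0.30, 0.30, 0.40],
]
cal_labels = [0, 1, 2, 0, 1, 0, 1, 2, 0, 2]

qhat = conformal_threshold(cal_probs, cal_labels, alpha=0.2)
clear = act_or_ask([0.92, 0.05, 0.03], qhat)      # one confident option
ambiguous = act_or_ask([0.48, 0.47, 0.05], qhat)  # two plausible options
print(qhat, clear, ambiguous)
```

The guarantee is marginal, averaged over tasks drawn like the calibration set. A lower alpha yields larger prediction sets and therefore more requests for help.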

The Road Ahead

The integration of foundation models in robotics is an active area of development. Future research directions include creating reliable, real-time capable models, generating robotics-specific training data, and building safety mechanisms for autonomous operations. The ultimate goal is to develop versatile robots that can operate safely and effectively in complex real-world scenarios, leveraging the vast learning potential of foundation models.