Introduction

Improving large language models (LLMs) has traditionally relied on fine-tuning with human-generated data, which raises their performance on a range of tasks. However, the availability and quality of such data are limiting factors in model development. This paper investigates an alternative: fine-tuning on model-generated data filtered with scalar feedback, such as a binary indicator of whether the answer to a math problem is correct.
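
A minimal sketch of such a binary reward for math answers follows. It assumes final answers are compared as strings after light normalization, with numeric answers compared as exact fractions. The helper names and normalization rules are hypothetical and are not the paper's answer checker; real graders must also handle LaTeX, units, and symbolic equivalence.

```python
from fractions import Fraction


def normalize_answer(ans: str) -> str:
    """Hypothetical normalizer: trim whitespace, a trailing period and $ signs."""
    ans = ans.strip().rstrip(".").strip("$").strip()
    # Map numerically equal answers such as "0.5" and "1/2" to one canonical form.
    try:
        return str(Fraction(ans))
    except (ValueError, ZeroDivisionError):
        # Not a plain number: compare as text with spaces removed.
        return ans.replace(" ", "")


def binary_reward(predicted: str, reference: str) -> float:
    """Scalar feedback: 1.0 if the final answer matches the reference, else 0.0."""
    return 1.0 if normalize_answer(predicted) == normalize_answer(reference) else 0.0


print(binary_reward("0.5", "1/2"), binary_reward("41", "42"))
```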

Self-Training with ReST-EM

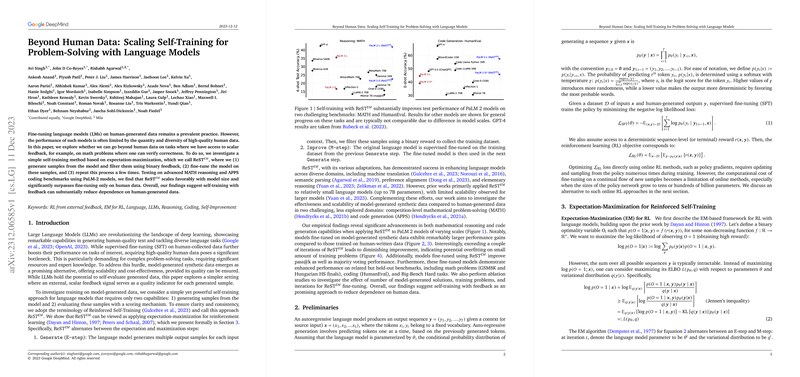

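The core of the paper is a self-training method, ReST-EM, derived from the well-established expectation-maximization (EM) algorithm. Each iteration has two steps. In the Generate step, the current model samples solutions to the training problems, and the samples are filtered using the provided feedback. In the Improve step, a model is fine-tuned on the filtered samples. The improved model then generates the data for the next iteration, so each cycle builds on the gains of the previous one. In the paper, the Improve step always fine-tunes the base pretrained model rather than the previous iteration's model, which limits overfitting and drift.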
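
The Generate/Improve loop can be sketched as follows. This is a toy illustration, not the paper's implementation: the "model" is a hypothetical table of weights over candidate answers, "fine-tuning" just upweights kept solutions on a copy of the base table, and the per-problem cap `max_per_problem` is an assumption added so that easy problems do not dominate the filtered dataset. The parts that mirror the description above are sampling from the current policy, keeping only reward-1 samples, and fine-tuning the base model each iteration.

```python
import random
from typing import Any, Callable, List, Tuple

Model = Any
Example = Tuple[str, str]  # (problem, solution)


def rest_em(base_model: Model, problems: List[str],
            sample: Callable[[Model, str, int, random.Random], List[str]],
            reward: Callable[[str, str], float],
            finetune: Callable[[Model, List[Example]], Model],
            iterations: int = 3, samples_per_problem: int = 8,
            max_per_problem: int = 4, seed: int = 0) -> Tuple[Model, List[int]]:
    rng = random.Random(seed)
    policy = base_model
    kept_per_iteration: List[int] = []
    for _ in range(iterations):
        # Generate (E-step): sample from the current policy, keep reward-1 solutions.
        dataset: List[Example] = []
        for problem in problems:
            samples = sample(policy, problem, samples_per_problem, rng)
            correct = [y for y in samples if reward(problem, y) == 1.0]
            dataset.extend((problem, y) for y in correct[:max_per_problem])
        # Improve (M-step): fine-tune the *base* model, not the previous policy.
        policy = finetune(base_model, dataset)
        kept_per_iteration.append(len(dataset))
    return policy, kept_per_iteration


# --- Hypothetical toy instantiation: single-digit addition problems. ---
CANDIDATES = [str(d) for d in range(10)]


def toy_sample(model, problem, n, rng):
    weights = [model[problem][c] for c in CANDIDATES]
    return rng.choices(CANDIDATES, weights=weights, k=n)


def toy_reward(problem, solution):
    a, b = problem.split("+")
    return 1.0 if int(solution) == int(a) + int(b) else 0.0


def toy_finetune(base_model, dataset):
    # Copy the base model, then upweight every kept (correct) solution.
    model = {p: dict(w) for p, w in base_model.items()}
    for problem, solution in dataset:
        model[problem][solution] += 1.0
    return model


base = {p: {c: 1.0 for c in CANDIDATES} for p in ["2+3", "4+4", "1+6"]}
policy, kept = rest_em(base, list(base), toy_sample, toy_reward, toy_finetune)
print("solutions kept per iteration:", kept)
```

Because only reward-1 samples enter the dataset, the toy policy can only shift probability toward correct answers; wrong answers keep their base weight.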

Applied to advanced math reasoning (the MATH benchmark) and code generation (APPS) with PaLM-2 models of different sizes, ReST-EM scales favorably with model size and significantly outperforms fine-tuning on human-written data alone. This suggests that LLM training could rely less on human-generated datasets.
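
For code generation, the binary feedback comes from executing generated programs against test cases. A minimal sketch follows, assuming function-style tests given as (arguments, expected return value) pairs; the function name and test format are hypothetical. It runs candidates with `exec` and no sandbox, which a real pipeline must never do with untrusted model output.

```python
from typing import List, Tuple


def unit_test_reward(candidate_src: str, entry_point: str,
                     test_cases: List[Tuple[tuple, object]]) -> float:
    """Return 1.0 only if the candidate defines `entry_point` and passes every test."""
    namespace: dict = {}
    try:
        # WARNING: executes arbitrary code; sandbox this in any real use.
        exec(candidate_src, namespace)
        fn = namespace[entry_point]
        passed = all(fn(*args) == expected for args, expected in test_cases)
        return 1.0 if passed else 0.0
    except Exception:
        # Syntax errors, missing functions and runtime errors all earn reward 0.
        return 0.0


test_cases = [((1, 2), 3), ((0, 0), 0)]
good = "def add(a, b):\n    return a + b\n"
buggy = "def add(a, b):\n    return a - b\n"
print(unit_test_reward(good, "add", test_cases), unit_test_reward(buggy, "add", test_cases))
```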

Preliminaries and Methodology

In essence, an autoregressive LLM generates a text sequence one token at a time, each token conditioned on the input and the tokens before it. Reinforcement learning (RL) approaches fine-tune such a model to maximize a reward function. However, online RL, which repeatedly alternates between sampling from the model and updating it, is computationally expensive for large models. ReST-EM addresses this by decoupling data generation from policy optimization, which makes it easier to scale.
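
Token-by-token prediction means the probability of a whole output factorizes by the chain rule, log p(y | x) = sum over t of log p(y_t | x, y_<t), and this sequence log-likelihood is what fine-tuning maximizes. The sketch below uses a hypothetical bigram table that conditions only on the previous token; a real LLM conditions on the entire prefix.

```python
import math
from typing import Dict, List

# Hypothetical toy "language model": next-token probabilities given the previous token.
BIGRAM: Dict[str, Dict[str, float]] = {
    "<bos>": {"the": 0.6, "a": 0.4},
    "the": {"answer": 0.5, "cat": 0.5},
    "a": {"answer": 0.2, "cat": 0.8},
    "answer": {"<eos>": 1.0},
    "cat": {"<eos>": 1.0},
}


def sequence_log_prob(tokens: List[str]) -> float:
    """Sum of per-token log-probabilities, including the end-of-sequence token."""
    logp, prev = 0.0, "<bos>"
    for tok in tokens + ["<eos>"]:
        logp += math.log(BIGRAM[prev][tok])
        prev = tok
    return logp


print(math.exp(sequence_log_prob(["the", "answer"])))  # 0.6 * 0.5 * 1.0
```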

The paper details the ReST-EM algorithm as an iteration of Generate and Improve steps that refine the policy, i.e., the language model that produces the output samples. The key idea is to fine-tune the model on its own samples weighted by a reward that reflects output quality. With a binary reward, this amounts to fine-tuning only on the samples judged correct, which can yield self-improvement over successive iterations.
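
The EM connection can be sketched in the standard "RL as inference" notation; the symbols below are our notation for this sketch, not necessarily the paper's. Let O=1 denote the event that an output is good, with p(O=1 | x, y) proportional to a non-negative reward r(x, y), and let D be the distribution of training problems. Jensen's inequality gives a lower bound on the log-probability of a good output; maximizing the bound over q gives the E-step, and maximizing it over θ gives the M-step, up to a per-prompt normalizing constant that the practical update drops.

$$
\begin{aligned}
\log p(O{=}1 \mid x) &= \log \sum_{y} p_\theta(y \mid x)\, p(O{=}1 \mid x, y) \\
&\ge \mathbb{E}_{q(y \mid x)}\!\left[\log p(O{=}1 \mid x, y) + \log p_\theta(y \mid x) - \log q(y \mid x)\right] \\[4pt]
\text{E-step:}\quad q^{t+1}(y \mid x) &\propto r(x, y)\, p_{\theta^{t}}(y \mid x) \\
\text{M-step:}\quad \theta^{t+1} &= \arg\max_{\theta}\; \mathbb{E}_{x \sim \mathcal{D}}\, \mathbb{E}_{y \sim p_{\theta^{t}}(y \mid x)}\!\left[r(x, y)\, \log p_\theta(y \mid x)\right]
\end{aligned}
$$

With a binary reward r(x, y) in {0, 1}, the M-step is ordinary maximum-likelihood fine-tuning on the correct samples drawn from the current model, which matches the Generate/Improve description above.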

Empirical Findings and Analysis

ReST-EM yields significant performance gains on challenging math problem-solving and code-generation benchmarks. Applying it for several iterations can add further improvements, but extra iterations can also overfit the training problems, so held-out performance should be monitored from one iteration to the next.
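
One simple way to act on that monitoring is to keep the iteration with the best held-out accuracy and stop once it stops improving. The sketch below is a generic early-stopping helper, not a procedure from the paper; the `patience` parameter and the accuracy values in the usage line are hypothetical, and index 0 can stand for the base model before any ReST-EM iteration.

```python
from typing import Sequence


def select_iteration(val_accuracy: Sequence[float], patience: int = 1) -> int:
    """Index of the best iteration, stopping after `patience` non-improving ones."""
    if not val_accuracy:
        raise ValueError("need at least one validation score")
    best_idx, best_acc, stale = 0, val_accuracy[0], 0
    for i, acc in enumerate(val_accuracy[1:], start=1):
        if acc > best_acc:
            best_idx, best_acc, stale = i, acc, 0
        else:
            stale += 1
            if stale >= patience:
                break  # held-out accuracy stopped improving: likely overfitting
    return best_idx


print(select_iteration([0.30, 0.35, 0.33, 0.40]))  # stops at index 2, returns 1
```

A larger `patience` tolerates a temporary dip at the cost of running more iterations.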

The paper further shows that the fine-tuned models improve not only on the tasks they were trained on but also on related held-out tasks. Ablation studies indicate that the effectiveness of ReST-EM grows with the amount of model-generated solutions and with the number of training problems. Together, these findings point to the method's potential as an efficient way to improve LLMs.

Conclusion

In conclusion, the work presents a strong technique for improving LLMs without heavy reliance on human data. ReST-EM stands out as a promising route for LLM advancement that could ease the bottleneck created by the scarcity of high-quality human data. The paper lays a foundation for future work on self-improving LLMs, aiming to further reduce dependence on human data and to improve computational efficiency.