An Overview of Reinforced Self-Training (ReST) for Language Modeling

The paper "Reinforced Self-Training (ReST) for Language Modeling" introduces an algorithm for aligning large language models (LLMs) with human preferences. ReST aims to make reinforcement learning from human feedback (RLHF) more efficient, and the paper studies it primarily on machine translation.

Methodological Framework

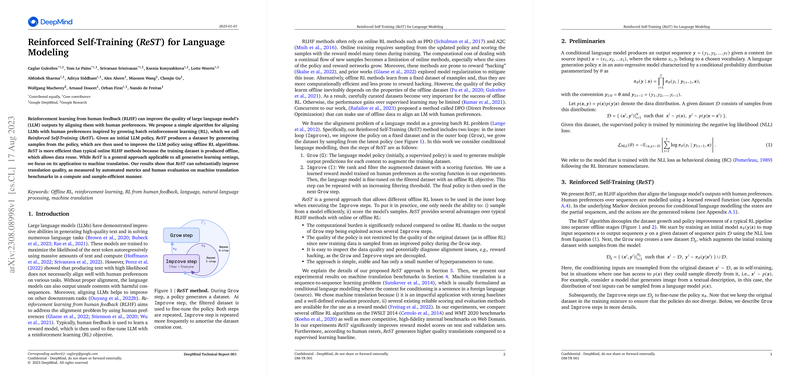

ReST is inspired by growing batch reinforcement learning (RL). It uses offline RL methods to optimize LLMs, addressing the high computational cost and the risk of reward hacking associated with standard online RL methods such as PPO and A2C. The algorithm alternates between two steps, Grow and Improve:

- Grow Step: The current LLM policy generates an augmented dataset by sampling output predictions for a set of inputs. Because generation happens offline, the samples can be stored and reused, whereas online RL must keep producing fresh samples throughout training.

- Improve Step: The augmented dataset from the Grow step is ranked and filtered with a scoring function derived from a reward model trained on human preferences. The policy is then fine-tuned on the filtered dataset. Successive Improve iterations raise the filtering threshold, so the policy is fine-tuned on progressively higher-scoring subsets of the data.
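
The two steps above can be sketched as a small loop. This is a toy illustration, not the paper's implementation: the policy is a hypothetical categorical distribution over three fixed outputs, `toy_reward` stands in for a learned reward model, and "fine-tuning" is replaced by refitting the distribution to the filtered samples. All names and threshold values are assumptions made for the example.

```python
import random
from collections import Counter


def grow(policy, prompts, samples_per_prompt, rng):
    """Grow step: sample outputs from the current policy and store them offline."""
    outputs, weights = zip(*policy.items())
    return [
        (x, rng.choices(outputs, weights=weights)[0])
        for x in prompts
        for _ in range(samples_per_prompt)
    ]


def improve(policy, dataset, reward_fn, threshold, smoothing=1e-3):
    """Improve step: keep samples scoring above the threshold, then refit.

    Refitting to empirical frequencies is a stand-in for fine-tuning an LLM.
    """
    kept = [y for x, y in dataset if reward_fn(x, y) > threshold]
    if not kept:  # nothing passed the filter: leave the policy unchanged
        return policy
    counts = Counter(kept)
    total = len(kept) + smoothing * len(policy)
    return {y: (counts[y] + smoothing) / total for y in policy}


def rest(policy, prompts, reward_fn, thresholds, grow_steps=2,
         samples_per_prompt=50, seed=0):
    """Alternate Grow and Improve; thresholds increase within each Grow step."""
    rng = random.Random(seed)
    for _ in range(grow_steps):
        dataset = grow(policy, prompts, samples_per_prompt, rng)
        for tau in thresholds:  # several Improve steps reuse one dataset
            policy = improve(policy, dataset, reward_fn, tau)
    return policy


def expected_reward(policy, reward_fn, prompt):
    """Average reward of the policy's outputs for one prompt."""
    return sum(p * reward_fn(prompt, y) for y, p in policy.items())


# Hypothetical toy setup: three candidate outputs with fixed scores.
TOY_SCORES = {"bad": 0.0, "ok": 0.5, "good": 1.0}


def toy_reward(prompt, output):
    return TOY_SCORES[output]


initial_policy = {"bad": 1 / 3, "ok": 1 / 3, "good": 1 / 3}
final_policy = rest(initial_policy, ["prompt"], toy_reward, thresholds=[0.4, 0.9])
```

Comparing `expected_reward(final_policy, toy_reward, "prompt")` with the same quantity for `initial_policy` shows the effect of filtering: each Improve step shifts probability mass toward outputs that pass the current threshold, and no new samples are drawn until the next Grow step.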

A key advantage of ReST is that the Improve step can use different offline RL algorithms, which makes the method adaptable to a range of generation tasks. In addition, because the data is produced offline, its quality can be controlled by keeping only high-reward samples, which yields significant improvements over purely supervised training.
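
One way to make the interchangeable loss concrete is to write the Improve objective as a reward-filtered loss. The notation here is an assumption of this overview and may differ from the paper's: $\mathcal{D}_g$ is the dataset produced by the Grow step, $R$ the reward model score, $\tau$ the filtering threshold, and $\mathcal{L}$ any offline loss, for example the negative log-likelihood.

$$
J(\theta) = \mathbb{E}_{(x, y) \sim \mathcal{D}_g}\left[ F(x, y; \tau)\, \mathcal{L}(x, y; \theta) \right], \qquad F(x, y; \tau) = \mathbb{1}\left[ R(x, y) > \tau \right]
$$

With $\mathcal{L}$ set to the negative log-likelihood, this reduces to supervised fine-tuning on the samples that pass the filter. Swapping $\mathcal{L}$ changes the offline RL algorithm without touching the Grow step.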

Empirical Evaluation

The paper evaluates ReST on machine translation, using established benchmarks such as IWSLT 2014 and WMT 2020 across different language pairs. The evaluation combines automated metrics with human evaluation and shows that ReST substantially improves on supervised learning baselines. ReST variants achieve higher reward model scores, and they improve further when Best-of-N sampling is applied at inference time. Human evaluations also favor ReST over the baselines, although reward model scores and human judgments do not always agree, which points to room for refinement.
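
Best-of-N sampling at inference time can be sketched in a few lines. This is a minimal illustration under stated assumptions: `sample_fn` and `reward_fn` are hypothetical callables standing in for the fine-tuned policy and the reward model, and the toy versions below only exist to make the example runnable.

```python
import random


def best_of_n(sample_fn, reward_fn, prompt, n, rng):
    """Draw n candidates from the policy and return the highest-scoring one."""
    candidates = [sample_fn(prompt, rng) for _ in range(n)]
    return max(candidates, key=lambda y: reward_fn(prompt, y))


# Hypothetical stand-ins: the "policy" emits a random integer,
# and the "reward model" scores an output by its value.
def toy_sampler(prompt, rng):
    return rng.randint(0, 9)


def toy_score(prompt, output):
    return float(output)


single = best_of_n(toy_sampler, toy_score, "prompt", 1, random.Random(0))
best = best_of_n(toy_sampler, toy_score, "prompt", 16, random.Random(0))
```

Because both calls start from the same seed, the 16 candidates include the single sample, so `best` can never score lower than `single`. The cost is N forward generations plus N reward model evaluations per prompt.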

Implications and Future Directions

ReST's design presents several implications for the future development of RLHF techniques in natural language processing:

- Scalability and Computational Efficiency: By capitalizing on offline data production, ReST significantly reduces computational demands compared to traditional online RL approaches, making it a scalable option for large-scale LLMs.

- Robust Policy Improvement: Through the iterative Grow and Improve steps, ReST provides a structured methodology for policy enhancement, which can be flexibly adapted with various offline RL frameworks, such as behavior regularization strategies like BVMPO and GOLD.

- Addressing Reward Misalignment: The research highlights the potential gaps between reward models and human evaluations. Future work might explore dynamic adjustments to reward models during ReST iterations or investigate hybrid approaches combining online feedback mechanisms.

- Broader Applicability: Although the paper focuses on machine translation, the ReST framework is general and could be applied to other language generation tasks, such as summarization and dialogue systems.

Overall, ReST is a promising method that combines the computational efficiency of offline RL with iterative policy improvement for aligning LLMs with human preferences, making it a notable contribution to RLHF for language modeling.