Understanding the Effect of Model Compression on Social Bias in LLMs

The paper "Understanding the Effect of Model Compression on Social Bias in LLMs" investigates an underexplored area in the development of large language models (LLMs): the relationship between model compression techniques and the persistence of social biases within these models. The work is relevant to both theoretical understanding and practical deployment, because it connects two goals that are usually pursued separately: reducing computational cost and mitigating bias.

Summary and Key Findings

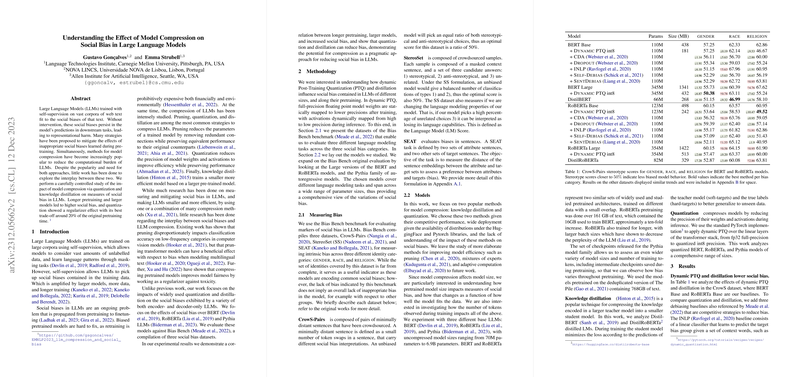

LLMs are trained on massive datasets that inherently contain social biases. As these models grow in size and capability, they tend to amplify these biases, which makes it harder to ensure fair outputs in downstream tasks. This paper focuses on two model compression techniques, quantization and knowledge distillation, and examines their effects on social bias in BERT, RoBERTa, and Pythia models.
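
As background for the distillation side of the comparison: in knowledge distillation, a smaller student model is trained to match the softened output distribution of a larger teacher. The minimal plain-Python sketch below computes the classic soft-target loss popularized by Hinton et al. (the KL divergence between temperature-scaled teacher and student distributions, multiplied by the squared temperature) for a single prediction. The function names and the default temperature of 2.0 are illustrative assumptions, and this is not necessarily the recipe behind the distilled models evaluated in the paper, which may add other loss terms.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; subtracting the max logit avoids overflow."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: Sequence[float],
                      teacher_logits: Sequence[float],
                      temperature: float = 2.0) -> float:
    """Soft-target loss: KL(teacher || student) on softened distributions, times T^2.

    The T^2 factor keeps gradient magnitudes comparable across temperatures.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # Skip zero-probability teacher entries, whose KL contribution is zero.
    kl = sum(pt * (math.log(pt) - math.log(ps))
             for pt, ps in zip(p_teacher, p_student) if pt > 0.0)
    return kl * temperature ** 2


# Hypothetical logits over a 3-token vocabulary for one position.
loss = distillation_loss(student_logits=[0.2, 1.5, -0.3], teacher_logits=[0.1, 2.0, -1.0])
print(f"distillation loss: {loss:.4f}")
```

A higher temperature flattens both distributions, so the student also learns from the teacher's relative preferences among lower-ranked tokens rather than only its top choice.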

The methodology uses models of varying sizes and amounts of pretraining to track how bias metrics change over the course of pretraining. Evaluations use the Bias Bench benchmark, which comprises three datasets covering different social identities and supports an examination of gender, race, and religion bias.
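
To illustrate how pair-based bias metrics are typically computed, the sketch below follows the CrowS-Pairs style of scoring: for each pair of minimally different sentences, it checks whether the model assigns a higher score to the stereotypical variant, then reports the percentage per bias category, where 50% indicates no systematic preference. It assumes `score` is any sentence-level (pseudo-)log-likelihood function; `SentencePair`, `stereotype_preference_rate`, and `toy_score` are hypothetical names, and the metrics actually used in Bias Bench are more specific (the original CrowS-Pairs metric, for instance, scores only the tokens the two sentences share).

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, NamedTuple


class SentencePair(NamedTuple):
    category: str            # bias category, e.g. "gender", "race", "religion"
    stereotypical: str       # sentence expressing the stereotype
    anti_stereotypical: str  # minimally edited counterpart


def stereotype_preference_rate(pairs: Iterable[SentencePair],
                               score: Callable[[str], float]) -> Dict[str, float]:
    """Per-category % of pairs where the stereotypical sentence scores higher.

    50.0 means no systematic preference; values above 50 mean the model
    prefers stereotypical sentences in that category.
    """
    wins: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for pair in pairs:
        totals[pair.category] += 1
        if score(pair.stereotypical) > score(pair.anti_stereotypical):
            wins[pair.category] += 1
    return {cat: 100.0 * wins[cat] / totals[cat] for cat in totals}


def toy_score(sentence: str) -> float:
    """Hypothetical stand-in for a model's log-likelihood: shorter sentences score higher."""
    return -float(len(sentence))


pairs = [
    SentencePair("gender", "short", "much longer"),
    SentencePair("gender", "much longer", "short"),
    SentencePair("religion", "tiny", "not so tiny"),
]
print(stereotype_preference_rate(pairs, toy_score))  # {'gender': 50.0, 'religion': 100.0}
```

Reporting the rate per category, rather than as one pooled number, is what makes category-level differences in bias visible.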

Notable findings from their experiments include:

- Compression as a Bias Mitigator: Both dynamic Post-Training Quantization (PTQ) and knowledge distillation reduced measured social bias. However, distillation often came at a noticeable cost in language modeling (LM) performance, whereas PTQ offered a more favorable balance between bias reduction and preserving model capability.

- Correlation Between Model Size and Bias: Larger models and longer pretraining were associated with higher social bias. This trend underscores the need for effective bias mitigation strategies, particularly as models scale.

- Inconsistent Bias Behavior Across Categories: Bias scores varied across categories (gender, race, religion), and no single model size performed best on all of them, which points to the nuanced way bias propagates in LLMs.

- Regularizing Effect of PTQ: PTQ had a regularizing effect on bias consistently across model types and sizes, suggesting that it can reduce both computational load and social bias.
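
To make dynamic PTQ concrete: weights are converted to 8-bit integers once, after training, while the scale for each activation tensor is computed at inference time from that input's observed range. The pure-Python sketch below illustrates this arithmetic for a single linear layer. It assumes symmetric, per-tensor int8 quantization for both weights and activations as a simplification; production implementations such as PyTorch's `torch.ao.quantization.quantize_dynamic` differ in details. All function names are hypothetical, and this is not the paper's code.

```python
def quantize_symmetric(values, num_bits=8):
    """Quantize floats to signed integers with one per-tensor scale (zero-point fixed at 0)."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for int8
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def quantize_weights(weight_rows, num_bits=8):
    """Offline step: quantize the whole weight matrix once, after training."""
    n_cols = len(weight_rows[0])
    flat = [w for row in weight_rows for w in row]
    q_flat, scale = quantize_symmetric(flat, num_bits)
    q_rows = [q_flat[i:i + n_cols] for i in range(0, len(q_flat), n_cols)]
    return q_rows, scale


def dynamic_quantized_linear(x, q_weight_rows, w_scale, bias, num_bits=8):
    """Inference step: quantize this input on the fly, multiply in integers, dequantize."""
    q_x, x_scale = quantize_symmetric(x, num_bits)  # scale depends on this input only
    out = []
    for q_row, b in zip(q_weight_rows, bias):
        acc = sum(qw * qx for qw, qx in zip(q_row, q_x))  # integer accumulation
        out.append(acc * w_scale * x_scale + b)  # rescale to float, add float bias
    return out


def float_linear(x, weight_rows, bias):
    """Full-precision reference: y = W x + b."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight_rows, bias)]


weights = [[0.5, -1.2, 0.3], [2.0, 0.1, -0.7]]
bias = [0.1, -0.2]
x = [1.0, 0.25, -3.0]

q_weights, w_scale = quantize_weights(weights)
y_quant = dynamic_quantized_linear(x, q_weights, w_scale, bias)
y_float = float_linear(x, weights, bias)
print("float:", y_float)
print("int8 dynamic:", y_quant)
```

Because the activation scale is recomputed for every input, dynamic PTQ needs no calibration dataset, which is what distinguishes it from static PTQ.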

Implications and Future Directions

This paper frames model compression as both an efficiency tool and a potential bias regulator. Its most immediate implication is a case for adopting PTQ as a standard step when deploying LLMs, not only for computational efficiency but also as a preemptive bias mitigation measure.

The paper opens avenues for research into other compression techniques, such as pruning and adaptive computation, that might further decouple model efficiency from bias perpetuation. Additionally, extending bias evaluation datasets to cover a broader range of biases, beyond Western-centric perspectives, could give a more complete picture of LLM fairness in global contexts.

Conclusion

This research makes an important contribution to the field of LLMs by addressing the intersection of model efficiency and ethical AI development. By showing how compression techniques affect social bias, it invites a rethinking of model deployment strategies in academia and industry. Its findings lay groundwork for progress in which model utility does not come at the cost of fair and unbiased language processing.