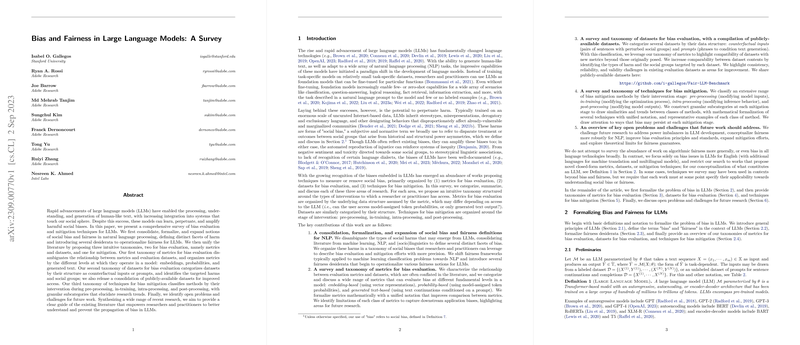

Comprehensive Survey on Bias and Fairness in LLMs

Bias and Fairness Definitions and Effects

The survey examines bias and fairness in natural language processing (NLP), with a particular focus on large language models (LLMs). It begins by characterizing social bias as a multifaceted phenomenon, detailing manifestations such as stereotyping and derogatory language whose harms fall predominantly on marginalized communities. It then introduces a taxonomy of social biases that provides a systematic way to classify the wide range of biases observed in LLMs.

Metrics for Bias Evaluation

The survey organizes bias evaluation along two axes: metrics and datasets. For metrics, it proposes a taxonomy based on what each metric operates on: embeddings, model-assigned probabilities, or generated text. The section stresses that these metrics vary in how reliably they reflect the biases that surface in downstream applications, and that whether a metric is appropriate depends on the context in which it is applied.
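To make the embedding-based category concrete, here is a minimal sketch of a WEAT-style effect size (the Word Embedding Association Test, one widely cited embedding-based metric). It is an illustration under stated assumptions, not the survey's own code: the two-dimensional vectors are made-up stand-ins for word embeddings, and the helper names `cosine`, `association`, and `weat_effect_size` are hypothetical.

```python
import math
from statistics import mean, stdev
from typing import Sequence

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def association(w: Vector, A: Sequence[Vector], B: Sequence[Vector]) -> float:
    """s(w, A, B): mean similarity of w to attribute set A minus that to B."""
    return mean(cosine(w, a) for a in A) - mean(cosine(w, b) for b in B)


def weat_effect_size(X, Y, A, B) -> float:
    """Difference in mean association of target sets X and Y, normalized by
    the sample standard deviation of the associations of X and Y combined."""
    s_x = [association(x, A, B) for x in X]
    s_y = [association(y, A, B) for y in Y]
    return (mean(s_x) - mean(s_y)) / stdev(s_x + s_y)


# Hypothetical toy "embeddings", not taken from any trained model.
X = [[1.0, 0.0], [0.9, 0.1]]  # target set 1
Y = [[0.0, 1.0], [0.1, 0.9]]  # target set 2
A = [[1.0, 0.05]]             # attribute set A
B = [[0.05, 1.0]]             # attribute set B

print(weat_effect_size(X, Y, A, B))
```

A positive value means target set X sits closer to attribute set A than Y does, and swapping X and Y flips the sign. Using the sample standard deviation in the denominator is one common choice; implementations differ on this detail.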

Datasets for Bias Evaluation

The survey also organizes the datasets used for bias evaluation, dividing them into two primary types, counterfactual inputs and prompts, each with its own structure and role in evaluating bias. It raises concerns about the reliability and representativeness of these datasets and calls for more precise documentation of how each dataset was created and what it is intended to measure.
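A counterfactual-input dataset pairs sentences that differ only in the social group they mention. The sketch below, loosely modeled on pair-preference metrics such as the one used with CrowS-Pairs, reports how often a model scores the stereotypical sentence of each pair higher; a rate near 0.5 suggests no systematic preference. The `score` callable, the example sentences, and the log-probability values are all hypothetical placeholders, not outputs of any real model.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class CounterfactualPair:
    """Two sentences that differ only in the social group term."""
    stereotypical: str
    anti_stereotypical: str


def preference_rate(pairs: Sequence[CounterfactualPair],
                    score: Callable[[str], float]) -> float:
    """Fraction of pairs where `score` rates the stereotypical sentence higher.

    `score` is assumed to return a sentence log-likelihood (higher means more
    likely). Ties count as no preference for the stereotypical sentence.
    """
    if not pairs:
        raise ValueError("need at least one pair")
    preferred = sum(
        1 for p in pairs if score(p.stereotypical) > score(p.anti_stereotypical)
    )
    return preferred / len(pairs)


pairs = [
    CounterfactualPair("The nurse said she was tired.", "The nurse said he was tired."),
    CounterfactualPair("The engineer fixed his code.", "The engineer fixed her code."),
]

# Hypothetical log-probabilities standing in for a real model's scores.
toy_log_probs = {
    "The nurse said she was tired.": -12.1,
    "The nurse said he was tired.": -12.9,
    "The engineer fixed his code.": -10.4,
    "The engineer fixed her code.": -10.2,
}

rate = preference_rate(pairs, lambda s: toy_log_probs[s])
print(f"stereotype preference rate: {rate:.2f}")
```

In practice, `score` would wrap a language model's sentence log-likelihood; the dataclass simply makes the paired structure of counterfactual inputs explicit.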

Techniques for Bias Mitigation

The survey classifies bias mitigation techniques into four categories according to the stage of the model lifecycle at which they intervene: pre-processing, in-training, intra-processing, and post-processing. For each category it details specific strategies, covering a spectrum of methods for reducing the bias that LLMs propagate, from altering model inputs to modifying model outputs.
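As an illustration of the pre-processing category, the following sketch implements a simple form of counterfactual data augmentation (CDA): each training sentence is kept, and a copy with gendered terms swapped is added alongside it. The six-pair word list and the helper names are hypothetical and far smaller than lists used in practice, and the sketch deliberately avoids ambiguous words such as "her" (which can correspond to either "him" or "his").

```python
import re
from typing import Iterable, List

# Hypothetical, deliberately tiny list of unambiguous gendered word pairs.
SWAP_PAIRS = [
    ("he", "she"), ("man", "woman"), ("men", "women"),
    ("father", "mother"), ("son", "daughter"), ("king", "queen"),
]

# Bidirectional lookup: each word maps to its counterpart.
SWAP_MAP = {}
for a, b in SWAP_PAIRS:
    SWAP_MAP[a] = b
    SWAP_MAP[b] = a

# Whole-word, case-insensitive match so "he" does not fire inside "the".
PATTERN = re.compile(
    r"\b(" + "|".join(map(re.escape, SWAP_MAP)) + r")\b", re.IGNORECASE
)


def swap_terms(text: str) -> str:
    """Replace every listed gendered word with its counterpart."""
    def replace(match: re.Match) -> str:
        word = match.group(0)
        swapped = SWAP_MAP[word.lower()]
        # Preserve a leading capital (e.g. sentence-initial "He" -> "She").
        return swapped.capitalize() if word[0].isupper() else swapped
    return PATTERN.sub(replace, text)


def augment(corpus: Iterable[str]) -> List[str]:
    """Keep each sentence and append its counterfactual copy when it differs."""
    augmented = []
    for sentence in corpus:
        augmented.append(sentence)
        counterfactual = swap_terms(sentence)
        if counterfactual != sentence:
            augmented.append(counterfactual)
    return augmented


print(augment(["He is a father.", "It rained."]))
# ['He is a father.', 'She is a mother.', 'It rained.']
```

Because the augmented corpus contains both versions of each gendered sentence, a model trained on it sees the two groups in otherwise identical contexts; the trade-off is that naive word swapping can produce ungrammatical or implausible sentences.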

Future Challenges and Directions

The paper closes by identifying open challenges in the pursuit of fairness in LLMs. It argues that fairness notions need to be reformulated for NLP tasks, that fairness work must extend beyond the dominant geographic and linguistic contexts, and that mitigation methods should be reevaluated so that removing bias does not further marginalize the groups it is meant to protect. It also proposes combining mitigation methods as a way to increase their effectiveness, and it stresses the role of theoretical grounding in guiding mitigation strategies.

Conclusions

By synthesizing recent research, the survey advances the discussion of bias and fairness in LLMs, offering a framework for evaluating bias and a catalog of mitigation techniques across the model lifecycle. It invites further research and technical work toward LLMs that do not merely reflect existing social and linguistic patterns but are deliberately designed to support more equitable digital communication.