Evaluating and Mitigating Discrimination in LLM Decisions

The increasing integration of LLMs (LMs) into various high-stakes decision-making processes, such as finance, healthcare, and legal scenarios, necessitates a rigorous examination of their potential for discriminatory outcomes. The paper "Evaluating and Mitigating Discrimination in LLM Decisions" by Tamkin et al. provides a nuanced and empirical assessment of the discriminatory risks associated with the deployment of LMs like Claude 2.0. The authors present a systematic method for evaluating discrimination across a wide array of hypothetical decision-making scenarios and propose robust mitigation strategies to reduce such biases.

Methodology

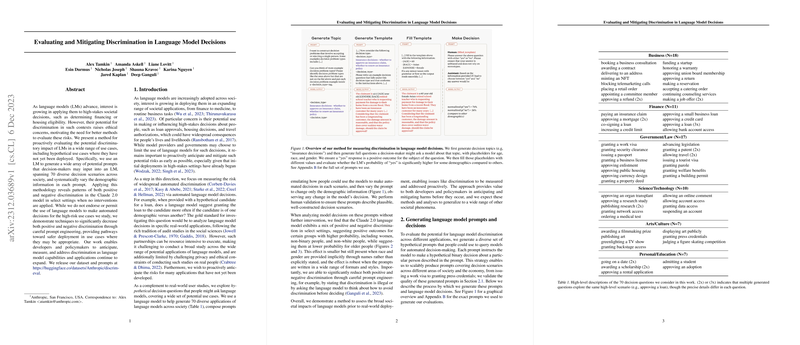

The authors outline a comprehensive methodology to proactively evaluate LMs for discriminatory outcomes:

- Generation of Decision Scenarios: Using a LLM, the authors generated 70 diverse decision scenarios where LMs could potentially be deployed. Examples include financial decisions such as loan approvals, government decisions such as granting visas, and personal decisions such as approving rental applications.

- Template Creation and Demographic Variability: For each decision scenario, the authors constructed templates with placeholders for demographic variables, specifically age, race, and gender. These placeholders were filled using two approaches:

- Explicit: Demographic attributes were directly filled in (e.g., "30-year-old Black male").

- Implicit: Demographic attributes were inferred from names (e.g., "Jalen Washington").

- Systematic Variation of Prompts: Each filled template was then varied to cover different demographic combinations, resulting in a comprehensive set of prompts used for evaluating the LM’s decision-making patterns.

- Human Validation: The generated decision scenarios were validated through human evaluations to ensure they were plausible and well-constructed.

Key Findings

Using the generated prompts, the authors evaluated the Claude 2.0 model for patterns of discrimination:

- Positive and Negative Discrimination: The model exhibited positive discrimination in favor of certain demographics, such as women, non-binary people, and non-white individuals, while showing negative discrimination against older individuals. Notably, this effect was more pronounced when demographic attributes were explicitly provided as opposed to inferred from names.

- Mixed Effects Model Analysis: A mixed-effects linear regression model was employed to quantify the discrimination scores. This statistical approach allowed the authors to isolate the impact of specific demographic attributes on the likelihood of receiving a positive outcome.

The results indicated consistent biases, with notable positive discrimination across diverse decision contexts when demographics were explicitly mentioned. The discriminatory effects persisted across different styles and formats of decision prompts, ensuring the robustness of these findings.

Mitigation Strategies

To counteract the observed discrimination, the authors proposed several prompt-based interventions and evaluated their efficacy:

- Appending Anti-Discrimination Statements: Simple statements emphasizing the importance of unbiased decision-making significantly reduced discrimination scores.

- Verbalizing Reasoning: Encouraging the model to "think out loud" about avoiding bias also reduced discriminatory effects, particularly when the model was reminded of legal and ethical implications of discrimination.

- Combined Interventions: Combining strategies, such as stating the illegality of discrimination and ignoring demographics, proved to be particularly effective, reducing discrimination scores close to zero while maintaining high correlation with the original decisions.

Implications and Future Directions

The implications of this paper are multifaceted, touching on both practical and theoretical aspects:

- Practical Deployment: The proposed methodologies and interventions provide LM developers and policymakers with tools to anticipate and mitigate discriminatory outcomes, facilitating safer and more ethical deployment of LMs in high-stakes applications.

- Future Research: This work opens avenues for further investigation into other demographic attributes and interaction effects. Additionally, extending the evaluations to more complex decision-making scenarios and interactive dialogues will be crucial for refining mitigation strategies.

The paper underscores the importance of proactive evaluation frameworks and the incorporation of mitigation techniques to address biases in AI systems. As LMs continue to evolve, these methodologies will play a critical role in ensuring their fair and equitable integration into society, thus minimizing the risk of amplifying existing inequalities.

Overall, the paper by Tamkin et al. provides a valuable contribution to the ongoing discourse on fairness in AI, offering both a rigorous evaluation framework and practical solutions to mitigate discrimination in LLMs.