Discrimination in the Age of Algorithms: An Expert Analysis

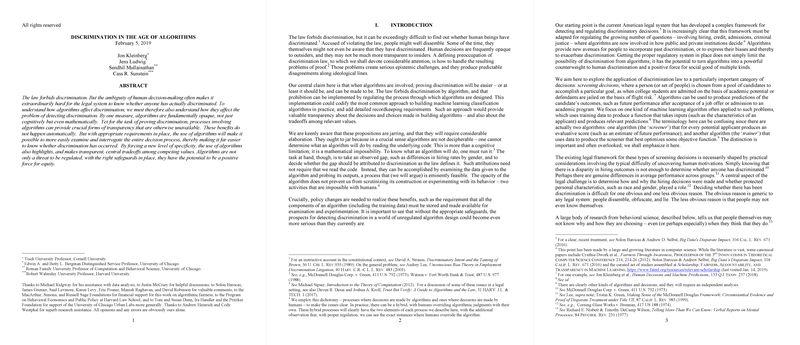

The paper "Discrimination in the Age of Algorithms" by Jon Kleinberg, Jens Ludwig, Sendhil Mullainathan, and Cass R. Sunstein addresses the intersection of legal frameworks and algorithmic decision-making, focusing on whether algorithms mitigate or exacerbate discrimination. It evaluates how algorithms affect the detection and mitigation of discriminatory practices and proposes that, with proper regulation, algorithms could significantly enhance transparency and fairness in decisions traditionally marred by human bias.

The authors identify the challenge of proving discrimination within human systems, noting how difficult it is to uncover the motivations behind disparate treatment and disparate impact. They argue that algorithms, though often criticized for their opacity, offer a unique opportunity to systematize and scrutinize decision processes in ways that are not feasible with human judgment. When design processes, training data, and algorithmic inputs are made transparent, algorithms can be audited and improved to minimize bias.
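To make the idea of an audit concrete, here is a minimal sketch of one check that becomes possible once a decision log is available: comparing selection rates across groups. This is an illustration only, not a method taken from the paper. The group labels, the decision log, and the function names `selection_rates` and `impact_ratio` are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of positive decisions per group.

    `decisions` is an iterable of (group, selected) pairs, where
    `selected` is True if the person was chosen.
    """
    totals = defaultdict(int)
    chosen = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            chosen[group] += 1
    return {group: chosen[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Return the lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    lowest = min(rates.values())
    return lowest / highest if highest > 0 else float("nan")


# Hypothetical decision log: 10 people in each of two groups.
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(decisions)
ratio = impact_ratio(rates)
print(rates)
print(ratio)
```

A ratio well below 1 does not by itself establish discrimination. It flags a disparity that an auditor would then trace back to the training data, the inputs, or the chosen outcome.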

Key Considerations

- Transparency and Regulation: The paper underscores the necessity of regulation, especially in how algorithms are trained, the data used, and the outcomes they target. Transparency in these factors can make discrimination easier to detect and address.

- Iterative Nature of Algorithm Construction: The authors distinguish between two kinds of algorithms: the screener and the trainer. The trainer uses historical data to produce a screener that predicts outcomes such as job performance or creditworthiness. Transparency in these processes, specifically around data usage and objective functions, can uncover biases that may be baked into models.

- Human Elements in Algorithmic Decisions: There is a critical need to regulate the human decisions involved in creating algorithms, especially the choice of data sets and the definition of objectives. If not managed properly, these decisions can lead to disparate impact or disparate treatment, which underscores the dual responsibility of monitoring both human and algorithmic biases.

- Implications of Algorithmic Decisions: Algorithms, with their ability to quantify trade-offs, provide a clearer picture of the potential impacts of different decision-making strategies in diverse fields such as employment, criminal justice, and education. This aspect can help in evaluating and potentially reconciling fairness and efficiency.

- Potential for Disparate Benefits: Beyond reducing discrimination, algorithms can improve accuracy in decision-making, which often benefits historically disadvantaged groups. The paper highlights this potential for "disparate benefit," indicating cases where more accurate predictions could enhance social equity.
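The trainer and screener distinction in the list above can be sketched in a few lines. This is a deliberately simplified illustration under stated assumptions, not the authors' formulation: the trainer here only learns a single cutoff on one feature, and the field names (`test_score`, `rated_high`, `met_sales_goal`) and the records are hypothetical. It shows how the human choice of target outcome changes the screener that the trainer produces.

```python
def trainer(records, feature, target):
    """Learn from historical records and return a screener.

    The trainer picks the cutoff on `feature` that best predicts the
    boolean `target` in the historical data. Which `target` to predict
    is a human design choice made before any training happens.
    """
    candidates = sorted({r[feature] for r in records})

    def accuracy(cutoff):
        hits = sum((r[feature] >= cutoff) == r[target] for r in records)
        return hits / len(records)

    best_cutoff = max(candidates, key=accuracy)

    def screener(applicant):
        """Apply the learned rule to a new applicant."""
        return applicant[feature] >= best_cutoff

    screener.cutoff = best_cutoff  # exposed so the rule can be inspected
    return screener


# Hypothetical historical data with two possible outcomes to predict.
records = [
    {"test_score": 50, "rated_high": False, "met_sales_goal": False},
    {"test_score": 60, "rated_high": False, "met_sales_goal": True},
    {"test_score": 70, "rated_high": False, "met_sales_goal": True},
    {"test_score": 80, "rated_high": True, "met_sales_goal": True},
    {"test_score": 90, "rated_high": True, "met_sales_goal": True},
]

screen_on_rating = trainer(records, "test_score", "rated_high")
screen_on_sales = trainer(records, "test_score", "met_sales_goal")

applicant = {"test_score": 65}
print(screen_on_rating.cutoff, screen_on_rating(applicant))
print(screen_on_sales.cutoff, screen_on_sales(applicant))
```

The same applicant is rejected by one screener and accepted by the other, even though both were built from the same data. Because the target is an explicit argument to the trainer, the choice is visible and open to review, which is the kind of transparency the paper argues regulation should require.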

Future Developments in AI and Fairness

The authors suggest that algorithms might not only maintain fairness in decision processes but, if developed and implemented with transparency and accountability, might also advance equity. Regulation of algorithmic decision-making could transform algorithms into tools of social good, ensuring they are harnessed to counteract human biases where possible.

Thus, algorithms represent a powerful, if complex, means to address deep-seated issues of discrimination within systems of decision-making. The paper implies that future advances in AI fairness should aim to refine methods for transparency and the regulation of algorithms while carefully considering ethical implications in diverse applications.

In conclusion, the paper provides a rigorous exploration of discrimination in the context of algorithmic processes and identifies both challenges and opportunities. Its insights encourage ongoing dialogue and development toward integrating equitable algorithms across societal domains.