Analyzing OPERA: Addressing Hallucination in Multi-Modal LLMs

The paper "OPERA: Alleviating Hallucination in Multi-Modal LLMs via Over-Trust Penalty and Retrospection-Allocation" proposes OPERA, a decoding method for reducing hallucination in Multi-Modal LLMs (MLLMs). Because it operates only at decoding time, OPERA requires no additional training and no supplementary data.

Context and Motivation

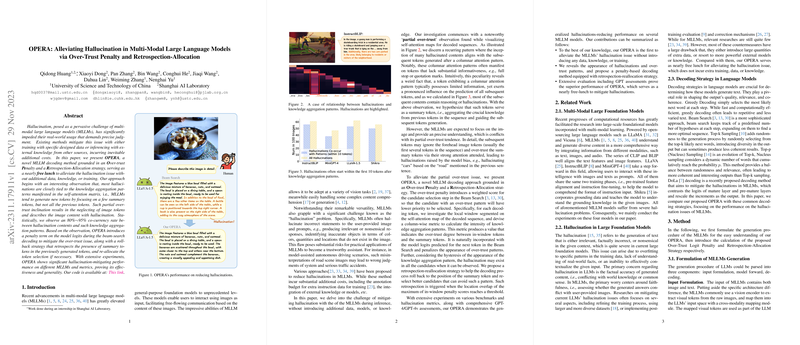

Recent advances in MLLMs have extended foundation models to multiple modalities, chiefly combining text and image inputs, so that they can perform complex reasoning and generate content from visual cues. However, they remain prone to hallucination: the model produces content that is inconsistent with the input image (for example, describing objects that are not present) or is otherwise factually incorrect. This is a serious obstacle in applications that demand high precision, such as autonomous driving and medical image analysis.

Existing strategies for addressing hallucination typically retrain models with additional data or incorporate external knowledge, which increases computational and financial costs. OPERA instead changes only how tokens are selected during decoding, which the authors describe as a "nearly free lunch": it aims to improve accuracy without the cost of retraining or collecting new data.

Key Contributions

OPERA is grounded in two primary concepts: Over-Trust Penalty and Retrospection-Allocation.

- Over-Trust Penalty: The authors observe that hallucinations tend to coincide with a "knowledge aggregation" pattern in the self-attention maps of MLLMs, visible as columns of high attention: during generation, the model relies heavily on a few summary tokens rather than on all preceding tokens. This over-reliance weakens the use of visual token information and leads to content unrelated to the image. OPERA adds a penalty term to candidate scores during beam-search decoding that down-weights candidates exhibiting this over-trust pattern, favoring candidates whose attention is spread more evenly across tokens.

- Retrospection-Allocation: A rollback strategy lets the model revisit and revise earlier token choices. OPERA checks whether recently generated tokens keep concentrating on the same summary token; if they do, decoding rolls back to that point and the next token is re-selected from the remaining candidates, excluding the one chosen before. This improves the coherence and relevance of the generated content.
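The two mechanisms above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the scaling factor `sigma`, the penalty weight `alpha`, and the rollback `threshold` are hypothetical, and the caller is assumed to supply a local attention window for each candidate (for instance, one layer's attention averaged over heads) and the position of the strongest column at each recent step. The aggregation score multiplies scaled attention values down each column of the lower-triangular window, so a column that every later token attends to strongly dominates. The penalty subtracts this score from each candidate's log-probability, and the rollback check fires when the same position keeps winning.

```python
import math
from collections import Counter
from typing import Sequence, Tuple

# Hypothetical sketch of OPERA-style decoding signals. Names, defaults, and the
# exact scoring rule are illustrative assumptions, not the paper's code.


def aggregation_score(window: Sequence[Sequence[float]],
                      sigma: float = 50.0) -> Tuple[float, int]:
    """Return (score, column) for the strongest columnar attention pattern.

    window[i][j] is the attention the i-th token of a local k-token window pays
    to the j-th token of the same window; only the lower triangle (j <= i) is
    read. Each column's entries are scaled by sigma and multiplied together.
    """
    best_score, best_col = -math.inf, -1
    for c in range(len(window)):
        prod = 1.0
        for i in range(c, len(window)):
            prod *= sigma * window[i][c]
        if prod > best_score:
            best_score, best_col = prod, c
    return best_score, best_col


def penalized_choice(log_probs: Sequence[float],
                     windows: Sequence[Sequence[Sequence[float]]],
                     alpha: float = 1.0, sigma: float = 50.0) -> int:
    """Index of the candidate maximizing log-prob minus alpha * aggregation score.

    windows[n] is the local attention window that would result if candidate n
    were appended (assumed to be computed by the caller).
    """
    adjusted = [lp - alpha * aggregation_score(w, sigma)[0]
                for lp, w in zip(log_probs, windows)]
    return max(range(len(adjusted)), key=adjusted.__getitem__)


def rollback_target(recent_cols: Sequence[int], threshold: int) -> int:
    """Position to roll back to, or -1 if no rollback is needed.

    recent_cols holds the absolute position of the max-scoring column at each
    of the last few steps. A position recurring at least `threshold` times is
    treated as an over-trusted summary token.
    """
    if not recent_cols:
        return -1
    pos, count = Counter(recent_cols).most_common(1)[0]
    return pos if count >= threshold else -1


if __name__ == "__main__":
    # Candidate 0 is more likely, but every token in its window attends to column 0.
    focused = [[0.10, 0.0, 0.0], [0.10, 0.01, 0.0], [0.10, 0.01, 0.01]]
    spread = [[0.02, 0.0, 0.0], [0.01, 0.02, 0.0], [0.01, 0.01, 0.02]]
    print(penalized_choice([-0.5, -0.9], [focused, spread]))  # 1
    print(rollback_target([7, 7, 7, 12], threshold=3))  # 7
```

Because log-probabilities from one softmax differ from the raw logits only by a constant, subtracting the penalty from either gives the same ranking. A full beam search would apply the same adjustment to every beam's candidates; after a rollback, the previously chosen token would be excluded from the candidate set before re-selecting.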

Experimental Results

The paper reports substantial reductions in hallucination across several MLLMs, measured with CHAIR, POPE, and evaluations assisted by GPT-4 and GPT-4V. In the GPT-4V-assisted evaluation, OPERA improves accuracy scores by up to 27.5% over baseline decoding methods. It also maintains the quality of the generated text, as shown by lower perplexity scores and positive human evaluations of grammar, fluency, and naturalness.
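CHAIR, one of the metrics above, is simple enough to sketch directly. As commonly defined, CHAIR_I is the fraction of mentioned objects that do not appear in the image, and CHAIR_S is the fraction of captions that mention at least one such object; lower is better for both. The sketch below assumes object mentions have already been extracted from each caption and normalized to the ground-truth vocabulary (the synonym matching used in practice is omitted), and the function name `chair` is hypothetical.

```python
from typing import Iterable, Sequence, Set, Tuple


def chair(mentions: Iterable[Sequence[str]],
          present: Iterable[Set[str]]) -> Tuple[float, float]:
    """Return (CHAIR_S, CHAIR_I) over a collection of generated captions.

    mentions[n]: object names extracted from caption n (assumed already mapped
    to canonical names); duplicates within one caption are counted once.
    present[n]: ground-truth objects in image n.
    """
    n_caps = n_hall_caps = n_objs = n_hall_objs = 0
    for mentioned, truth in zip(mentions, present):
        objs = set(mentioned)
        hallucinated = objs - truth  # mentioned but not in the image
        n_caps += 1
        n_hall_caps += 1 if hallucinated else 0
        n_objs += len(objs)
        n_hall_objs += len(hallucinated)
    chair_s = n_hall_caps / n_caps if n_caps else 0.0
    chair_i = n_hall_objs / n_objs if n_objs else 0.0
    return chair_s, chair_i


if __name__ == "__main__":
    captions = [["dog", "frisbee", "car"], ["cat"]]
    truth = [{"dog", "frisbee"}, {"cat", "sofa"}]
    print(chair(captions, truth))  # (0.5, 0.25)
```

In the example, only the first caption hallucinates an object ("car"), so half of the captions are affected (CHAIR_S = 0.5) and one of four mentioned objects is hallucinated (CHAIR_I = 0.25).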

Implications and Future Directions

OPERA has implications for both the theoretical understanding and the practical deployment of MLLMs. Because it reduces hallucination without additional training or external data, it makes MLLMs more reliable and more usable in critical domains where accuracy is paramount.

Future research could extend OPERA to a broader range of hallucinations beyond object identification. Other directions include testing how well the method generalizes to different architectures and developing more sophisticated metrics for detecting knowledge aggregation patterns, which could make it more robust.

In summary, this paper advances decoding strategies for MLLMs by reducing hallucination at decoding time, without retraining or extra data. As multi-modal models are deployed in more real-world applications, methods like this will be important for making them trustworthy.