Alleviating Hallucination in Large Vision-Language Models with Active Retrieval Augmentation

The paper "Alleviating Hallucination in Large Vision-Language Models with Active Retrieval Augmentation" addresses a prevalent problem in Large Vision-Language Models (LVLMs): hallucination. A model hallucinates when it answers a question about an image with a response that is semantically plausible but factually incorrect, for example by describing an object that is not in the image. To mitigate this, the authors introduce the Active Retrieval-Augmented large vision-language model (ARA), a framework that supplements an LVLM with external knowledge retrieved from a database and performs retrieval only when it is likely to help.

Key Contributions

The paper makes three main contributions, all aimed at making LVLM outputs more accurate and reliable:

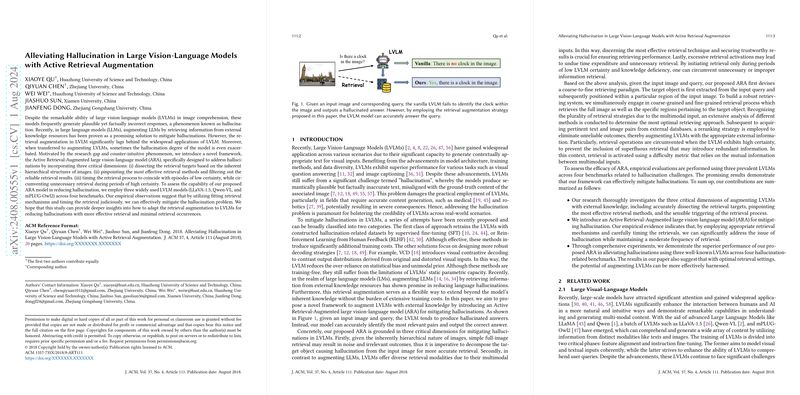

- Coarse-to-Fine Retrieval Framework: Because images are hierarchical, with a global scene composed of individual objects, the paper proposes a two-stage retrieval mechanism that combines coarse (full-image) and fine-grained (object-specific) retrieval. This way, both the overall context of an image and its specific details inform retrieval.

- Optimal Retrieval Methods: The paper systematically compares retrieval techniques to identify the most effective ones, including different ways of using the visual and textual information stored in the database to supplement the model's internal knowledge. It also examines how the choice of embedding model affects retrieval quality.

- Active Triggering Based on Query Difficulty: To avoid unnecessary retrievals and improve efficiency, ARA triggers retrieval selectively, based on the estimated difficulty of each query. Difficulty is estimated with a mutual-information-based metric that measures how strongly the model's output depends on the visual input.
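The paper's exact difficulty metric is not reproduced here. As a minimal sketch, assume visual dependency is approximated by the KL divergence between the model's answer distribution with the image, p(y | image, query), and without it, p(y | query); this is a single-sample proxy for the mutual information between the answer and the image. The threshold, the rule that low dependency triggers retrieval, and all function names are our assumptions.

```python
import numpy as np


def log_softmax(logits):
    """Numerically stable log-softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))


def visual_dependency(logits_with_image, logits_without_image):
    """KL(p(y | image, query) || p(y | query)) over the answer vocabulary.

    Single-sample proxy for the mutual information between the answer and
    the image: near zero when the image barely changes the prediction.
    """
    logp = log_softmax(np.asarray(logits_with_image, dtype=float))
    logq = log_softmax(np.asarray(logits_without_image, dtype=float))
    return float(np.sum(np.exp(logp) * (logp - logq)))


def should_retrieve(logits_with_image, logits_without_image, threshold=0.1):
    """Hypothetical trigger rule: retrieve when the answer barely depends on the image.

    Assumption: low visual dependency means the model is leaning on its
    language prior, which is treated here as a hard query.
    """
    return visual_dependency(logits_with_image, logits_without_image) < threshold


# Toy logits for a two-token answer space ("yes", "no").
print(should_retrieve([2.0, 0.5], [2.0, 0.5]))  # True: the image changes nothing
print(should_retrieve([5.0, 0.0], [0.0, 5.0]))  # False: the image flips the answer
```

In practice the "without image" logits would come from a second forward pass with the image removed or corrupted; how ARA obtains its reference distribution is not specified here.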

Methodology

Input and Decoding in LVLMs

The input to an LVLM consists of an image and a text query. Both are converted into token sequences, one representing the image and one representing the accompanying text, and the model decodes its answer conditioned on both. In ARA's retrieval-augmented decoding, when retrieval is triggered, the external information it returns is also integrated into the decoding process.
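As a hypothetical illustration, assume the database stores a caption with each image and that retrieved captions are passed to the model as extra text context alongside the image tokens. The augmented text input could then be assembled as below; the template and `build_augmented_prompt` are illustrative, not the paper's prompt.

```python
def build_augmented_prompt(question, retrieved_captions, max_items=3):
    """Hypothetical template: list retrieved captions before the question."""
    # Keep only the highest-ranked, non-empty captions so the context stays short.
    selected = [c.strip() for c in retrieved_captions[:max_items] if c.strip()]
    if not selected:
        # Retrieval was not triggered or returned nothing: use a plain prompt.
        return f"Question: {question}\nAnswer:"
    context = "\n".join(f"[{i}] {c}" for i, c in enumerate(selected, start=1))
    return (
        "Descriptions of similar images (may be incomplete):\n"
        f"{context}\n"
        f"Question: {question}\nAnswer:"
    )


print(build_augmented_prompt(
    "Is there a dog in the image?",
    ["A brown dog lying on grass.", "A park bench next to a tree."],
))
```

Falling back to the plain prompt when nothing is retrieved mirrors the idea that retrieval is optional and only used when triggered.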

Coarse-to-Fine Hierarchical Retrieval

- Coarse-Grained Retrieval: Uses CLIP to embed the input image and the images in a large database, then retrieves the database images most visually similar to the input. These retrieved items supply broad contextual information that supports the model's understanding of the scene.

- Fine-Grained Retrieval: Targets specific objects in the image. An LLM extracts the key entities mentioned in the query, and a grounding technique locates those entities in the image. Retrieval then homes in on these regions, the parts of the image most relevant to the query, and supplies more detailed, focused external knowledge.
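Both retrieval granularities can be sketched with plain cosine-similarity search over precomputed embeddings. In this sketch `mean_color_embed` is a toy stand-in for the CLIP image encoder, the bounding boxes are assumed to come from a grounding model, and the database is an in-memory embedding matrix; all names are hypothetical.

```python
import numpy as np


def l2_normalize(x):
    """Scale vectors (last axis) to unit length for cosine similarity."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def top_k_cosine(query_emb, db_embs, k):
    """Return indices and scores of the k database rows closest to the query."""
    sims = l2_normalize(db_embs) @ l2_normalize(query_emb)
    order = np.argsort(-sims)[:k]
    return order, sims[order]


def mean_color_embed(image):
    """Toy stand-in for a CLIP image encoder: the mean color of the image."""
    return image.reshape(-1, image.shape[-1]).mean(axis=0)


def crop(image, box):
    """Crop an H x W x C array; box = (x0, y0, x1, y1) in pixels."""
    x0, y0, x1, y1 = box
    return image[y0:y1, x0:x1]


def coarse_to_fine_retrieve(image, boxes, embed, db_embs, k=2):
    """Coarse: query with the whole image. Fine: query with each grounded box."""
    coarse, _ = top_k_cosine(embed(image), db_embs, k)
    fine = [top_k_cosine(embed(crop(image, box)), db_embs, k)[0] for box in boxes]
    return coarse, fine


# Toy image: left half red, right half blue.
image = np.zeros((4, 4, 3))
image[:, :2, 0] = 1.0
image[:, 2:, 2] = 1.0
# Toy database embeddings: red, blue, purple.
db = np.array([[1.0, 0.0, 0.0], [0.0, 0.0, 1.0], [0.5, 0.0, 0.5]])
# Boxes a grounding model might return for two entities named in the query.
boxes = [(0, 0, 2, 4), (2, 0, 4, 4)]
coarse, fine = coarse_to_fine_retrieve(image, boxes, mean_color_embed, db)
print(int(coarse[0]), [int(f[0]) for f in fine])  # 2 [0, 1]
```

The whole image best matches the "purple" entry (its global average), while each crop matches the entry for its own object, which is the distinction the coarse and fine stages are meant to capture.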

Advanced Reranking and Joint Decoding Mechanisms

Once retrieval is complete, the retrieved candidates are reranked by semantic similarity so that only the most relevant items are used. A joint decoding step then combines these refined results to produce a more accurate final response.
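A minimal sketch of both steps, under stated assumptions: reranking scores candidates by cosine similarity between a query embedding and candidate embeddings, and joint decoding is modeled as a weighted mixture of the next-token distributions produced under different retrieved contexts (for example, one coarse and one fine). The mixture rule, the weights, and the function names are our assumptions, not necessarily the paper's exact formulation.

```python
import numpy as np


def rerank(query_emb, cand_embs, keep):
    """Order candidates by cosine similarity to the query; keep the best `keep`."""
    q = query_emb / np.linalg.norm(query_emb)
    c = cand_embs / np.linalg.norm(cand_embs, axis=1, keepdims=True)
    return np.argsort(-(c @ q), kind="stable")[:keep]


def softmax(logits):
    """Numerically stable softmax over a 1-D array of logits."""
    z = np.exp(logits - logits.max())
    return z / z.sum()


def joint_next_token(logits_per_context, weights):
    """Assumed joint decoding: mix next-token distributions from each context.

    logits_per_context holds one logit vector per retrieved context;
    weights set each context's contribution and are normalized to sum to 1.
    """
    probs = np.stack([softmax(np.asarray(row, dtype=float)) for row in logits_per_context])
    w = np.asarray(weights, dtype=float)
    mixed = (w / w.sum()) @ probs  # convex combination, so it still sums to 1
    return int(np.argmax(mixed)), mixed


query = np.array([1.0, 0.0])
candidates = np.array([[0.0, 1.0], [1.0, 1.0], [2.0, 0.0]])
print(rerank(query, candidates, keep=2).tolist())  # [2, 1]
token, dist = joint_next_token([[2.0, 0.0], [0.0, 1.0]], weights=[0.5, 0.5])
print(token)  # 0
```

Mixing probabilities rather than raw logits keeps the result a valid distribution regardless of how differently the contexts are scaled.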

Experimental Evaluations

ARA was evaluated on three LVLMs (LLaVA-1.5, Qwen-VL, and mPLUG-Owl2) across several benchmarks, including POPE, MME, MMStar, and MMBench. The results show substantial reductions in hallucination:

- POPE Benchmark: Significant gains in accuracy, precision, and recall across the random, popular, and adversarial settings.

- MME Subset: Notable improvements in both object-level and attribute-level hallucination scores, with consistent performance increases across all subsets.

- MMStar and MMBench: Superior performance, particularly on subsets that demand stronger reasoning, reinforcing the effectiveness of retrieval augmentation.
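For reference, POPE asks yes/no questions about whether an object is present in the image, with "yes" as the positive class. The metrics named above, plus F1, can be computed from model answers as follows; the function name and the rule that any answer other than "yes" counts as "no" are simplifying assumptions.

```python
def pope_metrics(preds, labels):
    """Accuracy, precision, recall and F1 for POPE-style yes/no answers.

    'yes' (object present) is the positive class; any prediction other than
    'yes' is treated as 'no' (a simplifying assumption).
    """
    if len(preds) != len(labels) or not preds:
        raise ValueError("preds and labels must be non-empty and equal length")
    pred_yes = [p.strip().lower() == "yes" for p in preds]
    gold_yes = [g.strip().lower() == "yes" for g in labels]
    tp = sum(p and g for p, g in zip(pred_yes, gold_yes))
    fp = sum(p and not g for p, g in zip(pred_yes, gold_yes))
    fn = sum(g and not p for p, g in zip(pred_yes, gold_yes))
    tn = len(preds) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(preds),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# One true positive, one false positive, one false negative, one true negative:
# all four metrics are 0.5.
print(pope_metrics(["yes", "Yes", "no", "no"], ["yes", "no", "yes", "no"]))
```

A model that hallucinates objects tends to answer "yes" too often, which shows up as lower precision, so precision is a useful signal alongside accuracy.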

Discussion and Future Directions

These findings open the way for further work on retrieval-augmented generation. The results suggest that retrieval mechanisms designed to trigger only when needed can substantially improve the reliability and accuracy of LVLMs. Future work could make retrieval more fine-grained, refine the confidence metrics used to trigger retrieval, and expand the external knowledge database to cover a wider range of topics.

Conclusion

The paper "Alleviating Hallucination in Large Vision-Language Models with Active Retrieval Augmentation" makes significant progress on the hallucination problem in LVLMs with a retrieval-augmented framework. By combining hierarchical retrieval, systematically selected retrieval methods, and retrieval triggered by query difficulty, ARA improves the accuracy and reliability of LVLM outputs, with gains across multiple benchmarks and model architectures. The work sets a useful precedent for further refinement and application of retrieval-augmented generation in vision-language models.