Introduction

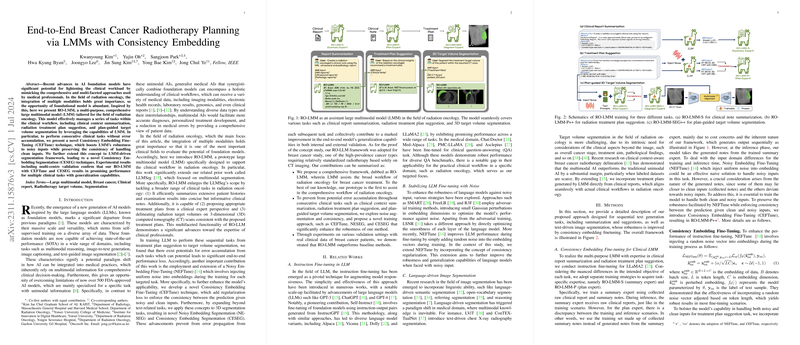

AI has given the medical field tools that assist clinical decisions and reduce workloads. Most AI models, however, are designed to handle a single task on uni-modal data, which fits poorly with the multifaceted responsibilities of medical professionals. This paper introduces RO-LLaMA, a generalist LLM tailored to the clinical workflow in radiation oncology.

Methodology

RO-LLaMA covers three areas of the workflow: (1) summarizing comprehensive patient histories into concise clinical notes, (2) proposing treatment plans from a clinical expert's perspective, and (3) delineating radiation target volumes directly from clinical reports. Because these tasks run in sequence, an error in one step carries over into the next. To make the model robust to such errors, two fine-tuning techniques are applied: Noisy Embedding Fine-Tuning (NEFTune), which injects noise into embeddings during training, and Consistency Embedding Fine-Tuning (CEFTune), which enforces prediction consistency between noisy and clean inputs. Applied to 3D segmentation, the same ideas yield Noisy Embedding Segmentation (NESEG) and Consistency Embedding Segmentation (CESEG), which are intended to improve the model's generalization.
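The two ideas named above, noise injection and clean-versus-noisy consistency, can be illustrated with a minimal sketch. It is not the authors' implementation. The noise scaling (uniform noise multiplied by `alpha / sqrt(seq_len * dim)`) follows the published NEFTune recipe, while `toy_model`, the mean-squared consistency penalty, and every function name here are assumptions chosen only to keep the example self-contained; the paper may use a different distance and a different training setup.

```python
import math
import random


def add_neftune_noise(embeddings, alpha=5.0, rng=None):
    """Return a noisy copy of a (seq_len x dim) embedding matrix.

    Each entry receives uniform noise in [-1, 1] scaled by
    alpha / sqrt(seq_len * dim).
    """
    rng = rng or random.Random()
    seq_len, dim = len(embeddings), len(embeddings[0])
    scale = alpha / math.sqrt(seq_len * dim)
    return [[x + scale * rng.uniform(-1.0, 1.0) for x in row] for row in embeddings]


def toy_model(embeddings):
    """Hypothetical stand-in for a network: mean-pool tokens, then softmax."""
    dim = len(embeddings[0])
    pooled = [sum(row[j] for row in embeddings) / len(embeddings) for j in range(dim)]
    peak = max(pooled)  # subtract the max for numerical stability
    exps = [math.exp(p - peak) for p in pooled]
    total = sum(exps)
    return [e / total for e in exps]


def consistency_loss(clean_pred, noisy_pred):
    """Assumed penalty: mean squared gap between clean and noisy predictions."""
    return sum((c - n) ** 2 for c, n in zip(clean_pred, noisy_pred)) / len(clean_pred)


if __name__ == "__main__":
    rng = random.Random(0)
    clean = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]  # 2 tokens, 3 dimensions
    noisy = add_neftune_noise(clean, alpha=5.0, rng=rng)
    # In training, this term would be added to the task loss on the noisy input.
    print(consistency_loss(toy_model(clean), toy_model(noisy)))
```

In this sketch, noise-only training would use just the noisy forward pass, whereas the consistency variant also runs the clean input and penalizes disagreement between the two predictions.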

Experiments and Results

Experiments on multi-centre cohorts support RO-LLaMA's approach. For the text-related tasks, clinical report summarization and treatment plan suggestion, the model augmented with NEFTune and CEFTune outperformed baseline methods on both internal and external datasets. For the 3D target volume segmentation task, RO-LLaMA combined with NESEG and CESEG outperformed traditional methods, which supports its capacity for multi-modal reasoning.

Discussion and Conclusion

RO-LLaMA is presented as a versatile, multi-task tool that could ease the integration of AI into routine medical workflows. It goes beyond current AI solutions, which are often limited to uni-modal, single-task applications. Its use of noise augmentation and consistency regularization may inform the development of fully generalist medical AI models that cover the whole clinical workflow in departments such as radiation oncology.