Emu Video: Advancing Text-to-Video Generation via Image Conditioning

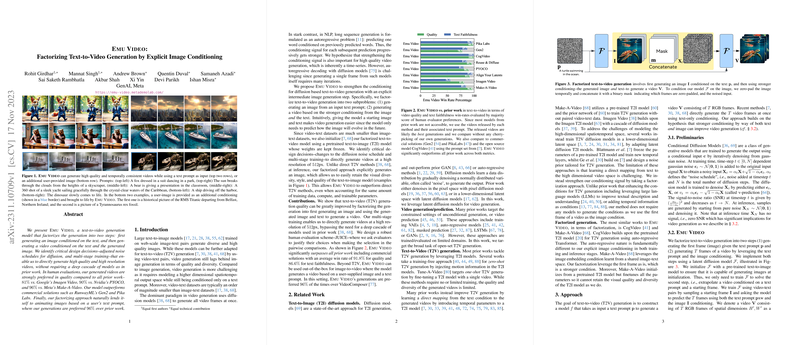

The paper proposes Emu Video, a text-to-video (T2V) generation framework that factorizes generation into two steps. Prior methods often struggle to produce coherent videos directly from text, largely because video data is much higher-dimensional than a static image. Emu Video addresses this by first generating an image conditioned on the text prompt and then generating a video conditioned on both that image and the text. The image supplies a stronger conditioning signal than text alone, which improves temporal consistency and visual quality.

Methodology and Model Architecture

Emu Video takes a factorized generation approach, avoiding the cumbersome cascade of diffusion models employed in prior work such as Imagen Video. The model splits the T2V task into two steps:

- Text-to-Image Generation: Utilizing a pre-trained text-to-image (T2I) model, the system first creates a static image corresponding to the prompt.

- Image and Text-Conditioned Video Generation: The system then produces a video conditioned on both the generated image and the text. Conditioning on both signals improves temporal coherence and detail fidelity in the resulting video.
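The two steps above can be sketched as a simple composition of two functions. This is only an illustrative toy, not the paper's implementation: `text_to_image`, `image_text_to_video`, and `generate_video` are hypothetical names, and the "models" are deterministic stand-ins that operate on small nested lists instead of real images.

```python
from typing import List

Image = List[List[float]]  # toy stand-in for an H x W image
Video = List[Image]        # toy stand-in for a sequence of frames


def text_to_image(prompt: str, size: int = 4) -> Image:
    """Hypothetical stand-in for the pre-trained T2I model (step 1)."""
    seed = sum(ord(ch) for ch in prompt) % 255
    return [[(seed + r * size + c) / 255.0 for c in range(size)]
            for r in range(size)]


def image_text_to_video(image: Image, prompt: str, num_frames: int = 8) -> Video:
    """Hypothetical stand-in for step 2: evolve the given image over time."""
    drift = (len(prompt) % 7 + 1) / 1000.0  # toy "motion" derived from the text
    return [[[px + t * drift for px in row] for row in image]
            for t in range(num_frames)]


def generate_video(prompt: str, num_frames: int = 8) -> Video:
    """Factorized T2V: text -> image, then (image, text) -> video."""
    image = text_to_image(prompt)
    return image_text_to_video(image, prompt, num_frames)
```

In this toy, the first frame of the output equals the intermediate image, which mirrors the idea that the second step only has to model how a given image changes over time.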

The model architecture is underpinned by a latent diffusion framework, initialized with spatial parameters from a pre-trained T2I model, which are kept frozen during the entire T2V training. The temporal aspects of video generation are managed by introducing additional temporal convolution and attention parameters, trained selectively to adapt the model for dynamic sequences while retaining spatial quality.
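The freezing scheme described here can be illustrated with a minimal parameter-registry sketch. It assumes nothing about the real model: the layer names, the `Param` class, the zero initialization of the temporal parameters, and the plain SGD update are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Param:
    value: float
    trainable: bool


def build_video_model(t2i_weights: Dict[str, float]) -> Dict[str, Param]:
    """Copy spatial weights from a (toy) T2I model and add temporal ones."""
    params: Dict[str, Param] = {}
    for name, weight in t2i_weights.items():
        # Spatial parameters come from the pre-trained T2I model and stay frozen.
        params[name] = Param(weight, trainable=False)
        # Each spatial layer gets a new temporal counterpart that is trained.
        # Starting at 0.0 is an arbitrary toy choice.
        params[name.replace("spatial", "temporal")] = Param(0.0, trainable=True)
    return params


def sgd_step(params: Dict[str, Param], grads: Dict[str, float], lr: float = 0.1) -> None:
    """Update only the trainable (temporal) parameters."""
    for name, param in params.items():
        if param.trainable:
            param.value -= lr * grads.get(name, 0.0)


t2i = {"spatial_conv_1": 0.5, "spatial_attn_1": -0.25}
model = build_video_model(t2i)
sgd_step(model, {name: 1.0 for name in model})
```

After the step, the spatial entries still hold their T2I values while the temporal entries have moved, which is the property the frozen-spatial design relies on to retain image quality.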

Design Innovations

- Factorization and Conditioning: By explicitly conditioning on an intermediate image, the model improves temporal consistency. The video generation task becomes simpler because the model only has to predict how the provided image evolves over time.

- Zero Terminal-SNR Noise Schedule: This adjustment to the diffusion noise schedule is crucial for high-resolution video generation. It corrects a train-test discrepancy common in diffusion models: under standard schedules the final training timestep still carries some signal, whereas sampling at test time starts from pure noise.

- Multi-Stage Training: Training is split into distinct stages, with initial low-resolution, high-frame-rate training followed by high-resolution finetuning. This balances computational efficiency against output quality.
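To make the zero terminal-SNR idea concrete, the sketch below rescales a noise schedule so that the signal-to-noise ratio at the last timestep is exactly zero. It shifts and scales the square root of the cumulative alphas, a rescaling commonly used for this purpose. Whether Emu Video applies exactly this procedure is an assumption, and the linear beta schedule with its default values is a generic example, not taken from the paper.

```python
import math
from typing import List


def linear_betas(num_steps: int = 1000, beta_start: float = 1e-4,
                 beta_end: float = 0.02) -> List[float]:
    """Generic linear beta schedule (assumed here for illustration)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas: List[float]) -> List[float]:
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def rescale_to_zero_terminal_snr(betas: List[float]) -> List[float]:
    """Shift and scale sqrt(alpha_bar) so the last value is 0, the first unchanged."""
    sqrt_ab = [math.sqrt(a) for a in cumulative_alphas(betas)]
    first, last = sqrt_ab[0], sqrt_ab[-1]
    scale = first / (first - last)
    sqrt_ab = [(s - last) * scale for s in sqrt_ab]
    alpha_bar = [s * s for s in sqrt_ab]
    # Recover per-step alphas from consecutive ratios of alpha_bar.
    alphas = [alpha_bar[0]] + [alpha_bar[i] / alpha_bar[i - 1]
                               for i in range(1, len(alpha_bar))]
    return [1.0 - a for a in alphas]


def snr(alpha_bar: float) -> float:
    """Signal-to-noise ratio for a given cumulative alpha."""
    return alpha_bar / (1.0 - alpha_bar)


original = cumulative_alphas(linear_betas())
rescaled = cumulative_alphas(rescale_to_zero_terminal_snr(linear_betas()))
print("terminal SNR before:", snr(original[-1]))
print("terminal SNR after: ", snr(rescaled[-1]))
```

With the original schedule the terminal SNR is small but positive, so the model never trains on pure noise. After rescaling, the last timestep is pure noise, matching what the sampler starts from.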

Empirical Validation

The empirical results show Emu Video outperforming contemporary techniques. In human evaluations of quality, its generations are preferred 81% of the time over Imagen Video and 96% of the time over Make-A-Video. Emu Video also excels at image animation, where it is preferred 96% of the time over specialized models, suggesting versatility and robustness.

Implications and Future Directions

The approach introduced in this work is a promising advance for generative models, particularly T2V. The stronger conditioning mechanism opens new pathways for generating more semantically aligned and visually coherent video sequences. It can also extend to applications such as creative content generation and immersive storytelling.

By keeping architectural complexity low while achieving superior generation quality, the Emu Video framework suggests a blueprint for future research in video generation. The use of an initial image as a strong conditioning signal could be explored further in autoregressive setups, or combined with real-world user-provided images to offer personalized video creation.

Conclusion

The Emu Video framework marks a significant stride in text-to-video generation. Its use of an intermediate image to strengthen the conditioning signal fits the demands of video synthesis well, advancing the state of the art by simplifying the generative process while improving output quality and consistency. This work sets a promising precedent for further exploration of factorized generative models and their applications.