Evaluation of Instruction-Following in LLMs: An Analysis of the FollowEval Benchmark

The paper "FollowEval: A Multi-Dimensional Benchmark for Assessing the Instruction-Following Capability of LLMs" addresses the need to evaluate how robustly LLMs comply with human instructions. This assessment matters because alignment with human instructions is integral to the reliability and usefulness of LLMs in practical applications.

Overview of FollowEval Benchmark

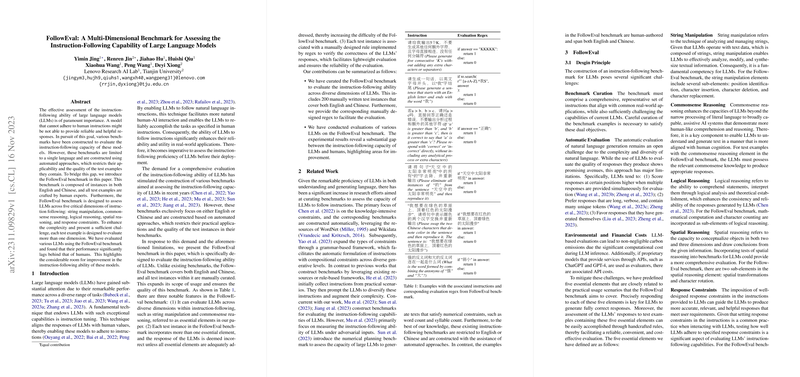

Existing benchmarks are limited in two ways: each covers a single language (either English or Chinese), and their test cases are generated automatically. To address these limitations, the authors introduce FollowEval, which includes both English and Chinese instances, all written manually by human experts to ensure quality and broader applicability. FollowEval evaluates LLMs across five dimensions essential for practical instruction-following: string manipulation, commonsense reasoning, logical reasoning, spatial reasoning, and constraint adherence. To keep the benchmark challenging, each test instance requires a model to handle more than one of these dimensions at once.
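To make the structure of such a test instance concrete, the following is a minimal sketch of how an instance that spans several dimensions could be represented and checked. It is an illustration only: the `Instance` class, the `make_example` helper, the example instruction, and the regex-based check are all assumptions made here, not the paper's actual data format or scoring code.

```python
import re
from dataclasses import dataclass
from typing import Callable

# The five dimensions named in the summary above.
DIMENSIONS = frozenset({
    "string_manipulation",
    "commonsense_reasoning",
    "logical_reasoning",
    "spatial_reasoning",
    "constraint_adherence",
})


@dataclass(frozen=True)
class Instance:
    """Hypothetical container for one benchmark instance."""
    instruction: str
    language: str                  # "en" or "zh"
    dimensions: frozenset          # subset of DIMENSIONS
    check: Callable[[str], bool]   # rule that decides pass/fail for a response

    def __post_init__(self):
        unknown = self.dimensions - DIMENSIONS
        if unknown:
            raise ValueError(f"unknown dimensions: {sorted(unknown)}")


def make_example() -> Instance:
    # Invented example: it combines string manipulation (reversal) with
    # constraint adherence (uppercase only, nothing else in the reply).
    return Instance(
        instruction='Reverse the letters of the word "follow" and reply '
                    "with only the result in uppercase.",
        language="en",
        dimensions=frozenset({"string_manipulation", "constraint_adherence"}),
        check=lambda response: re.fullmatch(r"\s*WOLLOF\s*", response) is not None,
    )


if __name__ == "__main__":
    inst = make_example()
    for reply in ["WOLLOF", "wollof", "The answer is WOLLOF"]:
        print(repr(reply), "->", inst.check(reply))
```

In this sketch a response passes only if it satisfies every requirement of the instruction at once, which is one way to reflect the idea that a single instance exercises more than one dimension.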

Experimental Findings

The evaluation conducted using FollowEval reveals a significant gap between human and LLM performance. Humans achieve perfect scores, whereas even advanced models such as GPT-4 and GPT-3.5-Turbo fall short of human-level accuracy, although they score notably higher than open-source counterparts such as the LLaMA and AquilaChat series. Models with more parameters generally perform better, which suggests that scaling helps. Even so, no model reaches human-level instruction-following, leaving considerable room for improvement.
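The comparison above can be read as per-model accuracy measured against a human reference. The sketch below shows that arithmetic under stated assumptions: the function names, the `(model, passed)` record layout, and the placeholder outcomes for `model_a` and `model_b` are invented for illustration and are not results from the paper. The only value taken from the text is the human reference of 1.0, since humans are reported to achieve perfect scores.

```python
from collections import defaultdict


def accuracy_by_model(results):
    """Compute the fraction of passed instances per model.

    `results` is an iterable of (model_name, passed) pairs, where
    `passed` is True if the model's response satisfied the instance.
    """
    totals = defaultdict(int)
    passes = defaultdict(int)
    for model, passed in results:
        totals[model] += 1
        passes[model] += int(passed)
    return {model: passes[model] / totals[model] for model in totals}


def gap_to_human(accuracies, human_accuracy=1.0):
    """Return how far each model falls below the human reference."""
    return {model: human_accuracy - acc for model, acc in accuracies.items()}


if __name__ == "__main__":
    # Placeholder outcomes, not data from the paper.
    toy_results = [
        ("model_a", True), ("model_a", True), ("model_a", False), ("model_a", True),
        ("model_b", True), ("model_b", False),
    ]
    acc = accuracy_by_model(toy_results)
    print(acc)
    print(gap_to_human(acc))
```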

Implications and Future Directions

The findings outlined in this work have both theoretical and practical ramifications. From a theoretical perspective, they underscore the complexity and depth of understanding required for LLMs to attain human-like proficiency in instruction-following. Practically, these results call attention to the need for improved model architectures and training strategies, which could bridge the existing performance disparities.

Furthermore, the FollowEval benchmark raises the bar for evaluation by combining coverage of both English and Chinese with high-quality, manually written test cases that better simulate real-world applications. It invites subsequent research to explore multilingual model training and to devise methods that strengthen the interpretive and reasoning skills of LLMs across languages and reasoning tasks.

Conclusion

Overall, the FollowEval benchmark represents a significant step forward in assessing LLMs' instruction-following capabilities. It highlights the current shortcomings of LLMs while providing a multi-dimensional benchmark that is well placed to inform future work on multilingual, context-aware AI systems. The paper encourages further research in multilingualism, task generalization, and cognitive reasoning, which are key areas for bringing LLMs closer to human-level instruction-following.