Overview of FollowBench: A Multi-level Fine-grained Constraints Following Benchmark for LLMs

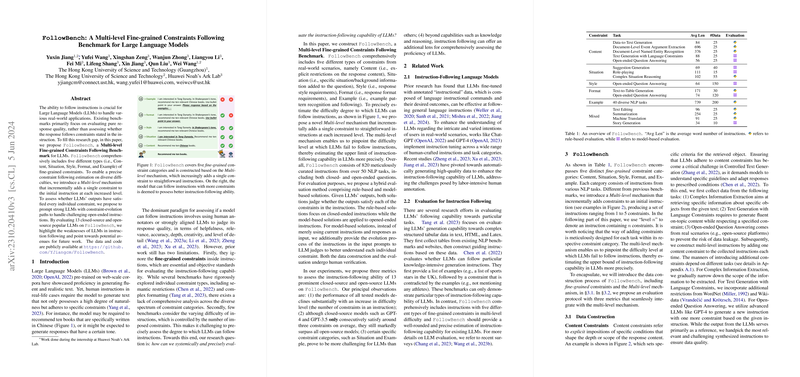

The paper presents FollowBench, a benchmark for evaluating the instruction-following capabilities of LLMs. It addresses a gap in current evaluation methods, which focus primarily on response quality and do not examine how well models adhere to the specific constraints laid out in an instruction. FollowBench is built around multi-level, fine-grained constraints across several categories, so it assesses whether a response obeys each stated requirement rather than only whether the response is good overall.

Key Contributions

The primary contribution of FollowBench is its structured evaluation design. It covers five distinct categories of constraints: Content, Situation, Style, Format, and Example. In addition, the benchmark uses a Multi-level mechanism that introduces progressively more challenging constraints, so that LLMs' capabilities can be evaluated incrementally, level by level.

- Content Constraints: These involve explicit requirements regarding the response content's depth or scope. The ability to follow content constraints is crucial in tasks such as controlled text generation, highlighting the model's capacity to adhere to predefined conditions.

- Situation Constraints: These pertain to specific situational or background contexts that guide appropriate model responses. This is particularly relevant in applications like role-playing and suggestion generation, where understanding nuanced contexts is critical.

- Style Constraints: These assess a model's ability to generate text in a required style, such as a particular tone or level of formality. This category evaluates how well the model maintains that style consistently across different contexts.

- Format Constraints: These constraints focus on the structural and presentational aspects of the output. Handling format constraints effectively is indicative of a model's ability to navigate intricate specifications, which is essential for tasks requiring structured outputs like tables or JSON.

- Example Constraints: This novel category examines a model’s robustness in following patterns from few-shot examples, even when additional "noise" examples are introduced.
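
The Multi-level mechanism can be illustrated with a small data structure. The following is a minimal sketch, not the benchmark's actual data format: it assumes that each level appends one more constraint to the instruction from the previous level, and that all constraints in an item belong to a single category. The class name `LeveledInstruction`, its fields, and the sample instruction are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List

# The five constraint categories named above.
CATEGORIES = ("content", "situation", "style", "format", "example")


@dataclass
class LeveledInstruction:
    """Hypothetical container: a seed instruction plus ordered constraints."""
    category: str
    seed: str
    constraints: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.category not in CATEGORIES:
            raise ValueError(f"unknown category: {self.category!r}")

    def at_level(self, level: int) -> str:
        """Return the seed followed by the first `level` constraints."""
        if not 0 <= level <= len(self.constraints):
            raise ValueError(f"level must be in 0..{len(self.constraints)}")
        return " ".join([self.seed, *self.constraints[:level]])

    def all_levels(self) -> List[str]:
        """Instructions for levels 1..N, each one constraint longer than the last."""
        return [self.at_level(n) for n in range(1, len(self.constraints) + 1)]


# Illustrative item (made up for this sketch, not taken from the benchmark).
item = LeveledInstruction(
    category="format",
    seed="List three fruits.",
    constraints=["Answer in JSON.", "Sort the names alphabetically."],
)
for n, text in enumerate(item.all_levels(), start=1):
    print(f"level {n}: {text}")
```

Because every level reuses the same seed instruction, a drop in a model's score between two adjacent levels can be attributed to the constraint added at the higher level.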

Furthermore, FollowBench introduces three metrics: Hard Satisfaction Rate (HSR), Soft Satisfaction Rate (SSR), and Consistent Satisfaction Levels (CSL). HSR measures how often every constraint in an instruction is satisfied, SSR measures the average share of individual constraints satisfied, and CSL measures how many consecutive levels a model can satisfy. Together they give a detailed picture of constraint satisfaction across levels and indicate the upper limit of an LLM's instruction-following ability.
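
One way to read the three metrics is sketched below. This is an illustration under stated assumptions rather than the paper's reference implementation: it assumes each constraint has already been judged as a boolean, that HSR and SSR are computed over the instructions of one difficulty level, and that CSL counts the levels satisfied in a row starting from level 1. The function names and the sample judgments are hypothetical.

```python
from typing import List


def hard_satisfaction_rate(results: List[List[bool]]) -> float:
    """Fraction of instructions whose constraints are ALL satisfied.

    `results[i][j]` is True if constraint j of instruction i was met.
    Assumes a non-empty list of instructions.
    """
    return sum(all(r) for r in results) / len(results)


def soft_satisfaction_rate(results: List[List[bool]]) -> float:
    """Mean, over instructions, of the fraction of constraints satisfied.

    Assumes every instruction has at least one constraint.
    """
    return sum(sum(r) / len(r) for r in results) / len(results)


def consistent_satisfaction_levels(groups: List[List[bool]]) -> float:
    """Mean number of consecutive levels satisfied, counted from level 1.

    `groups[i][n]` is True if the level-(n+1) instruction of group i
    had all of its constraints satisfied.
    """
    def streak(levels: List[bool]) -> int:
        count = 0
        for ok in levels:
            if not ok:
                break
            count += 1
        return count

    return sum(streak(g) for g in groups) / len(groups)


# Made-up judgments for two level-3 instructions (three constraints each).
level3 = [[True, True, True], [True, False, True]]
hsr = hard_satisfaction_rate(level3)
ssr = soft_satisfaction_rate(level3)

# Made-up per-level outcomes for three instruction groups, five levels each.
groups = [
    [True, True, False, True, False],
    [True, True, True, True, True],
    [False, True, True, True, True],
]
csl = consistent_satisfaction_levels(groups)
print(f"HSR={hsr:.3f} SSR={ssr:.3f} CSL={csl:.3f}")
```

In this reading HSR is the strictest score, since one missed constraint fails the whole instruction, while SSR gives partial credit. CSL ignores successes that come after the first failed level, which is why the third group above contributes zero.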

Experimental Insights

The authors evaluate ten prominent LLMs on FollowBench and find significant disparities in instruction adherence. Closed-source models such as GPT-4 and GPT-3.5 show a marked advantage over their open-source counterparts, which suggests that proprietary models benefit from more diverse datasets and more refined optimization strategies. Performance also drops notably as the difficulty level increases, which underscores how hard complex instruction adherence remains for LLMs.

Implications and Future Directions

FollowBench offers a comprehensive framework for scrutinizing the instruction-following proficiency of LLMs, which has implications for their deployment in user-interactive applications. The multi-level design and diverse constraint categories illuminate specific areas where LLMs excel or falter, guiding future enhancements in model training regimes and evaluation strategies.

The findings underscore the need for continued advances in LLMs, particularly in handling constraints that require deep contextual understanding and adaptability. The benchmark opens avenues for research into training methodologies that improve models' proficiency in these areas.

In summary, FollowBench is a valuable tool for evaluating LLMs, providing a granular view of their ability to follow intricate human instructions. By pinpointing areas of strength and weakness, it paves the way for targeted improvements and contributes to the development of more reliable and versatile language models.