Summary of "Self-Improving for Zero-Shot Named Entity Recognition with LLMs"

The paper explores a novel, training-free framework to enhance zero-shot Named Entity Recognition (NER) capabilities using LLMs. By leveraging the self-learning abilities of LLMs in a resource-constrained setting, the authors propose a self-improving paradigm that significantly boosts performance over existing zero-shot approaches.

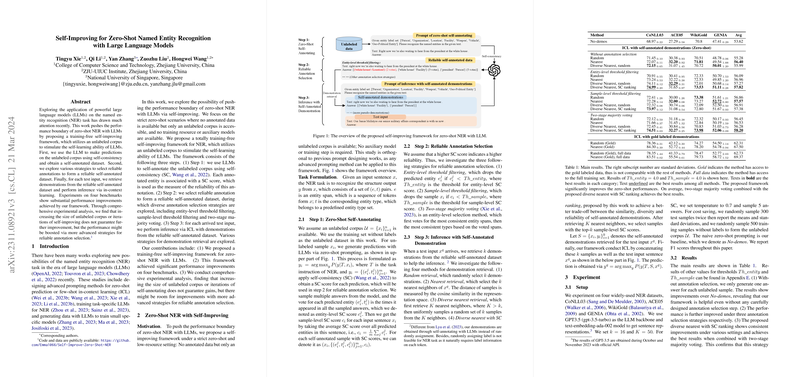

Framework Overview

The proposed framework operates under strict zero-shot conditions, where no annotated data or auxiliary models are available, and consists of three main steps:

- Zero-Shot Self-Annotating: The framework uses an LLM to generate predictions on an unlabeled corpus. Self-consistency (SC) produces a self-annotated dataset in which each entity prediction carries an SC score indicating the reliability of the annotation.

- Reliable Annotation Selection: Several strategies filter the self-annotated data to improve annotation reliability:

  - Entity-level threshold filtering: Discards entity annotations with SC scores below a predefined threshold.

  - Sample-level threshold filtering: Discards an entire sample if its average SC score falls below a specified threshold.

  - Two-stage majority voting: Selects the most consistent entity mentions, then the most consistent type for each selected mention.

- Inference with Self-Annotated Demonstrations: For each test input, the framework retrieves demonstrations from the reliable self-annotated dataset and performs inference through in-context learning. Several retrieval strategies, including nearest-neighbor and diverse sampling methods, are compared on performance.
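The scoring and selection steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation. It assumes each sampled LLM answer has already been parsed into a dict mapping mention to type, that the SC score of an entity is the fraction of sampled answers containing it, and that stage one of the vote keeps mentions predicted by more than half of the answers. All function names are hypothetical.

```python
from collections import Counter


def sc_scores(samples):
    """Score each (mention, type) pair by the fraction of sampled answers
    that contain it. `samples` is a list of {mention: type} dicts."""
    n = len(samples)
    votes = Counter(pair for answer in samples for pair in answer.items())
    return {pair: count / n for pair, count in votes.items()}


def entity_level_filter(scores, threshold):
    """Keep only entity annotations whose SC score reaches the threshold."""
    return {pair: s for pair, s in scores.items() if s >= threshold}


def sample_score(scores):
    """Average SC score of a sample (assumption: 0.0 when it has no entities)."""
    return sum(scores.values()) / len(scores) if scores else 0.0


def sample_level_filter(dataset, threshold):
    """Keep (sentence, scores) samples whose average SC score reaches the threshold."""
    return [(sent, sc) for sent, sc in dataset if sample_score(sc) >= threshold]


def two_stage_majority_vote(samples):
    """Stage 1: keep mentions predicted by more than half of the answers.
    Stage 2: assign each kept mention its most frequent type."""
    n = len(samples)
    mention_votes = Counter(m for answer in samples for m in answer)
    result = {}
    for mention, count in mention_votes.items():
        if count * 2 > n:
            type_votes = Counter(a[mention] for a in samples if mention in a)
            result[mention] = type_votes.most_common(1)[0][0]
    return result
```

In this sketch the thresholds are free parameters; the summary does not state which values the paper uses.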

Results and Implications

Experimental evaluation on four benchmark datasets shows that the framework yields substantial performance gains over baselines that do not use self-improving techniques. Importantly, the paper finds that simply enlarging the unlabeled corpus or adding more iterations of self-improvement does not guarantee further gains. Instead, improvements might be achievable through more sophisticated methods for selecting reliable annotations.

Technical Insights and Implications

- The reliability of the self-annotations is crucial, as the analysis of SC scores and of different filtering thresholds shows.

- The diverse nearest with SC ranking retrieval strategy introduced by the authors strikes a better balance between similarity, diversity, and annotation reliability than the other retrieval strategies analyzed.

- Comparison against a setting with gold-labeled data shows a remaining gap, which points to where future work could refine self-improvement strategies.
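One plausible reading of the SC-ranked retrieval strategy mentioned above is a two-stage selection: first take a wider set of nearest neighbors, then keep those with the highest SC scores. The sketch below illustrates that reading only. The two-stage procedure, the cosine similarity measure, the `vec` and `sc` fields, and the parameters `big_k` and `k` are all assumptions made for illustration and are not taken from the summary.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def nearest_then_sc_rank(query_vec, pool, big_k, k):
    """Pick k demonstrations for one test input.

    pool: list of dicts with hypothetical keys
          'text' (sentence), 'vec' (embedding), 'sc' (sample-level SC score).
    Stage 1: take the big_k candidates most similar to the query (big_k >= k).
    Stage 2: among them, keep the k with the highest SC score.
    """
    nearest = sorted(pool, key=lambda d: cosine(query_vec, d["vec"]), reverse=True)[:big_k]
    return sorted(nearest, key=lambda d: d["sc"], reverse=True)[:k]
```

Choosing `big_k` larger than `k` widens the candidate set beyond the strict nearest neighbors, and the SC ranking then favors the more reliable annotations within it.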

Future Directions

The paper suggests several avenues for future research:

- Exploration of advanced annotation selection strategies to improve the quality of self-labeled data.

- Extending the self-improving paradigm to other information extraction tasks and beyond lexical entities.

- Investigating the utility of additional LLM capabilities, such as self-verification, to mitigate inherent annotation noise.

Overall, the paper introduces a training-free paradigm for zero-shot NER in which an LLM improves its own predictions without requiring extensive computational resources or large-scale training datasets. The findings underscore the potential of LLMs in zero-shot settings while providing a foundation for more refined self-improving techniques.