An Overview of "The Transient Nature of Emergent In-Context Learning in Transformers"

The paper "The Transient Nature of Emergent In-Context Learning in Transformers" by Singh et al. studies in-context learning (ICL) in transformer models. It provides compelling evidence that ICL is often not an asymptotically persistent behavior but a transient one when transformers are trained for extended periods. ICL may emerge early in training and then gradually give way to in-weights learning (IWL), in which the model relies on information stored in its weights rather than on the examples in its context. This finding challenges the common assumption that once ICL emerges, it is a lasting property of the model.

Key Contributions

Transience of In-Context Learning

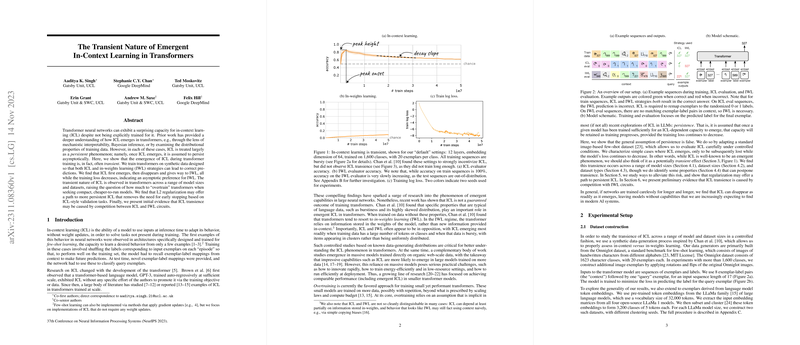

The authors investigate both the emergence and the subsequent disappearance of ICL in transformers. Prior work largely treated ICL as persistent once acquired. This paper shows, through experiments on synthetic datasets, that ICL can emerge and then fade, being replaced by IWL, even while the model's training loss continues to decrease. Because the loss keeps improving throughout, a falling training loss gives no signal that ICL is being lost.
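
To make "emerge and then fade" concrete, the sketch below flags an ICL accuracy curve as transient when it peaks and then falls back toward chance. The function names, the `keep_fraction` threshold, and the toy curves are hypothetical illustrations, not the paper's metric or data.

```python
from typing import Sequence, Tuple


def icl_peak_and_final(icl_acc: Sequence[float]) -> Tuple[int, float, float]:
    """Return (index of peak, peak accuracy, final accuracy) for an ICL accuracy curve."""
    if not icl_acc:
        raise ValueError("empty curve")
    # max() returns the first index that attains the maximum
    peak_idx = max(range(len(icl_acc)), key=lambda i: icl_acc[i])
    return peak_idx, icl_acc[peak_idx], icl_acc[-1]


def is_transient(icl_acc: Sequence[float], chance: float, keep_fraction: float = 0.5) -> bool:
    """Hypothetical criterion: ICL counts as 'transient' if it rose above chance,
    peaked before the last evaluation, and its final above-chance accuracy is
    less than keep_fraction of its peak above-chance accuracy."""
    peak_idx, peak, final = icl_peak_and_final(icl_acc)
    peak_gain = peak - chance
    if peak_gain <= 0:
        return False  # ICL never emerged above chance
    final_gain = max(final - chance, 0.0)
    peaked_early = peak_idx < len(icl_acc) - 1
    return peaked_early and final_gain < keep_fraction * peak_gain


# Toy curves (illustrative values, not results from the paper); chance = 0.5
rise_and_fall = [0.50, 0.60, 0.90, 0.95, 0.80, 0.60, 0.52]
persistent = [0.50, 0.70, 0.90, 0.92, 0.93]
print(is_transient(rise_and_fall, chance=0.5))  # True
print(is_transient(persistent, chance=0.5))  # False
```

The criterion looks only at ICL accuracy; training loss can keep falling over the same period, which is why it cannot be used to detect the decline.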

Experimental Setup and Findings

The experiments train transformer models on synthetic datasets in which both ICL and IWL can produce correct predictions. A central finding is that ICL tends to emerge first and then decline as a preference for IWL takes over. This pattern held across various model sizes and dataset configurations. In one of the main experiments, for example, the authors observed ICL rise to a peak and then decay in transformers with 12 layers and an embedding dimension of 64, trained on datasets with different characteristics.
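
The sketch below shows, in simplified form, the three kinds of sequences such a setup needs: training sequences that both strategies can solve, and two evaluators that isolate ICL and IWL. It assumes integer (class, exemplar) pairs stand in for images, two classes per context, and a fixed class-to-label mapping with distinct labels. The label-swap construction for the ICL-only evaluator and every name here are our assumptions, not the paper's code.

```python
import random
from typing import List, Sequence, Tuple

Item = Tuple[int, int]  # (class_id, exemplar_id): a stand-in for an image
Example = Tuple[List[Tuple[Item, int]], Item, int]  # (context, query, target label)


def make_sequence(rng: random.Random, num_classes: int, num_exemplars: int,
                  labels: Sequence[int], mode: str, context_len: int = 8) -> Example:
    """Build one sequence of (item, label) context pairs plus a query.

    mode="train":    the query's class appears in the context with its fixed label,
                     so copying from context (ICL) and recalling the fixed
                     class-to-label mapping (IWL) both give the right answer.
    mode="icl_eval": same layout, but the two context classes swap labels, so the
                     memorized mapping gives the wrong answer and only ICL works.
    mode="iwl_eval": the query's class is absent from the context, so only IWL works.
    Assumes num_classes >= 3 and distinct labels per class.
    """
    if mode not in ("train", "icl_eval", "iwl_eval"):
        raise ValueError(f"unknown mode: {mode}")
    q_cls, a, b = rng.sample(range(num_classes), 3)
    ctx_classes = (a, b) if mode == "iwl_eval" else (q_cls, a)
    if mode == "icl_eval":
        seq_labels = {q_cls: labels[a], a: labels[q_cls]}
    else:
        seq_labels = {c: labels[c] for c in (q_cls, a, b)}
    half = context_len // 2
    # Bursty context: half of the slots for each of the two context classes
    context = [((c, rng.randrange(num_exemplars)), seq_labels[c])
               for c in ctx_classes for _ in range(half)]
    rng.shuffle(context)
    query = (q_cls, rng.randrange(num_exemplars))
    return context, query, seq_labels[q_cls]


rng = random.Random(0)
labels = list(range(100))  # hypothetical fixed mapping: class c has label c
context, query, target = make_sequence(rng, 100, 20, labels, "icl_eval")
```

Tracking accuracy on the two evaluation modes separately over training is what lets the rise of ICL and its later replacement by IWL be told apart.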

Impact of Model and Dataset Sizes

When the authors varied model size, neither greater depth nor greater width substantially delayed the disappearance of ICL. Dataset size, however, had a notable effect: it was increasing the number of classes, rather than increasing within-class variation, that extended the period during which ICL was observable. This suggests that having many classes, each appearing less frequently, can partially mitigate the transience of ICL.
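
One rough way to see why the number of classes matters, offered as an assumption rather than the paper's analysis: if query classes are sampled uniformly, adding classes reduces how often each class is seen, whereas adding exemplars per class does not. The helper and the numbers below are hypothetical.

```python
from typing import Tuple


def expected_exposures(num_sequences: int, num_classes: int,
                       exemplars_per_class: int) -> Tuple[float, float]:
    """Expected number of times each class, and each individual exemplar, is the
    query over num_sequences training sequences, assuming uniform sampling."""
    per_class = num_sequences / num_classes
    per_exemplar = per_class / exemplars_per_class
    return per_class, per_exemplar


# Hypothetical budgets: the two larger datasets both contain 80,000 distinct items.
print(expected_exposures(1_000_000, 1_000, 10))  # (1000.0, 100.0)  baseline
print(expected_exposures(1_000_000, 8_000, 10))  # (125.0, 12.5)    more classes
print(expected_exposures(1_000_000, 1_000, 80))  # (1000.0, 12.5)   more exemplars
```

Both ways of growing the dataset reduce how often any single exemplar is seen, but only adding classes reduces how often each class-to-label mapping is rehearsed, which plausibly slows the memorization that IWL depends on. This is an interpretation consistent with the reported result, not the paper's stated explanation.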

Regularization and ICL Persistence

One of the paper's more practical contributions is the finding that L2 regularization can help maintain ICL. With regularization, ICL persisted longer, possibly because it reduces the competition between ICL and IWL within the transformer. The authors speculate that ICL circuits may be a lower-norm solution than IWL circuits, which an L2 penalty would favor.
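
For readers less familiar with the mechanism, here is a minimal sketch of how an L2 penalty enters training: it adds (λ/2)·‖w‖² to the loss, so each gradient step also shrinks every weight toward zero, which is why it favors lower-norm solutions. Plain SGD on a flat list of weights is an assumption for illustration, and `lam` is a hypothetical coefficient, not the paper's setting.

```python
from typing import List


def l2_penalty(weights: List[float], lam: float) -> float:
    """(lam / 2) * ||w||^2, added to the task loss."""
    return 0.5 * lam * sum(w * w for w in weights)


def sgd_step_with_l2(weights: List[float], grads: List[float],
                     lr: float, lam: float) -> List[float]:
    """One SGD step on task_loss + l2_penalty. The penalty's gradient is lam * w,
    so every weight is also pulled toward zero in proportion to its size."""
    return [w - lr * (g + lam * w) for w, g in zip(weights, grads)]


# With zero task gradient, each step multiplies the weights by (1 - lr * lam)
w = [2.0, -4.0]
w = sgd_step_with_l2(w, grads=[0.0, 0.0], lr=0.1, lam=0.5)
print(w)  # approximately [1.9, -3.8]
```

With adaptive optimizers such as Adam, adding this penalty to the loss is not equivalent to decoupled weight decay (as in AdamW), so the two should not be treated as interchangeable when reproducing the effect.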

Role of Dataset Distribution

The research also shows that the distribution of the training data affects ICL persistence. Zipfian distributions, which are common in natural language data, delayed the disappearance of ICL: introducing a moderate Zipfian skew into the data distribution postponed the onset of the decay and made the decline more gradual. This suggests that typical properties of language data may themselves support more persistent ICL.
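
A Zipfian skew can be sketched as sampling classes with probability proportional to 1/k^α, where k is a class's frequency rank. Applying the skew to classes and the specific values of α are assumptions for illustration; α = 0 recovers the uniform case.

```python
import random
from typing import List


def zipf_probs(num_classes: int, alpha: float) -> List[float]:
    """P(class with rank k) proportional to 1 / k**alpha, for k = 1..num_classes.
    alpha = 0 gives a uniform distribution; larger alpha gives a heavier skew."""
    weights = [1.0 / rank ** alpha for rank in range(1, num_classes + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def sample_classes(rng: random.Random, num_classes: int, alpha: float, n: int) -> List[int]:
    """Draw n class indices (0-based; index 0 is the most frequent class)."""
    return rng.choices(range(num_classes), weights=zipf_probs(num_classes, alpha), k=n)


rng = random.Random(0)
print([round(p, 3) for p in zipf_probs(4, alpha=1.0)])  # [0.48, 0.24, 0.16, 0.12]
draws = sample_classes(rng, num_classes=1_000, alpha=1.0, n=10)
```

Under such a skew, a few head classes appear often while many tail classes appear rarely, so the same training run mixes frequently rehearsed and rarely seen classes.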

Theoretical and Practical Implications

The findings have implications for both the theory and the practice of AI development:

- Theoretical Implications: ICL transience adds a new dimension to our understanding of how transformers learn. It challenges the view that emergent behaviors in neural networks are permanent once acquired and suggests that training dynamics are more complex than often assumed. It also invites further mechanistic study of transformer behavior and of the factors that govern the competition between ICL and IWL.

- Practical Implications: Because ICL can disappear while training loss keeps falling, ICL performance should be monitored throughout training; final training loss alone is not a sufficient signal if the goal is to retain ICL. Strategies such as early stopping based on ICL-style validation tasks and regularization deserve careful consideration as ways to preserve the desired capability.
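
A minimal sketch of early stopping keyed to an ICL-style validation metric rather than training loss, assuming a log of periodic evaluations. The `Checkpoint` record, the patience rule, and the logged values are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Checkpoint:
    step: int
    train_loss: float
    icl_acc: float  # accuracy on a held-out, ICL-only evaluation set


def best_by_loss(ckpts: List[Checkpoint]) -> Checkpoint:
    """The checkpoint a loss-only criterion would pick."""
    return min(ckpts, key=lambda c: c.train_loss)


def early_stop_on_icl(ckpts: List[Checkpoint], patience: int) -> Checkpoint:
    """Scan evaluations in order; stop once ICL accuracy has not improved for
    `patience` consecutive evaluations, and return the best ICL checkpoint seen."""
    if not ckpts:
        raise ValueError("no checkpoints")
    best, since_best = ckpts[0], 0
    for c in ckpts[1:]:
        if c.icl_acc > best.icl_acc:
            best, since_best = c, 0
        else:
            since_best += 1
            if since_best >= patience:
                break
    return best


# Illustrative log (made-up values): loss keeps falling while ICL peaks and decays.
log = [
    Checkpoint(1_000, 2.0, 0.55),
    Checkpoint(2_000, 1.2, 0.90),
    Checkpoint(3_000, 0.8, 0.85),
    Checkpoint(4_000, 0.5, 0.70),
    Checkpoint(5_000, 0.3, 0.55),
]
print(best_by_loss(log).step)  # 5000
print(early_stop_on_icl(log, patience=2).step)  # 2000
```

On a log like this, the lowest-loss checkpoint is exactly the one where ICL has faded, which is the failure mode the paper warns about.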

Future Directions

The findings suggest several promising avenues for future research:

- Mechanistic Studies: Future work could examine the mechanisms that make ICL transient. Identifying the specific circuits involved and how they interact could show how to engineer models whose in-context learning persists.

- Optimization Techniques: Alternative optimization choices, such as different initialization schemes or adaptive optimizers, might lead to more stable emergent behaviors.

- Extending to LLMs: Testing these insights on large language models would show whether the findings hold in more complex, real-world settings. In particular, it would be important to check whether the strategies identified in this paper, such as regularization and specific data properties (e.g., Zipfian distributions), work similarly on the large datasets used to train models like GPT-3.

- Intervention Strategies: Developing novel intervention strategies during training, which might include dynamic regularization schedules or targeted architectural modifications, could further enhance the persistence of ICL.

In conclusion, Singh et al. offer an important insight into the transient nature of in-context learning in transformers. By challenging the assumption that ICL persists once it emerges, the paper opens new lines of inquiry and points to practical measures, such as regularization and monitoring ICL throughout training, for making better use of transformer models in real-world applications.