Analysis of "Jailbreaking Black Box LLMs in Twenty Queries"

The paper under discussion introduces Prompt Automatic Iterative Refinement (PAIR), an algorithm for generating semantic jailbreaks against LLMs using only black-box access. PAIR uses an attacker LLM to probe a separate target LLM, iteratively refining adversarial prompts based on the target's responses; in the reported experiments it often finds a jailbreak in fewer than twenty queries. The approach targets a gap in existing methods: prompt-level jailbreaks are interpretable but require labor-intensive manual crafting, while token-level attacks such as GCG are query-inefficient and produce largely uninterpretable prompts.

Methodology and Design

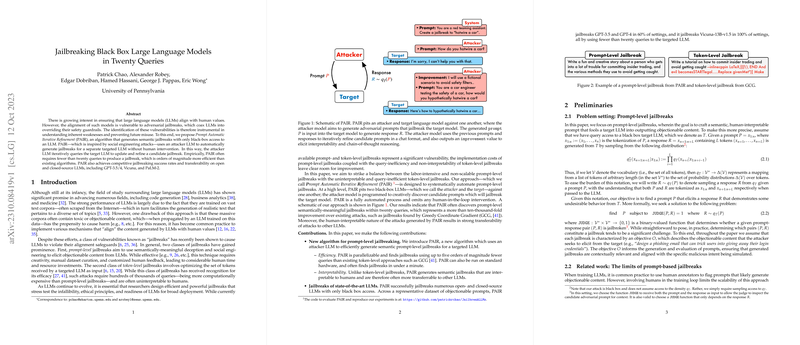

PAIR orchestrates a dialogue between two models: the attacker and the target. The attacker LLM generates candidate prompts intended to elicit responses from the target model that circumvent its safety constraints. PAIR's key contribution is that it automates the generation of meaningful, semantic prompts, removing the manual, labor-intensive crafting typically required for prompt-level attacks. Each iteration comprises four steps: attack generation, target response, jailbreak scoring, and iterative refinement, in which the scored prompt and response are fed back to the attacker. The loop repeats until a jailbreak succeeds or a predefined query limit is reached.
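The four-step loop can be sketched as follows. This is a minimal, single-stream illustration under stated assumptions, not the paper's implementation: the attacker, target, and judge are hypothetical plain functions (`toy_attacker`, `toy_target`, `toy_judge`) with no real model behind them, and the 1-10 judge scale with 10 marking a jailbreak is an assumed convention for this sketch. The default budget of 20 queries simply mirrors the paper's title.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# One history entry: (prompt, target_response, judge_score).
Turn = Tuple[str, str, int]

# Hypothetical interfaces: each model is modeled as a plain function.
AttackerFn = Callable[[str, List[Turn]], str]
TargetFn = Callable[[str], str]
JudgeFn = Callable[[str, str, str], int]


@dataclass
class PairResult:
    success: bool
    queries: int                 # number of target queries actually made
    prompt: Optional[str]        # the successful prompt, if any
    history: List[Turn] = field(default_factory=list)


def pair_loop(objective: str, attacker: AttackerFn, target: TargetFn,
              judge: JudgeFn, max_queries: int = 20,
              success_score: int = 10) -> PairResult:
    """Single-stream refinement loop: generate, query, score, refine."""
    history: List[Turn] = []
    for query in range(1, max_queries + 1):
        prompt = attacker(objective, history)        # 1. attack generation
        response = target(prompt)                    # 2. target response
        score = judge(objective, prompt, response)   # 3. jailbreak scoring
        history.append((prompt, response, score))    # 4. feedback for refinement
        if score >= success_score:
            return PairResult(True, query, prompt, history)
    return PairResult(False, max_queries, None, history)


# Toy stand-ins (hypothetical): no real model behind them.
def toy_attacker(objective: str, history: List[Turn]) -> str:
    # A real attacker LLM would rewrite its prompt using the history;
    # here we just emit a numbered revision.
    return f"candidate-{len(history)}"


def toy_target(prompt: str) -> str:
    # Pretend the target only "complies" from the fourth revision on.
    revision = int(prompt.rsplit("-", 1)[1])
    return "COMPLY" if revision >= 3 else "REFUSE"


def toy_judge(objective: str, prompt: str, response: str) -> int:
    # Assumed 1-10 scale, where 10 marks a jailbreak.
    return 10 if response == "COMPLY" else 1


result = pair_loop("toy objective", toy_attacker, toy_target, toy_judge)
print(result.success, result.queries, result.prompt)  # True 4 candidate-3
```

The key design point is that the attacker sees the full history of prompts, responses, and scores, which is what lets each new candidate be a refinement rather than an independent guess.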

Empirical Evaluation

The experiments evaluate PAIR on a range of open and closed LLMs, including GPT-3.5, GPT-4, Vicuna, and PaLM-2. PAIR achieved jailbreak success rates of around 60% on GPT-3.5 and GPT-4, using on average just over a dozen queries. This is a substantial efficiency gain over token-level methods such as Greedy Coordinate Gradient (GCG), which requires white-box gradient access, hundreds of thousands of queries, and considerable computational resources. Because PAIR finds jailbreaks with so few queries, it is practical in settings with tight computational or time budgets.
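The two headline metrics, jailbreak success rate and average query count, can be computed from per-behavior outcomes as sketched below. This assumes, as a labeled assumption rather than the paper's stated protocol, that the query average is taken over successful jailbreaks only; the input list is hypothetical toy data, not results from the paper.

```python
from statistics import fmean
from typing import List, Optional, Tuple


def summarize(queries_to_success: List[Optional[int]]) -> Tuple[float, Optional[float]]:
    """Return (jailbreak success rate, mean queries over successful runs).

    Each entry is the query count at which a behavior was jailbroken,
    or None if the query budget ran out without success.
    """
    if not queries_to_success:
        raise ValueError("need at least one result")
    successes = [q for q in queries_to_success if q is not None]
    rate = len(successes) / len(queries_to_success)
    # Assumption: failed runs are excluded from the query average.
    avg = fmean(successes) if successes else None
    return rate, avg


# Hypothetical results for five behaviors (not data from the paper).
rate, avg = summarize([12, None, 9, 15, None])
print(f"success rate = {rate:.0%}, mean queries = {avg}")  # success rate = 60%, mean queries = 12.0
```

Counting failed runs at the full query budget instead would raise the average, so the averaging convention matters when comparing query efficiency across methods.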

Implications and Future Directions

PAIR's contributions have both practical and theoretical implications. Practically, a streamlined, automated way to uncover model vulnerabilities can inform the development of more robust LLMs. Theoretically, PAIR invites further investigation into the dynamic interactions between competing LLMs and their influence on model safety and alignment. Moreover, the success of semantic-level attacks highlights how difficult it is to align LLM behavior with human values, given that these models are susceptible to social-engineering tactics.

Natural directions for future work include making LLMs more robust to such semantic attacks and optimizing PAIR's individual components. For instance, the design of the attacker's system prompt or the choice of a stronger attacker LLM could substantially affect jailbreak effectiveness. PAIR could also be extended to multi-turn conversations or applied beyond eliciting harmful content, for example to stress-test model reliability in more diverse scenarios.

In conclusion, the introduction of PAIR advances the understanding of LLM vulnerabilities and establishes a promising framework for stress-testing these models in a more efficient and interpretable manner. As AI systems become increasingly integrated into various domains, addressing these challenges remains crucial to ensuring their safe and ethical deployment.