The paper introduces a method for improving feature extraction from German business documents using extractive question answering (QA) models integrated into an information retrieval system (IRS). The work addresses the challenge of automating feature extraction from unstructured text in business processes such as customer service, insurance claims assessment, and medical literature review. The authors argue that generative large language models (LLMs) tend to hallucinate, which makes them less reliable for document analysis than extractive QA models. The paper focuses on fine-tuning existing German QA models to improve their performance in extracting complex linguistic features from domain-specific documents.

The authors investigate three questions: (1) how well QA models extract complex information from textual documents in specific industrial use cases, (2) how fine-tuning QA models affects performance across different domains and textual features, and (3) which metrics are appropriate for automated performance evaluation that resembles examination by human experts.

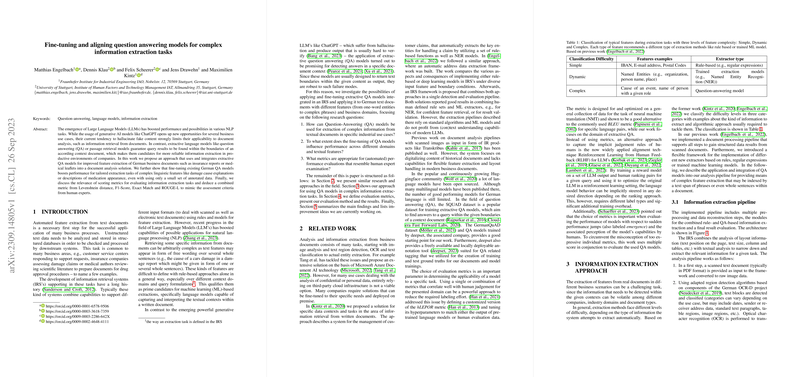

The paper classifies the difficulty levels of feature extraction into three categories: Simple (e.g., IBAN, email addresses), Dynamic (e.g., named entities), and Complex (e.g., cause of an event). QA models are particularly suitable for extracting complex features that are difficult to define with rule-based approaches.

The proposed information extraction pipeline includes several steps:

- Converting scanned text documents to raw image data.

- Detecting and classifying text blocks using region detection algorithms and OCR (optical character recognition).

- Saving the results as an extended document model containing region, text content, and coordinates.

- Restricting the search scope to relevant text regions using a rule-based approach.

- Querying the QA model with the extracted text and a suitable question.

- Performing a rule-based validation of the model answer.
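The last three steps above (scope restriction, QA query, rule-based validation) can be sketched as follows. This is a minimal illustration, not the authors' implementation: the `Region` fields, the keyword filter, the name pattern, and in particular `toy_qa_model` (a regex stand-in for a real extractive QA model) are all assumptions made for the example.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Region:
    """One detected text block of the extended document model (hypothetical layout)."""
    label: str    # region class, e.g. "heading" or "paragraph"
    text: str     # OCR text content
    bbox: tuple   # (x0, y0, x1, y1) page coordinates


def restrict_scope(regions: List[Region], keywords: List[str]) -> str:
    """Rule-based filter: keep only regions that mention any keyword."""
    hits = [r.text for r in regions
            if any(k.lower() in r.text.lower() for k in keywords)]
    return "\n".join(hits)


def validate(answer: str, pattern: str) -> Optional[str]:
    """Rule-based post-validation: accept the answer only if it matches the pattern."""
    answer = answer.strip()
    return answer if re.fullmatch(pattern, answer) else None


def extract_feature(regions: List[Region], keywords: List[str], question: str,
                    qa_model: Callable[[str, str], str], pattern: str) -> Optional[str]:
    """Restrict the search scope, query the QA model, then validate its answer."""
    context = restrict_scope(regions, keywords)
    if not context:
        return None
    return validate(qa_model(question, context), pattern)


def toy_qa_model(question: str, context: str) -> str:
    """Hypothetical stand-in for an extractive QA model (ignores the question)."""
    match = re.search(r"Gutachter:\s*(.+)", context)
    return match.group(1) if match else ""


regions = [
    Region("heading", "Schadenbericht", (0, 0, 100, 10)),
    Region("paragraph", "Gutachter: Max Mustermann", (0, 20, 100, 30)),
    Region("paragraph", "Ursache: Sturm", (0, 40, 100, 50)),
]
# Assumed validation rule: at least two capitalised name parts.
NAME_PATTERN = r"[A-ZÄÖÜ][\w-]+(?: [A-ZÄÖÜ][\w-]+)+"

result = extract_feature(regions, ["Gutachter"], "Wer ist der Gutachter?",
                         toy_qa_model, NAME_PATTERN)
print(result)
```

Passing the QA model in as a callable keeps the rule-based steps testable without loading a real model; in practice the callable would wrap the fine-tuned QA model.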

For domain-specific QA fine-tuning, the authors used the model gelectra-large-germanquad, which was pre-trained on GermanQuAD. They constructed two distinct datasets: a drug leaflet dataset consisting of 170 medication leaflet documents with three QA pairs per document (Ingredient, Look, Application) and an elemental damage report dataset consisting of 47 elemental damage reports with two QA pairs per document (Damage Cause, Assessor Name). The documents were annotated using the QA annotation tool Haystack.

The fine-tuning process involved 5-fold cross-validation with 80%/20% train/test splits to find the optimal hyperparameter settings, including epoch number, batch size, learning rate, and doc stride. Model performance was compared before and after fine-tuning using automatically computable metrics.
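To see why 5-fold cross-validation yields 80%/20% splits, the sketch below partitions document indices into five folds and uses each fold once as the test set. The function name `k_fold_splits`, the shuffling, and the seed are illustrative assumptions; the document counts (170 leaflets, 47 damage reports) come from the dataset description above.

```python
import random


def k_fold_splits(n_items, k=5, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of near-equal size.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


leaflet_splits = list(k_fold_splits(170))
report_splits = list(k_fold_splits(47))

for train, test in leaflet_splits:
    print(len(train), len(test))
```

With 170 documents every fold holds 34 documents, so each run trains on 136 and tests on 34. With 47 documents the folds cannot be equal, so the test share is only approximately 20%.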

The evaluation metrics used in the paper include:

- Manual Expert Assessment: A ground-truth baseline where model answers are manually evaluated by experts.

- EM (Exact Match): Measures the exact agreement of the model output with regard to the labeled answer(s) on a character basis.

$\mathcal{L}_{EM}^{(k)} = \min\bigg\{1,\; \sum_{i=1}^{N_k} \mathbb{1}\Big( \hat{y}^{(k)} = y_i^{(k)} \Big)\bigg\}$

where:

- $\mathcal{L}_{EM}^{(k)}$ is the exact match score for question $k$

- $N_k$ is the number of annotators for question $k$

- $\hat{y}^{(k)}$ is the model's response to question $k$

- $y_i^{(k)}$ is the labeled answer from annotator $i$ for question $k$

- $\mathbb{1}(\cdot)$ is the indicator function, equal to 1 if its argument is true and 0 otherwise

- Levenshtein Distance: Measures the number of single-character edit operations (insertion, deletion, substitution) needed to transform one string into the other.

- F1-score: Computes the harmonic mean of word-level precision and recall.

$\mathcal{L}_{F1}^{(k)} = \frac{1}{N_k} \sum_{i=1}^{N_k} \frac{2}{\frac{|\mathcal{S}_{\hat{y}}^{(k)}|}{|\mathcal{S}_{y_i}^{(k)} \cap \mathcal{S}_{\hat{y}}^{(k)}|} + \frac{|\mathcal{S}_{y_i}^{(k)}|}{|\mathcal{S}_{y_i}^{(k)} \cap \mathcal{S}_{\hat{y}}^{(k)}|}}$

where:

- $\mathcal{L}_{F1}^{(k)}$ is the F1-score for question $k$

- $N_k$ is the number of annotators for question $k$

- $\mathcal{S}_{\hat{y}}^{(k)}$ is the set of distinct words in the model prediction for question $k$

- $\mathcal{S}_{y_i}^{(k)}$ is the word-set of one of the labeled answers

- $|\cdot|$ is the set size, i.e. number of unique elements (words) in the set

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation)-L: Scores the overlap of two sequences by the length of their longest common subsequence.

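- Weighted Average: A weighted average of the automated metrics as a single score.

$\mathcal{L}_{WA} = \frac{\sum_{m \in \mathcal{M}} w_m \, \mathcal{L}_m}{\sum_{m \in \mathcal{M}} w_m}$

where:

- $\mathcal{L}_{WA}$ is the weighted average score

- $\mathcal{M}$ is the set of metrics {EM, Lev, F1, RGE}

- $w_m$ are the weights for each metric

- $\mathcal{L}_m$ is the value of each metric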
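The automated metrics listed above can be sketched in plain Python. This is an illustrative reading rather than the paper's code, and it rests on several assumptions: words are split on whitespace without normalization, the F1 term for an annotator with no word overlap is taken as 0, ROUGE-L is computed as an F-measure over word tokens against a single reference, the Levenshtein distance is turned into a similarity by dividing by the longer string's length, and the weighted average is normalized by the sum of the weights.

```python
def exact_match(prediction, references):
    """1 if the prediction equals at least one labeled answer, else 0."""
    return min(1, sum(prediction == ref for ref in references))


def f1_score(prediction, references):
    """Mean over annotators of the harmonic mean of word-set precision and recall."""
    pred_words = set(prediction.split())
    total = 0.0
    for ref in references:
        ref_words = set(ref.split())
        overlap = len(pred_words & ref_words)
        if overlap:  # assumption: no overlap contributes 0
            precision = overlap / len(pred_words)
            recall = overlap / len(ref_words)
            total += 2 / (1 / precision + 1 / recall)
    return total / len(references)


def levenshtein(a, b):
    """Number of character insertions, deletions, substitutions turning a into b."""
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            current.append(min(previous[j] + 1,                # deletion
                               current[j - 1] + 1,             # insertion
                               previous[j - 1] + (ca != cb)))  # substitution
        previous = current
    return previous[-1]


def levenshtein_similarity(a, b):
    """Assumed normalization: 1 for identical strings, 0 for maximal distance."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1 - levenshtein(a, b) / longest


def lcs_length(xs, ys):
    """Length of the longest common subsequence of two token lists."""
    previous = [0] * (len(ys) + 1)
    for x in xs:
        current = [0]
        for j, y in enumerate(ys, start=1):
            current.append(previous[j - 1] + 1 if x == y
                           else max(previous[j], current[j - 1]))
        previous = current
    return previous[-1]


def rouge_l(prediction, reference):
    """ROUGE-L as the F-measure of LCS-based precision and recall on word tokens."""
    pred, ref = prediction.split(), reference.split()
    lcs = lcs_length(pred, ref)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(pred), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


def weighted_average(values, weights):
    """Weighted mean of metric values, normalized by the sum of the weights."""
    return sum(weights[m] * values[m] for m in values) / sum(weights[m] for m in values)


prediction = "weiße runde Tablette"
references = ["weiße runde Tablette mit Bruchkerbe", "runde Tablette"]
scores = {
    "EM": exact_match(prediction, references),
    "Lev": levenshtein_similarity(prediction, references[0]),
    "F1": f1_score(prediction, references),
    "RGE": rouge_l(prediction, references[0]),
}
print(scores)
```

The example answer shows why exact match is a harsh criterion: the prediction is a correct but shortened description, so EM is 0 while the word-level metrics still give substantial credit.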

The experimental setup involved training two final models, one for the leaflet document use case and one for the damage report use case, using 80% of the data for training and 20% for testing. Model performances were compared before and after the training process.

The results indicate a notable increase in model performance for the specific tasks, with the degree of improvement varying across datasets and questions. The fine-tuned models showed significant improvements in extracting features like "Look" from the leaflet dataset and "Assessor Name" from the damage report dataset. The F1 and ROUGE-L metrics showed similar trends, while the EM (Exact Match) metric gave little insight into how useful a result is to a human reader. The weighted average score, combining Levenshtein, ROUGE-L, and F1, closely approximated the manual human expert evaluation.

The authors fitted a linear model whose coefficients weight the individual metrics so that the combined score resembles the manual expert assessment score. The model reconstructed the human scoring with 93.87% accuracy. However, the fitted weights did not generalize across datasets and tasks from different domains.
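One way to picture such a weight fit is ordinary least squares, sketched below in plain Python. This is an assumption-laden illustration: the summary does not state which linear model or fitting procedure the authors used, the fit here has no intercept, and every number in `metric_rows` and `true_weights` is synthetic, made up only so the example can check that known weights are recovered.

```python
def fit_weights(X, y):
    """Least squares without intercept: solve the normal equations (X^T X) w = X^T y."""
    n = len(X[0])
    # Augmented matrix [X^T X | X^T y].
    A = [[sum(row[i] * row[j] for row in X) for j in range(n)]
         + [sum(row[i] * target for row, target in zip(X, y))]
         for i in range(n)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(n):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][n] / A[i][i] for i in range(n)]


# Synthetic per-answer metric values in the order (Lev, RGE, F1).
metric_rows = [
    [0.9, 0.8, 0.7],
    [0.4, 0.6, 0.5],
    [1.0, 1.0, 1.0],
    [0.2, 0.1, 0.3],
    [0.7, 0.9, 0.6],
]
# Synthetic "expert scores" generated from known weights, so recovery can be checked.
true_weights = [0.2, 0.3, 0.5]
expert_scores = [sum(w * v for w, v in zip(true_weights, row)) for row in metric_rows]

fitted = fit_weights(metric_rows, expert_scores)
print([round(w, 6) for w in fitted])
```

Real expert scores would not be an exact linear function of the metrics, so the fitted weights would only approximate the human judgment, and weights fitted on one dataset need not carry over to another.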

In conclusion, the paper demonstrates that applying extractive QA models for complex feature extraction in industrial use cases leads to good performance. Fine-tuning these QA models significantly improves performance and supports document analysis automation. A weighted average of Levenshtein, ROUGE-L, and F1 effectively approximates manual human expert evaluation.

Future work will focus on further fine-tuning QA models, optimizing prompts, experimenting with multiple-choice questions, improving page segmentation and region detection, applying rule-based post-validation strategies, and investigating multi-modal QA models. The authors also plan to integrate the best results and models into their industrial platform solution Aikido.