The paper "Enhancing LLM Performance To Answer Questions and Extract Information More Accurately" explores techniques to improve the accuracy of LLM question answering, especially in the financial domain. The central problem addressed is the propensity of LLMs to "hallucinate" or provide inaccurate information, which is unacceptable in contexts requiring high precision, such as financial analysis. The authors employ fine-tuning methods and Retrieval Augmented Generation (RAG) to mitigate these issues.

The paper begins by introducing the challenges associated with LLMs, including their tendency to generate incorrect responses. It highlights the importance of addressing these inaccuracies, particularly in fields like finance, where even minor errors can have significant consequences. The adaptability of LLMs through techniques like zero-shot learning, few-shot learning, and fine-tuning is discussed, setting the stage for the methodologies employed in the paper.

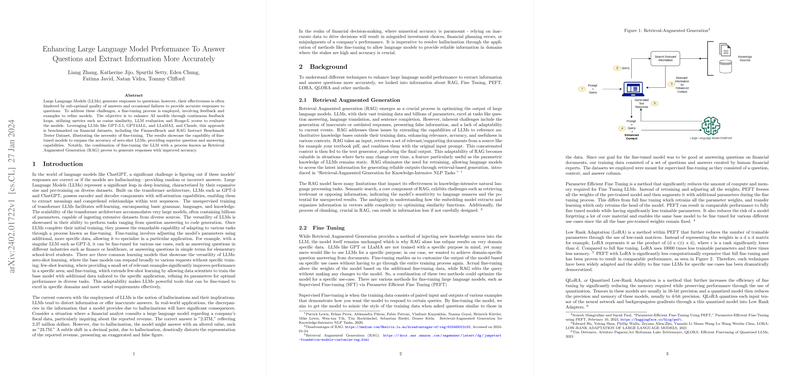

The paper investigates RAG, a technique designed to enhance the relevance and accuracy of LLM outputs by enabling them to reference external knowledge bases. RAG involves retrieving relevant documents and incorporating them into the input prompt, thereby grounding the LLM's responses in authoritative sources. The limitations of RAG, such as the potential for retrieving irrelevant information and the challenges associated with semantic search and chunking, are also acknowledged.
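The retrieve-then-prompt step described above can be sketched in a few lines. This is a minimal illustration, not the authors' pipeline: it assumes bag-of-words cosine similarity in place of a learned embedding model, and the function names (`retrieve`, `build_prompt`) and the prompt wording are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, chunks, k=2):
    """Return the k chunks most similar to the query (assumed scoring: word counts)."""
    q = Counter(tokenize(query))
    ranked = sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True)
    return ranked[:k]


def build_prompt(query, chunks, k=2):
    """Place the retrieved chunks in the prompt so the answer is grounded in them."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )
```

The limitation noted above is visible here: whatever `retrieve` returns is pasted into the prompt, so an irrelevant chunk ranked highly by the similarity function ends up in front of the model.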

Fine-tuning is presented as another method for customizing LLM outputs for specific use cases. Unlike RAG, fine-tuning modifies the model's parameters using additional data. The paper discusses several fine-tuning methods, including Supervised Fine-Tuning (SFT) and Parameter-Efficient Fine-Tuning (PEFT). SFT trains the model on paired inputs and outputs, while PEFT aims to reduce the computational resources required for fine-tuning. Low-Rank Adaptation (LoRA) and Quantized Low-Rank Adaptation (QLoRA) are introduced as PEFT techniques that further reduce the number of trainable parameters and the memory requirements.
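To make the parameter savings concrete, the sketch below compares the number of trainable parameters for one weight matrix under full fine-tuning and under LoRA, where the update is factored into two low-rank matrices. The layer size (4096 x 4096) and rank (8) are illustrative assumptions, not values reported in the paper.

```python
def full_finetune_params(d_out, d_in):
    """Trainable parameters when the whole d_out x d_in weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out, d_in, rank):
    """Trainable parameters for a LoRA update W + B @ A.

    B has shape (d_out, rank) and A has shape (rank, d_in); the original
    weight matrix W stays frozen.
    """
    return rank * (d_out + d_in)


# Illustrative sizes only (assumed, not taken from the paper).
d_out, d_in, rank = 4096, 4096, 8
full = full_finetune_params(d_out, d_in)   # 16,777,216
lora = lora_params(d_out, d_in, rank)      # 65,536
fraction = lora / full                     # 1/256, about 0.39%
```

QLoRA applies the same low-rank update on top of a quantized frozen base model, which lowers memory use further without changing the count above.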

The authors detail their process for enhancing question-answering accuracy through supervised fine-tuning of GPT-3.5 Turbo, reprompting on GPT4All and Llama 2, and benchmarking with RAG. They note the absence of standardized methodologies for evaluating model performance and describe the evaluation metrics they use, such as cosine similarity and ROUGE-L.
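Both metrics are simple to state. The sketch below is a plain-Python illustration under stated assumptions: ROUGE-L is computed as the F1 score over the longest common subsequence of whitespace-separated, lowercased tokens, and cosine similarity takes two vectors that are assumed to come from some embedding model. Library implementations may differ in tokenization, stemming, and the weighting of precision against recall.

```python
import math


def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(reference, candidate):
    """ROUGE-L as an F1 score over LCS length (assumes whitespace tokenization)."""
    ref, cand = reference.lower().split(), candidate.lower().split()
    lcs = lcs_length(ref, cand)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)
    recall = lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0
```

ROUGE-L rewards word overlap in order, so a correct answer phrased differently can score low; cosine similarity over embeddings is less sensitive to wording, which is one reason to report both.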

Two question-answering datasets were used for fine-tuning and testing: FinanceBench and RAG Instruct Benchmark tester. FinanceBench, developed by Patronus AI, comprises 10,231 questions about publicly traded companies, along with corresponding answers and evidence strings. The RAG Instruct Benchmark tester dataset, designed by LLMWARE, includes 200 questions with context passages from various 'retrieval scenarios' such as financial news and earnings releases.
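A record with a question, an answer, and an evidence string could be converted into a supervised fine-tuning example along the following lines. The sketch targets the chat-style JSONL layout used for fine-tuning OpenAI chat models (one JSON object with a `messages` list per line). The record keys (`question`, `answer`, `evidence`), the system prompt, and the way evidence is placed in the user turn are assumptions for illustration, not the datasets' actual schema or the authors' prompt.

```python
import json

# Hypothetical system prompt; the paper's actual wording is not given here.
SYSTEM_PROMPT = "You are a financial analyst. Answer using only the provided evidence."


def to_chat_example(record):
    """Map one QA record (assumed keys) to a chat fine-tuning example."""
    user = f"Evidence:\n{record['evidence']}\n\nQuestion: {record['question']}"
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user},
            {"role": "assistant", "content": record["answer"]},
        ]
    }


def to_jsonl(records):
    """Serialize records as JSON Lines, one training example per line."""
    return "\n".join(json.dumps(to_chat_example(r)) for r in records)
```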

A key aspect of the methodology is preprocessing the data to conform to the specific formats required by different LLMs. For example, the Llama 2 model requires data to be formatted with specific tags that distinguish system prompts from user prompts. The authors emphasize the importance of prompt engineering in guiding the model's behavior and reducing hallucinations.
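As an illustration of such tags, the Llama 2 chat models use `[INST]` and `<<SYS>>` markers to separate the system prompt, the user message, and the model's reply. The helper below is a minimal sketch for a single-turn example; the function name is hypothetical, and writing the `<s>` and `</s>` markers as literal text is an assumption, since many tokenizers add these special tokens themselves.

```python
def format_llama2(system, user, answer=None):
    """Build a single-turn Llama 2 chat string.

    With answer=None the result is an inference prompt; with an answer it is
    a complete training example. <s> and </s> are written literally here,
    which assumes the tokenizer is not also adding them.
    """
    prompt = f"<s>[INST] <<SYS>>\n{system}\n<</SYS>>\n\n{user} [/INST]"
    if answer is not None:
        prompt += f" {answer} </s>"
    return prompt
```

The same question-answer pair therefore has to be serialized differently for each model family, which is why the preprocessing step is model-specific.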

To enhance both question-answering and retrieval abilities, the authors employed several techniques. For question-answering, they utilized human-curated datasets to fine-tune the models. For retrieval, they explored Forward-looking active retrieval augmented generation (FLARE), an active approach that prompts the LLM to generate a hypothetical response and uses both the query and hypothetical response to search for relevant chunks.
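The retrieval idea described above, searching with both the query and a hypothetical response, can be sketched as follows. This covers only that single step and is not the full FLARE method. The `generate` callable stands in for an LLM call, `fake_generate` is a hard-coded placeholder, and Jaccard token overlap is an assumed stand-in for embedding similarity.

```python
import re


def tokens(text):
    """Set of lowercased alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap(a, b):
    """Jaccard overlap between token sets (stand-in for embedding similarity)."""
    ta, tb = tokens(a), tokens(b)
    return len(ta & tb) / len(ta | tb) if ta | tb else 0.0


def retrieve_with_draft(query, chunks, generate, k=1):
    """Draft a hypothetical answer, then search with query + draft."""
    draft = generate(query)
    search_text = f"{query} {draft}"
    ranked = sorted(chunks, key=lambda c: overlap(search_text, c), reverse=True)
    return draft, ranked[:k]


def fake_generate(query):
    """Placeholder for an LLM call; returns a fixed hypothetical answer."""
    return "Net income is reported on the income statement."
```

The draft answer contributes vocabulary ("net income", "income statement") that the question alone lacks, so chunks phrased like an answer can outrank chunks that merely repeat words from the question.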

The evaluation combined ROUGE-L, cosine similarity, and LLM-based evaluation. The authors tested multiple versions of each LLM, fine-tuned with varying amounts of labeled data, to assess the impact on performance. The trade-offs between open-source and closed-source LLMs, particularly with respect to data privacy and computational resources, are also discussed.

The results section presents plots showing that the ROUGE-L score and cosine similarity score increase as the number of labeled examples grows, demonstrating the effectiveness of fine-tuning. The conclusion reiterates the importance of human feedback and the limitations of simple RAG pipelines for domain-specific questions.

As next steps, the authors suggest further tuning of model parameters, experimentation with different embedding models, and the incorporation of unsupervised fine-tuning and reinforcement learning from human feedback (RLHF). They also plan to explore additional methods for improving retrieval, such as implementing a re-ranking algorithm and utilizing metadata annotations.