Enhancing Zero-Shot Chain-of-Thought Reasoning in LLMs through Logic

This research addresses the challenge of improving the reasoning capabilities of large language models (LLMs) by leveraging logical principles, particularly in zero-shot Chain-of-Thought (CoT) reasoning. Despite their substantial success across diverse applications, LLMs frequently suffer from hallucinations and untrustworthy deductions because they do not systematically apply logical principles during multi-step reasoning. The paper introduces the Logical Thoughts (LoT) prompting framework, which aims to enhance reasoning by systematically verifying and rectifying reasoning steps using principles rooted in symbolic logic, notably Reductio ad Absurdum.

The researchers identified a gap in the reasoning processes of LLMs: although these models possess extensive knowledge, they often fail to apply it systematically within coherent reasoning paradigms. This leads to logical errors and hallucinations, where LLMs might confidently assert incorrect statements. The LoT framework aims to address these issues by introducing a self-improvement mechanism that encourages models to think, verify, and revise reasoning steps iteratively.

Methodology and Implementation

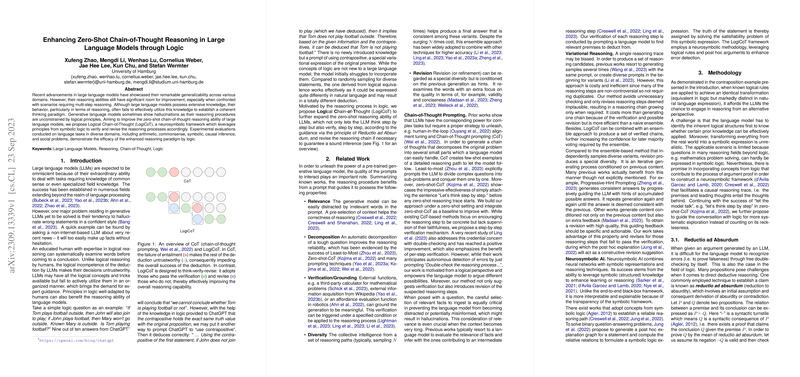

The LoT framework is built on the principle of Reductio ad Absurdum, which establishes a statement by assuming its negation and deriving a contradiction: if assuming ¬P leads to an absurdity, then P holds. Applying this principle, the authors propose a structured prompting strategy in which the LLM examines each reasoning step from opposing perspectives: the original step, its negation, and an explanation supporting each. The model then compares these and adopts the more plausible reasoning path, revising the step when the case against it prevails.
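A minimal Python sketch of this verify-and-revise loop follows. It assumes a hypothetical `llm` callable that maps a prompt string to the model's reply; the prompt wording, the X/Y verdict format, the helper names `verify_step` and `logical_thoughts`, and the `max_revisions` budget are all illustrative assumptions, not the authors' prompts or code. Each step is checked by requesting an argument for it and an argument for its negation, and the step is rewritten only when the argument against it is judged more plausible.

```python
from typing import Callable, List

# Hypothetical LLM interface: a prompt string in, the model's text reply out.
LLM = Callable[[str], str]


def verify_step(llm: LLM, question: str, premises: List[str], step: str) -> str:
    """Check one step against its negation; return it unchanged or revised.

    The prompt wording and the X/Y verdict format are illustrative assumptions.
    """
    context = "\n".join([question, *premises])
    # Argument that the step holds.
    support = llm(f"{context}\nExplain why this step is correct: {step}")
    # Argument for the negation of the step (the reductio branch).
    refute = llm(f"{context}\nExplain why this step is wrong: {step}")
    verdict = llm(
        f"{context}\nStep: {step}\n"
        f"Review X: {support}\nReview Y: {refute}\n"
        "Which review is more plausible? Answer X or Y."
    )
    if verdict.strip().upper().startswith("Y"):
        # The negation won: ask the model to rewrite the step using the critique.
        revised = llm(
            f"{context}\nThe step '{step}' is flawed because: {refute}\n"
            "Rewrite the step correctly:"
        )
        return revised.strip()
    return step


def logical_thoughts(llm: LLM, question: str, steps: List[str],
                     max_revisions: int = 3) -> List[str]:
    """Verify zero-shot CoT steps in order, regenerating later steps after a revision."""
    verified: List[str] = []
    revisions = 0
    i = 0
    while i < len(steps):
        step = steps[i]
        # Hypothetical budget: once it is spent, remaining steps are accepted unverified.
        checked = verify_step(llm, question, verified, step) if revisions < max_revisions else step
        verified.append(checked)
        if checked != step:
            revisions += 1
            # Later steps built on the flawed one, so regenerate them from the revised prefix.
            continuation = llm("\n".join([question, *verified]) + "\nContinue step by step:")
            steps = verified + [line.strip() for line in continuation.splitlines() if line.strip()]
        i += 1
    return verified
```

With a real model, `llm` would wrap an API client. In this sketch every verified step costs at least three extra model calls, which illustrates why the efficiency of the verification process matters in practice.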

LoT was evaluated on a variety of language tasks spanning arithmetic, commonsense reasoning, causal inference, and social reasoning, demonstrating the framework's versatility. The evaluation compared zero-shot CoT prompting augmented with LoT against standard zero-shot CoT prompting across several datasets and model sizes, including Vicuna and GPT-series models.
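The comparison described above can be sketched as a small evaluation grid over models and datasets. This is an illustrative assumption rather than the authors' harness: `accuracy`, `lot_gains`, and the `cot`/`lot` method signatures are hypothetical, and scoring is plain exact match on already-extracted final answers, whereas real benchmarks such as GSM8K and AQuA require extracting the answer from the model's free-form output.

```python
from functools import partial
from typing import Callable, Dict, List, Tuple

# Hypothetical interfaces: an LLM maps a prompt to text; a prompting method
# takes an LLM and a question and returns the model's final answer.
LLM = Callable[[str], str]
Method = Callable[[LLM, str], str]
Dataset = List[Tuple[str, str]]  # (question, gold answer) pairs


def accuracy(answer: Callable[[str], str], data: Dataset) -> float:
    """Fraction of questions whose predicted answer exactly matches the gold answer."""
    if not data:
        raise ValueError("dataset is empty")
    hits = sum(answer(q).strip() == gold.strip() for q, gold in data)
    return hits / len(data)


def lot_gains(models: Dict[str, LLM], datasets: Dict[str, Dataset],
              cot: Method, lot: Method) -> Dict[Tuple[str, str], float]:
    """Accuracy difference (LoT minus CoT) for every (model, dataset) pair."""
    gains: Dict[Tuple[str, str], float] = {}
    for model_name, llm in models.items():
        for data_name, data in datasets.items():
            # partial(cot, llm) turns the method into a question -> answer function.
            baseline = accuracy(partial(cot, llm), data)
            improved = accuracy(partial(lot, llm), data)
            gains[(model_name, data_name)] = improved - baseline
    return gains
```

A positive entry means LoT outperformed plain zero-shot CoT for that model on that dataset; keying results by model also makes it straightforward to check whether gains grow with model size.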

Results and Discussion

The results showed notable performance improvements when LoT was applied. LoT-enhanced prompting generally outperformed standard zero-shot CoT across multiple datasets; for instance, it improved accuracy on GSM8K, AQuA, and tasks involving complex commonsense reasoning. The gains were more consistent for larger models, suggesting that larger models are better able to integrate logical corrections into their reasoning.

The findings also revealed that LoT leads models to explore deeper reasoning paths, correcting missteps and revising their conclusions more effectively than standard CoT. This indicates that integrating logical verification steps yields more reliable and accurate reasoning. However, some limitations were observed: revisions occasionally failed to match the ground-truth answers, owing to inherent biases in LLM generations.

Implications for AI and Future Research

The introduction of LoT provides a structured pathway to systematically improve the reasoning capabilities of LLMs by embedding logical verification into their decision-making processes. This has significant implications for the deployment of AI systems in applications demanding high accuracy and reliability, such as autonomous decision-making and expert systems in critical fields.

Future research could extend the LoT methodology to few-shot settings or to other natural language understanding (NLU) tasks that benefit from structured reasoning. Improving the efficiency of the verification process and integrating LoT with neurosymbolic approaches could further increase the robustness of LLMs. Leveraging LoT within reinforcement learning frameworks such as reinforcement learning from AI feedback (RLAIF) could also help align AI behavior with human-like reasoning patterns, offering a pathway toward more autonomous and adaptive AI systems.