S-LoRA: Serving Thousands of Concurrent LoRA Adapters

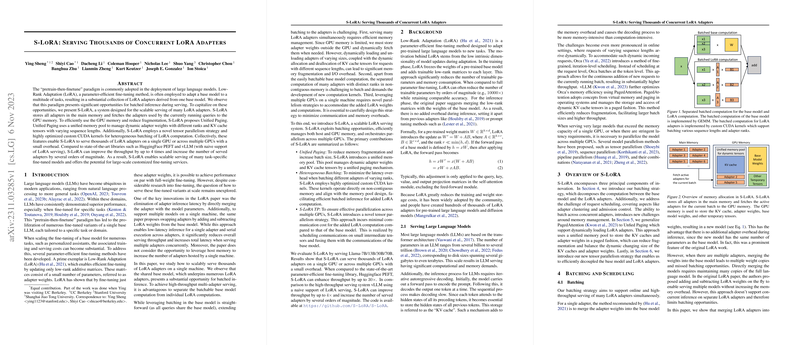

The pretrain-then-finetune paradigm for deploying LLMs has produced many fine-tuned variants of a single base model, each targeting a specific task. Low-Rank Adaptation (LoRA) is a parameter-efficient fine-tuning method that updates only low-rank additive matrices while keeping the base model intact. Because only these small matrices are trained and stored per task, the technique is resource-efficient while remaining effective. The paper presents and evaluates S-LoRA, a system designed to serve thousands of LoRA adapters efficiently.
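To make the low-rank update concrete, the following is a minimal pure-Python sketch of a LoRA-adapted linear layer. The names `W`, `A`, `B`, `lora_forward`, and `lora_param_count`, as well as the tiny shapes, are assumptions chosen for illustration and are not taken from the paper or from any library.

```python
# Minimal sketch of a LoRA-adapted linear layer (illustrative names and shapes).

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]

def lora_forward(x, W, A, B):
    """Return x @ W + (x @ A) @ B, leaving the base weight W untouched."""
    base = matmul(x, W)               # frozen base-model projection
    delta = matmul(matmul(x, A), B)   # low-rank update, without forming A @ B
    return [[b + d for b, d in zip(b_row, d_row)] for b_row, d_row in zip(base, delta)]

def lora_param_count(h_in, h_out, r):
    """Parameters in one adapter: A is h_in x r, B is r x h_out."""
    return r * (h_in + h_out)

x = [[1.0, 2.0]]                  # one token, hidden size 2
W = [[1.0, 0.0], [0.0, 1.0]]      # base weight (identity, for readability)
A = [[1.0], [1.0]]                # 2 x 1, so rank r = 1
B = [[0.5, -0.5]]                 # 1 x 2
y = lora_forward(x, W, A, B)      # [[2.5, 0.5]]
```

For a hypothetical 4096 x 4096 projection with rank 8, `lora_param_count(4096, 4096, 8)` gives 65,536 adapter parameters against roughly 16.8 million in the base weight, which is why many adapters can sit alongside one copy of the base model.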

Core Contributions

S-LoRA addresses several challenges inherent in the scalable serving of multiple LoRA adapters. Notable contributions include:

- Unified Paging: To mitigate GPU memory fragmentation and maximize memory utilization, S-LoRA introduces a unified memory pool. This pool manages both dynamic adapter weights and key-value (KV) cache tensors using a unified paging approach.

- Heterogeneous Batching: Custom CUDA kernels are implemented to support the heterogeneous batching of LoRA computations, accommodating varying sequence lengths and adapter ranks.

- Tensor Parallelism Strategy: A novel tensor parallelism strategy is introduced to manage LoRA computations efficiently across multiple GPUs, ensuring minimal communication overhead.
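The unified paging idea can be sketched as a single pool of fixed-size pages from which both KV cache entries and adapter weights are allocated. The class below is a toy model under stated assumptions: the `UnifiedPool` name, its methods, and the rule that one page holds one hidden-size vector (so a sequence of S tokens takes S pages and a rank-R adapter takes R pages) are simplifications made for this sketch, not the system's actual implementation.

```python
class UnifiedPool:
    """Toy unified memory pool: pages shared by KV cache and adapter weights."""

    def __init__(self, num_pages):
        self.free = list(range(num_pages))   # indices of free pages
        self.owner = {}                      # page index -> (kind, owner id)

    def alloc(self, kind, owner_id, n_pages):
        """Take n_pages (not necessarily contiguous); return None if the pool is full."""
        if n_pages > len(self.free):
            return None
        pages = [self.free.pop() for _ in range(n_pages)]
        for p in pages:
            self.owner[p] = (kind, owner_id)
        return pages

    def release(self, pages):
        """Return pages to the pool so any tensor kind can reuse them."""
        for p in pages:
            del self.owner[p]
            self.free.append(p)

pool = UnifiedPool(16)
kv_a = pool.alloc("kv", "req-1", 6)          # KV cache for a 6-token sequence
w = pool.alloc("adapter", "lora-7", 8)       # weights of a rank-8 adapter
too_big = pool.alloc("kv", "req-2", 4)       # only 2 pages left, so this fails
pool.release(kv_a)                           # request 1 finishes
kv_b = pool.alloc("kv", "req-2", 4)          # now fits, reusing freed pages
```

Because pages are interchangeable and need not be contiguous, memory freed by a finished request can immediately hold either another request's KV cache or a newly loaded adapter, which is the fragmentation benefit the bullet on Unified Paging describes.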

Technical Implementation

The architecture of S-LoRA is designed to achieve scalable serving of numerous adapters by partitioning the computation between the base model and the LoRA adapters. Key strategies implemented in S-LoRA include:

- Batching Strategy: The base-model computation is batched across all requests, separately from the LoRA computations. This separation enables efficient memory management and minimizes computational overhead.

- Adapter Clustering: To optimize batching efficiency, requests that share the same adapter are prioritized, reducing the number of active adapters in each batch.

- Memory Management: The concept of Unified Paging extends PagedAttention to accommodate the dynamic loading and unloading of adapter weights, alongside managing variable-sized KV cache tensors.

- Prefetching and Overlapping: A dynamic prediction mechanism prefetches adapter weights required for the next batch, overlapping I/O with ongoing computations to reduce latency overhead.
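The separation of base-model and LoRA computation can be illustrated with a short pure-Python sketch. It runs one matrix multiply over the whole batch for the shared base weight, then adds each request's low-rank term using that request's own adapter, whose rank may differ from the others. The function `batched_forward`, the adapter ids, and the data are hypothetical; S-LoRA performs this step with custom CUDA kernels, which this loop does not attempt to reproduce.

```python
def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]

def batched_forward(xs, adapter_ids, W, adapters):
    """xs holds one input row per request; adapter_ids[i] names the adapter for row i.

    adapters maps an id to (A, B) with A of shape h x r and B of shape r x h.
    Different adapters may use different ranks r.
    """
    out = matmul(xs, W)                       # one batched base-model matmul
    groups = {}                               # adapter id -> row indices using it
    for i, aid in enumerate(adapter_ids):
        groups.setdefault(aid, []).append(i)
    for aid, rows in groups.items():
        A, B = adapters[aid]
        delta = matmul(matmul([xs[i] for i in rows], A), B)
        for i, d in zip(rows, delta):         # scatter the update back to its row
            out[i] = [o + v for o, v in zip(out[i], d)]
    return out

W = [[1.0, 0.0], [0.0, 1.0]]
adapters = {
    "a": ([[1.0], [0.0]], [[1.0, 1.0]]),                             # rank 1
    "b": ([[1.0, 0.0], [0.0, 1.0]], [[2.0, 0.0], [0.0, 2.0]]),       # rank 2
}
xs = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
out = batched_forward(xs, ["a", "b", "a"], W, adapters)
# out == [[2.0, 3.0], [9.0, 12.0], [10.0, 11.0]]
```

Grouping rows by adapter is a convenience of this sketch so that each adapter's weights are read once per batch. It also hints at why fewer distinct adapters per batch is cheaper, which is the motivation behind prioritizing requests that share an adapter.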

Numerical Results

S-LoRA demonstrates substantial improvements over existing systems such as HuggingFace PEFT and vLLM with naive LoRA support. Key findings include:

- Throughput: S-LoRA improves throughput by up to 4 times compared to vLLM and by an order of magnitude compared to PEFT, while efficiently serving up to 2,000 adapters.

- Latency: The system maintains low latency, achieving first-token latencies favorable for real-time applications.

- Scalability: S-LoRA scales across multiple GPUs, maintaining efficiency and minimizing additional communication costs through effective tensor parallelism.

Implications

The efficient management and serving of numerous LoRA adapters have practical implications in scenarios requiring multiple task-specific fine-tuned models, such as personalized assistants and domain-specific models. The systems and techniques introduced in this paper could be extended to other parameter-efficient fine-tuning methodologies and model architectures beyond LoRA and transformers.

Future Directions

Further research may explore:

- Extended Adapter Methods: Incorporating additional parameter-efficient methods such as Prefix-tuning and Prompt Tuning.

- Advanced Memory Techniques: Enhancing memory management with more sophisticated paging and caching strategies.

- Multi-Stream Processing: Using multiple CUDA streams to parallelize base model and LoRA computations more effectively.

S-LoRA establishes a framework for the scalable serving of fine-tuned LLM variants, enabling large-scale customized fine-tuning services. Its methods and results mark a significant advance in the efficient serving of parameter-efficient fine-tuned models.