Overview of LLaSM: Large Language and Speech Model

The paper presents LLaSM, a large language and speech model that adds speech as a core interaction modality to multi-modal large language models. Most existing multi-modal models focus on vision and language. LLaSM instead follows instructions given as speech as well as text, which allows a more natural way of interacting with AI systems.

Methodology

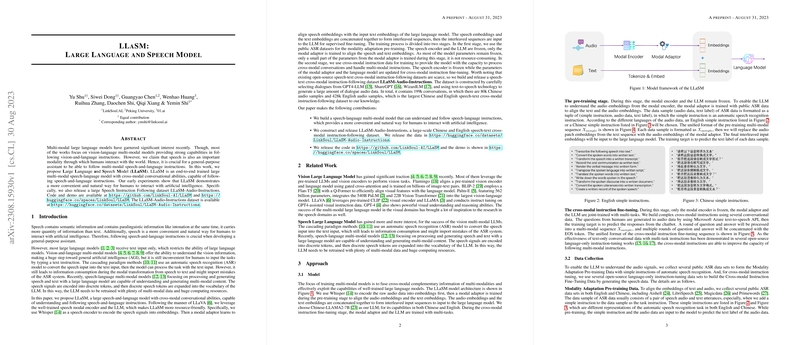

The authors introduce LLaSM as an end-to-end trained multi-modal model with cross-modal conversational abilities. The architecture combines a pre-trained speech encoder (Whisper) with a large language model (LLM). A modal adaptor maps the speech embeddings produced by Whisper into the LLM's text embedding space, so that speech and text embeddings can be interleaved in a single input sequence and processed together by the LLM.
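The adaptor's role can be illustrated with a minimal sketch. It assumes, for illustration only, that the adaptor is a single linear projection; the tiny dimensions, the class name `LinearAdaptor`, the helper `interleave`, and the random weights are all hypothetical, and plain Python lists stand in for the tensors a real implementation would use. Constant vectors stand in for Whisper output and for embedded text tokens.

```python
import random
from typing import List

Vector = List[float]

# Hypothetical, deliberately tiny dimensions (real models are far wider).
D_SPEECH = 4  # stands in for the Whisper encoder's output width
D_LLM = 6     # stands in for the LLM's token-embedding width


class LinearAdaptor:
    """Hypothetical modal adaptor: one linear projection from D_SPEECH to D_LLM."""

    def __init__(self, d_in: int, d_out: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Weight matrix of shape (d_in, d_out) and a bias vector of length d_out.
        self.weight = [[rng.uniform(-0.1, 0.1) for _ in range(d_out)] for _ in range(d_in)]
        self.bias = [0.0] * d_out

    def __call__(self, frames: List[Vector]) -> List[Vector]:
        # Project each speech frame independently: y = x @ W + b.
        return [
            [
                sum(x[i] * self.weight[i][j] for i in range(len(x))) + self.bias[j]
                for j in range(len(self.bias))
            ]
            for x in frames
        ]


def interleave(prefix: List[Vector], speech: List[Vector], suffix: List[Vector]) -> List[Vector]:
    """Splice projected speech embeddings between text embeddings into one sequence."""
    return prefix + speech + suffix


# Stand-ins: 3 Whisper frames, 2 prompt-token embeddings before the audio and 1 after.
whisper_frames = [[0.5] * D_SPEECH for _ in range(3)]
text_prefix = [[0.0] * D_LLM for _ in range(2)]
text_suffix = [[0.0] * D_LLM for _ in range(1)]

adaptor = LinearAdaptor(D_SPEECH, D_LLM)
sequence = interleave(text_prefix, adaptor(whisper_frames), text_suffix)
print(len(sequence), len(sequence[0]))  # 6 6
```

The key property is that, after projection, every position in the sequence has the same width as the LLM's token embeddings, so the LLM can consume speech and text positions uniformly.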

The training process is divided into two stages:

- Modality Adaptation Pre-training: The speech encoder and the LLM are frozen, and only the modal adaptor is trained, using automatic speech recognition (ASR) datasets. The goal is to align audio embeddings with text embeddings; because only the adaptor's parameters are updated, this stage is comparatively cheap in compute.

- Cross-modal Instruction Fine-tuning: The modal adaptor and the LLM are fine-tuned on cross-modal instruction data, while the speech encoder stays frozen. This stage teaches the model to follow instructions and hold conversations that mix speech and text.
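The freezing schedule of the two stages can be summarized in a small sketch. The module names and parameter counts are hypothetical placeholders, not figures from the paper; the point is only how small the updated share is in the first stage compared with the second.

```python
from typing import Dict, Set

# Hypothetical parameter counts in millions; placeholders, not figures from the paper.
PARAM_COUNTS: Dict[str, int] = {
    "speech_encoder": 600,
    "adaptor": 5,
    "llm": 7000,
}

# Modules that receive gradient updates in each stage; everything else is frozen.
TRAINABLE_BY_STAGE: Dict[str, Set[str]] = {
    "modality_adaptation_pretraining": {"adaptor"},
    "cross_modal_instruction_finetuning": {"adaptor", "llm"},
}


def trainable_fraction(stage: str) -> float:
    """Share of all parameters updated during the given stage."""
    trainable = TRAINABLE_BY_STAGE[stage]
    total = sum(PARAM_COUNTS.values())
    updated = sum(n for name, n in PARAM_COUNTS.items() if name in trainable)
    return updated / total


for stage, trainable in TRAINABLE_BY_STAGE.items():
    frozen = sorted(set(PARAM_COUNTS) - trainable)
    print(f"{stage}: frozen={frozen}, trainable share={trainable_fraction(stage):.4f}")
```

In a PyTorch implementation, a schedule like this would typically be applied by calling `requires_grad_(False)` on the frozen modules before building the optimizer.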

Data Collection

A significant contribution of the paper is the LLaSM-Audio-Instructions dataset, comprising 199,000 conversations and 508,000 samples. It supports cross-modal instruction tuning by pairing text conversations with speech generated by text-to-speech (TTS) systems, while the publicly available ASR datasets serve the modality-adaptation pre-training stage.
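On average, the reported totals work out to roughly 2.55 samples per conversation (508,000 / 199,000). The sketch below shows one hypothetical way to turn a text conversation into cross-modal samples: each user turn is synthesized to audio and paired with the assistant's text reply. `synthesize_speech` is a placeholder rather than a real TTS API, and the sample layout is an assumption, not the dataset's actual schema.

```python
from typing import Dict, List


def synthesize_speech(text: str) -> bytes:
    """Hypothetical stand-in for a TTS engine; a real one would return audio, not UTF-8 text."""
    return text.encode("utf-8")


def to_cross_modal_samples(conversation: List[Dict[str, str]]) -> List[Dict[str, object]]:
    """Pair each spoken user turn with the assistant's text reply (hypothetical layout).

    Assumes turns strictly alternate user, assistant, user, assistant, ...
    """
    samples: List[Dict[str, object]] = []
    for user_turn, reply_turn in zip(conversation[0::2], conversation[1::2]):
        if user_turn["role"] != "user" or reply_turn["role"] != "assistant":
            raise ValueError("expected alternating user/assistant turns")
        samples.append({
            "instruction_audio": synthesize_speech(user_turn["text"]),
            "instruction_text": user_turn["text"],  # kept alongside the audio for reference
            "response_text": reply_turn["text"],
        })
    return samples


conversation = [
    {"role": "user", "text": "What is the capital of France?"},
    {"role": "assistant", "text": "Paris."},
    {"role": "user", "text": "And of Japan?"},
    {"role": "assistant", "text": "Tokyo."},
]
samples = to_cross_modal_samples(conversation)
print(len(samples))  # 2
```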

Key Results

The experiments show that LLaSM can follow both speech and text instructions in English and Chinese. Feeding speech to the model directly, rather than first transcribing it with a separate speech-to-text system, removes a preprocessing step. This improves efficiency and lets the same model serve multiple languages and usage scenarios.
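The difference between a cascaded pipeline and LLaSM's direct approach is a matter of data flow, which a toy sketch can make visible. Every stage here is a hypothetical stub that only wraps its input in a label; no real ASR system, encoder, or LLM is involved.

```python
from typing import Callable, List

Stage = Callable[[str], str]


def run_pipeline(stages: List[Stage], audio: str) -> str:
    """Apply each stage in order, feeding every output into the next stage."""
    x = audio
    for stage in stages:
        x = stage(x)
    return x


# Hypothetical stubs: each one only wraps its input in a label.
def asr(audio: str) -> str:
    return f"transcript({audio})"


def embed_text(text: str) -> str:
    return f"text_emb({text})"


def speech_encoder(audio: str) -> str:
    return f"whisper({audio})"


def modal_adaptor(features: str) -> str:
    return f"adapt({features})"


def llm(embeddings: str) -> str:
    return f"llm({embeddings})"


cascaded = [asr, embed_text, llm]                  # speech -> transcript -> LLM
end_to_end = [speech_encoder, modal_adaptor, llm]  # speech -> embeddings -> LLM

print(run_pipeline(cascaded, "audio"))    # llm(text_emb(transcript(audio)))
print(run_pipeline(end_to_end, "audio"))  # llm(adapt(whisper(audio)))
```

In the end-to-end path no intermediate transcript is ever produced: the LLM receives adapted speech embeddings rather than text.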

Implications and Future Work

LLaSM addresses a gap in existing multi-modal models by showing that speech can be integrated as a core interaction modality, and that doing so is beneficial. It offers users a more natural way to interact with AI systems and lays groundwork for further work on conversational AI.

The release of the LLaSM-Audio-Instructions dataset gives future research a resource for building more sophisticated cross-modal instruction-following systems. The paper also suggests combining vision and audio modalities, pointing toward more holistic multi-modal AI systems.

Because LLaSM reuses a pre-trained LLM and speech encoder, and trains only the modal adaptor during pre-training, it is a comparatively resource-efficient approach with practical applications in human-AI interaction. Combining further sensory inputs could extend the capabilities of general-purpose AI systems.